Abstract

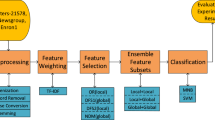

Text classification reduces time and space complexity by dividing the complete task into different classes. The main problem with text classification is the vast number of features extracted from textual data. Pre-processed datasets have many features, some of which are undesirable and act only as noise. In this paper, a novel approach to optimal text classification based on a nature-inspired algorithm and an ensemble classifier is proposed. In the proposed model, feature selection is performed with the Biogeography-Based Optimization (BBO) algorithm, together with an ensemble classifier (Bagging). Using an ensemble classifier delivers better performance for optimal text classification than an individual classifier and hence improves accuracy. Ensemble classifiers offset the weaknesses of individual classifiers, which on their own cannot match the classification results of the ensemble. The features selected by the BBO algorithm are then used to classify the text into various classes with six machine learning classifiers. The experimental results are computed on ten text classification datasets taken from the UCI repository and one real-time airline dataset. Four measures, namely Accuracy, Precision, Recall and F-measure, are used to validate the performance of our model with ten-fold cross-validation. For the feature selection process, a comparison is performed among state-of-the-art algorithms available in the literature. The results show that BBO for feature selection outperforms other similar nature-based optimization techniques. Our proposed approach of BBO with an ensemble classifier is also compared with techniques proposed by other researchers, and the results are analyzed quantitatively and qualitatively.
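As a rough illustration of the feature-selection step described above, the following is a minimal sketch of a binary BBO loop in plain Python. It is not the authors' implementation. The names `bbo_select` and `toy_fitness`, the parameter defaults, the linear migration model, and the elitism setting are all assumptions made for this example. In the proposed model the fitness would presumably be derived from classifier performance on the selected features; here a hypothetical stand-in rewards five "informative" features and penalizes the rest.

```python
import random

# Hypothetical stand-in for a real fitness such as cross-validated accuracy:
# features 0-4 are treated as informative, all other features as noise.
INFORMATIVE = {0, 1, 2, 3, 4}

def toy_fitness(mask):
    useful = sum(bit for i, bit in enumerate(mask) if i in INFORMATIVE)
    noisy = sum(bit for i, bit in enumerate(mask) if i not in INFORMATIVE)
    return useful - 0.1 * noisy

def bbo_select(fitness, n_features, pop_size=20, generations=50,
               mutation_rate=0.05, n_elite=2, seed=0):
    """Binary BBO sketch: each habitat is a 0/1 mask over the features."""
    rng = random.Random(seed)
    habitats = [[rng.randint(0, 1) for _ in range(n_features)]
                for _ in range(pop_size)]
    for _ in range(generations):
        # Rank habitats from best to worst fitness (habitat suitability).
        habitats.sort(key=fitness, reverse=True)
        # Linear migration model (an assumption): better habitats have a
        # higher emigration rate mu and a lower immigration rate lam.
        mu = [(pop_size - rank) / (pop_size + 1) for rank in range(pop_size)]
        lam = [1.0 - m for m in mu]
        new_habitats = [h[:] for h in habitats]
        # The first n_elite habitats are copied unchanged (elitism).
        for i in range(n_elite, pop_size):
            for d in range(n_features):
                if rng.random() < lam[i]:
                    # Migration: copy this feature bit from a source habitat
                    # chosen with probability proportional to its mu.
                    src = rng.choices(range(pop_size), weights=mu)[0]
                    new_habitats[i][d] = habitats[src][d]
                if rng.random() < mutation_rate:
                    # Mutation: flip the bit to keep some diversity.
                    new_habitats[i][d] = 1 - new_habitats[i][d]
        habitats = new_habitats
    return max(habitats, key=fitness)

best_mask = bbo_select(toy_fitness, n_features=12)
selected = [i for i, bit in enumerate(best_mask) if bit]
print("selected feature indices:", selected)
```

In a fuller pipeline, the returned mask would be used to keep only the selected columns of the document-term matrix before training the Bagging ensemble and scoring it with ten-fold cross-validation.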




Cite this article

Khurana, A., Verma, O.P. Novel approach with nature-inspired and ensemble techniques for optimal text classification. Multimed Tools Appl 79, 23821–23848 (2020). https://doi.org/10.1007/s11042-020-09013-2


DOI: https://doi.org/10.1007/s11042-020-09013-2