Abstract

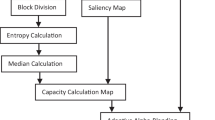

Just noticeable distortion (JND) and visual attention (VA) are two widely used mathematical models of the human visual system (HVS) that aim to simulate mechanisms of the human brain. Both have been extensively explored and applied in many areas of research, including digital watermarking. However, the activity of the human brain is extremely complex, and such models can be limited by the complicated fusion of spatial saliency in the image domain. In this paper, we propose a novel VA-guided JND model in which the final attention map is fused from low-level features using two laws of the Gestalt principle. First, we describe a classic JND model in the DCT domain, which consists of the spatial contrast sensitivity function (CSF), luminance adaptation (LA) and contrast masking (CM). The foveation effect and orientation features are considered when computing the CSF and CM factors. The foveation effect is affected by spatial attention, and the orientation features are modeled for the CM effect, together with the traditional block texture strength, through three direction-based AC coefficients in the DCT domain. The attention features are integrated with a novel Gestalt principle-based weighting mechanism to form the final block-based VA model, which is then used to modulate the JND profiles with two non-linear functions. Finally, the proposed VA-guided JND model is incorporated into a logarithmic spread transform dither modulation (L-STDM) watermarking scheme. Experimental results show that the proposed algorithm achieves good performance in terms of robustness and yields better visual quality.
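The last step above feeds the JND profile into a spread transform dither modulation (STDM) embedder. As a rough illustration of that underlying idea, here is a minimal Python/NumPy sketch: a host vector (for example, selected DCT coefficients of one block) is projected onto a spread vector `u`, the projection is moved to the nearest point of one of two dithered lattices with step `delta`, and the bit is decoded as the nearer lattice. This is plain STDM, not the authors' L-STDM: the logarithmic mapping of the projection is omitted. `step_from_jnd` and its `alpha` scaling are hypothetical and stand in for however the paper derives the step from its VA-guided JND profile.

```python
import numpy as np


def _unit(u):
    """Normalize the spread vector to unit length."""
    u = np.asarray(u, dtype=float)
    return u / np.linalg.norm(u)


def _quantize(p, bit, delta):
    """Nearest point to p on the dithered lattice for `bit`
    (offset 0 for bit 0, delta/2 for bit 1)."""
    d = bit * delta / 2.0
    return delta * np.round((p - d) / delta) + d


def stdm_embed(x, bit, u, delta):
    """Embed one bit into host vector x (e.g. selected DCT coefficients of a block)."""
    x = np.asarray(x, dtype=float)
    u = _unit(u)
    p = float(np.dot(x, u))            # projection onto the spread direction
    p_w = _quantize(p, bit, delta)     # move the projection onto the bit's lattice
    return x + (p_w - p) * u           # change x only along u


def stdm_extract(y, u, delta):
    """Minimum-distance decoding: return the bit whose lattice is closest."""
    p = float(np.dot(np.asarray(y, dtype=float), _unit(u)))
    dists = [abs(p - _quantize(p, b, delta)) for b in (0, 1)]
    return int(np.argmin(dists))


def step_from_jnd(jnd, u, alpha=1.0):
    """HYPOTHETICAL rule: step proportional to the JND-weighted norm of u.
    Stands in for however the paper derives delta from its VA-guided JND."""
    return alpha * float(np.linalg.norm(np.asarray(jnd, dtype=float) * _unit(u)))


# Usage on a toy 16-coefficient block with a flat JND of 4.0
rng = np.random.default_rng(0)
x = rng.normal(0.0, 20.0, size=16)
u = rng.normal(size=16)
delta = step_from_jnd(np.full(16, 4.0), u, alpha=2.0)
for bit in (0, 1):
    y = stdm_embed(x, bit, u, delta)
    print(bit, stdm_extract(y, u, delta))
```

Because the host is changed only along `u` and the projection moves by at most `delta / 2`, tying `delta` to the JND profile is what bounds the visible distortion per block. A larger step tolerates more attack noise (decoding stays correct while the projected noise is below `delta / 4`) at the cost of a more visible change.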

About this article

Cite this article

Wang, J., Wan, W. A novel attention-guided JND Model for improving robust image watermarking. Multimed Tools Appl 79, 24057–24073 (2020). https://doi.org/10.1007/s11042-020-09102-2

DOI: https://doi.org/10.1007/s11042-020-09102-2