Abstract

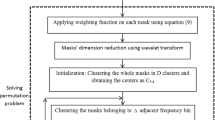

The recently proposed deep clustering-based algorithms represent a fundamental advance in solving the single-channel multi-speaker speech separation problem. These methods use the ideal binary mask (IBM) to construct the objective function and K-means clustering to estimate the IBM. However, when the sources belong to the same class or the number of sources is large, the assumption that each time-frequency unit of the mixture is dominated by only one source becomes weak, and IBM-based separation causes spectral holes or aliasing. Instead, we propose the quantized ideal ratio mask: the ideal ratio mask is quantized so that the output of the neural network takes only a limited number of possible values. The quantized ideal ratio mask is then used to construct the objective function for the case where multiple sources dominate a time-frequency unit, in order to improve network performance. Furthermore, we use a network framework that combines a residual network, a recurrent network, and a fully connected network to exploit correlation information across frequency. We evaluate our system on the TIMIT dataset and show a 1.6 dB signal-to-distortion ratio (SDR) improvement over the previous state-of-the-art methods.
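The difference between the binary mask and the quantized ratio mask can be illustrated for a single time-frequency unit. The following is a minimal sketch under stated assumptions, not the authors' implementation: it assumes a magnitude-ratio IRM (IRM_i = |S_i| / Σ_j |S_j|) and uniform quantization into a small number of levels, since the abstract specifies neither the exact IRM definition nor the quantization scheme. All function names are hypothetical.

```python
from typing import List


def ideal_ratio_mask(mags: List[float]) -> List[float]:
    """IRM for one time-frequency unit.

    mags[i] is the magnitude |S_i(t, f)| of source i. Assumed definition:
    IRM_i = |S_i| / sum_j |S_j|. Other work uses power ratios or a
    square-root form, and the paper's exact choice may differ.
    """
    total = sum(mags)
    if total == 0.0:
        # Silent unit: share it evenly so the masks still sum to 1.
        return [1.0 / len(mags)] * len(mags)
    return [m / total for m in mags]


def ideal_binary_mask(mags: List[float]) -> List[float]:
    """IBM: the single loudest source receives the whole unit (ties go to the first)."""
    winner = max(range(len(mags)), key=lambda i: mags[i])
    return [1.0 if i == winner else 0.0 for i in range(len(mags))]


def quantize_mask(mask: List[float], levels: int = 5) -> List[int]:
    """Map each mask value in [0, 1] to the index of the nearest of
    `levels` uniformly spaced values {0, 1/(levels-1), ..., 1}.
    Uniform spacing is an assumption made for illustration."""
    scale = levels - 1
    # Round half up, then clamp values that fall slightly outside [0, 1].
    return [min(scale, max(0, int(v * scale + 0.5))) for v in mask]


def dequantize_mask(indices: List[int], levels: int = 5) -> List[float]:
    """Convert level indices back to mask values in [0, 1]."""
    return [i / (levels - 1) for i in indices]


if __name__ == "__main__":
    equal = [1.0, 1.0, 1.0]  # three equally loud sources in one unit
    print(ideal_binary_mask(equal))         # [1.0, 0.0, 0.0]
    q = quantize_mask(ideal_ratio_mask(equal), levels=5)
    print(q, dequantize_mask(q, levels=5))  # [1, 1, 1] [0.25, 0.25, 0.25]
```

With three equally loud sources, the IBM assigns the whole unit to one speaker and leaves spectral holes in the other two, whereas every source keeps a share under the quantized IRM (0.25, the nearest of five levels to 1/3). Treating the level index as a class label is one way to restrict the network output to a limited set of values.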



Acknowledgements

This research is partially supported by the National Key R&D Program of China (No. 2017YFB1002803), the National Natural Science Foundation of China (No. U1736206, No. 61761044), and the Hubei Province Technological Innovation Major Project (No. 2017AAA123).


Electronic supplementary material

ESM 1 (RAR, 2270 kB)


About this article

Cite this article

Ke, S., Hu, R., Wang, X. et al. Single Channel multi-speaker speech Separation based on quantized ratio mask and residual network. Multimed Tools Appl 79, 32225–32241 (2020). https://doi.org/10.1007/s11042-020-09419-y
