Abstract

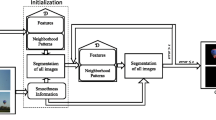

As an interesting and emerging topic, multiple foreground cosegmentation (MFC) aims at extracting a finite number of common objects from an image collection, which is useful for a variety of visual media applications. Although a number of approaches have been proposed to address this problem, many of them rely on misleading consistency information, suboptimal image representations, or inefficient segmentation assistance, and these limitations reduce their capability in real-world scenarios. To alleviate these limitations, we propose a novel unsupervised MFC framework composed of three components: unsupervised label generation, saliency based pseudo-annotation, and cosegmentation by MIML learning. Specifically, we combine high-level and low-level features to represent the object proposals, and adopt a novel SPAP clustering scheme to obtain more accurate consistency information about the common objects. The saliency based pseudo-annotation then lets us reformulate the MFC problem as a Multi-Instance Multi-Label (MIML) learning problem by label propagation. Finally, by introducing a novel ensemble MIML learning scheme, the consistency information about the common objects assists the segmentation of the images more efficiently and yields more accurate segmentation results. We evaluate our framework on widely used public databases, including the iCoseg, MSRC, and FlickrMFC datasets, for single and multiple common object cosegmentation respectively. Comparison results show that the proposed method achieves competitive accuracy and efficiency.

Acknowledgments

This work was supported by the National High Technology Research and Development Program of China (No. 2007AA01Z334), the National Natural Science Foundation of China (Nos. 61321491 and 61272219), the National Key Research and Development Program of China (Nos. 2018YFC0309100 and 2018YFC0309104), the China Postdoctoral Science Foundation (Grant No. 2017M621700), and the Innovation Fund of the State Key Laboratory for Novel Software Technology (No. ZZKT2018A09).

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix: SPAP clustering algorithm

The SPAP clustering algorithm we use is a novel clustering algorithm based on spectral clustering. Given a dataset Z = (z1, z2, z3, ..., zn), where n is the number of data points, spectral clustering first requires a similarity matrix, which we compute with the radial basis function (RBF) kernel:

\[ M_{ij} = \exp\left( -\frac{|| z_{i} - z_{j} ||^{2}_{2}}{2\sigma^{2}} \right) \]

M is the similarity matrix, \(|| z_{i} - z_{j} ||^{2}_{2}\) is the squared Euclidean distance between zi and zj, and σ is a free parameter, which we set to σ = 1 here. From the similarity matrix M, we obtain the adjacency matrix W, the degree matrix D, and the Laplacian matrix L = D − W. We then construct the standardized Laplacian matrix

\[ L_{s} = D^{-1/2} L D^{-1/2} \]

and compute the m smallest eigenvalues of Ls. Each eigenvalue has an associated eigenvector f of shape (n, 1). Finally, we stack these m eigenvectors as columns to construct an n × m matrix F, and we normalize F row by row to obtain the final eigenmatrix FS.
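The spectral embedding step described above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: the function name `spectral_embedding` is hypothetical, the kernel is assumed to be exp(−‖zi − zj‖² / (2σ²)), the adjacency matrix W is assumed to be the fully connected similarity matrix with a zero diagonal, and the standardized Laplacian is assumed to be the symmetric form D^(−1/2) L D^(−1/2).

```python
import numpy as np


def spectral_embedding(Z, m, sigma=1.0):
    """Return a row-normalized n x m eigenmatrix (F_S in the text)."""
    Z = np.asarray(Z, dtype=float)
    # Pairwise squared Euclidean distances ||z_i - z_j||_2^2.
    sq_dist = np.sum((Z[:, None, :] - Z[None, :, :]) ** 2, axis=-1)
    # RBF similarity matrix M (assumed kernel form, see lead-in).
    M = np.exp(-sq_dist / (2.0 * sigma ** 2))
    # Assumption: fully connected graph, W is M without self-loops.
    W = M.copy()
    np.fill_diagonal(W, 0.0)
    degrees = W.sum(axis=1)
    L = np.diag(degrees) - W
    # Assumption: symmetric normalization L_s = D^{-1/2} L D^{-1/2}.
    d_inv_sqrt = 1.0 / np.sqrt(degrees)
    L_s = d_inv_sqrt[:, None] * L * d_inv_sqrt[None, :]
    # eigh returns eigenvalues in ascending order, so the first m columns
    # are the eigenvectors belonging to the m smallest eigenvalues.
    _, eigvecs = np.linalg.eigh(L_s)
    F = eigvecs[:, :m]
    # Normalize every row of F to unit length to obtain F_S.
    norms = np.linalg.norm(F, axis=1, keepdims=True)
    return F / np.maximum(norms, 1e-12)
```

Calling `spectral_embedding(Z, m=2)` on an array `Z` of shape (n, d) returns an (n, 2) array whose rows have unit length. With σ = 1, widely separated points can drive a row of W to zero through underflow, so inputs may need rescaling in practice.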

Normally, spectral clustering applies the k-means algorithm to the matrix FS to obtain the clustering result. However, k-means requires the number of clusters, and the number of groups of common objects is unknown in practice. Hence, we replace k-means with affinity propagation, which does not need the number of clusters before clustering. Affinity propagation uses three quantities, similarity, responsibility, and availability, to jointly determine whether a point can serve as a cluster center. Such points are called exemplars. First, the similarity s(i,k) indicates how well the data point with index k is suited to be the exemplar for data point i. Here, index k refers to row k of the eigenmatrix FS. When the goal is to minimize squared error, each similarity is set to a negative squared error (Euclidean distance): for points FSi and FSk, \(s(i,k) = -|| FS_{i} - FS_{k} ||^{2}_{2}\). Alternatively, when appropriate, similarities may be set by hand. Because the self-similarity s(i,i) describes how suitable point i is to be chosen as an exemplar, we initially set every s(i,i) to the same value. Then, the responsibility r(i,k), sent from data point i to candidate exemplar point k, reflects the accumulated evidence for how well-suited point k is to serve as the exemplar for point i, taking into account other potential exemplars for point i. The availability a(i,k), sent from candidate exemplar point k to point i, reflects the accumulated evidence for how appropriate it would be for point i to choose point k as its exemplar, taking into account the support from other points that point k should be an exemplar. To begin with, the availabilities are initialized to zero: a(i,k) = 0. Then, the responsibilities are computed using the rule:

\[ r(i,k) \leftarrow s(i,k) - \max_{k' \neq k} \left\{ a(i,k') + s(i,k') \right\} \]

Whereas the above responsibility update lets all candidate exemplars compete for ownership of a data point, the following availability update gathers evidence from data points as to whether each candidate exemplar would make a good exemplar:

\[ a(i,k) \leftarrow \min \left\{ 0, \; r(k,k) + \sum_{i' \notin \{i,k\}} \max \left\{ 0, r(i',k) \right\} \right\} \]

According to the above rules, if the self-responsibility r(k,k) is negative, the availability of point k as an exemplar can be increased if some other points have positive responsibilities for point k being their exemplar. To limit the influence of strong incoming positive responsibilities, the total sum is thresholded so that it cannot go above zero. The self-availability a(k,k) is updated differently:

\[ a(k,k) \leftarrow \sum_{i' \neq k} \max \left\{ 0, r(i',k) \right\} \]

Hence, after some iterations, availabilities and responsibilities can be combined to identify exemplars. For point i, the value of k that maximizes r(i,k) + a(i,k) either identifies point i as an exemplar if k = i, or identifies the data point that is the exemplar for point i. The whole SPAP method is shown in Algorithm 1.
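The message-passing procedure described above can be sketched in plain Python as follows. This is an illustrative sketch under stated assumptions rather than the authors' code: the function names are hypothetical, the shared self-similarity value is left as a `preference` argument because the text does not specify it, and the damping factor and fixed iteration count are common practical additions that the appendix does not mention.

```python
def negative_squared_distances(points, preference):
    """Build s(i,k) = -||p_i - p_k||^2 and set every s(i,i) to `preference`."""
    n = len(points)
    S = [[-sum((a - b) ** 2 for a, b in zip(points[i], points[k]))
          for k in range(n)] for i in range(n)]
    for i in range(n):
        S[i][i] = preference  # same self-similarity for all points
    return S


def affinity_propagation(S, n_iter=200, damping=0.5):
    """Return, for each point i, the index of its exemplar (needs n >= 2)."""
    n = len(S)
    R = [[0.0] * n for _ in range(n)]  # responsibilities r(i,k)
    A = [[0.0] * n for _ in range(n)]  # availabilities a(i,k), start at zero
    for _ in range(n_iter):
        # r(i,k) <- s(i,k) - max_{k' != k} (a(i,k') + s(i,k'))
        for i in range(n):
            for k in range(n):
                best_other = max(A[i][kp] + S[i][kp]
                                 for kp in range(n) if kp != k)
                new_r = S[i][k] - best_other
                # Damping (assumption): blend old and new messages.
                R[i][k] = damping * R[i][k] + (1.0 - damping) * new_r
        for k in range(n):
            pos = [max(0.0, R[ip][k]) for ip in range(n)]
            total = sum(pos) - pos[k]  # sum over i' != k
            for i in range(n):
                if i == k:
                    # a(k,k) <- sum_{i' != k} max(0, r(i',k))
                    new_a = total
                else:
                    # a(i,k) <- min(0, r(k,k) + sum_{i' not in {i,k}} max(0, r(i',k)))
                    new_a = min(0.0, R[k][k] + total - pos[i])
                A[i][k] = damping * A[i][k] + (1.0 - damping) * new_a
    # For each point i, pick the k that maximizes r(i,k) + a(i,k).
    return [max(range(n), key=lambda k: R[i][k] + A[i][k]) for i in range(n)]
```

In the SPAP setting, `points` would be the rows of the eigenmatrix FS. The number of distinct values in the returned list is the number of clusters, which is not fixed in advance. A more negative `preference` generally yields fewer exemplars.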

Cite this article

Li, B., Sun, Z., Xu, J. et al. Saliency based multiple object cosegmentation by ensemble MIML learning. Multimed Tools Appl 79, 31299–31328 (2020). https://doi.org/10.1007/s11042-020-09458-5

DOI: https://doi.org/10.1007/s11042-020-09458-5