Abstract

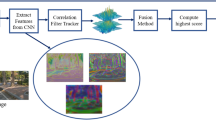

We propose the multilayer filter fusion network (MFFN) to address the problem of visual object tracking. In MFFN, a convolutional neural network (CNN) extracts multilayer spatial features, and a convolutional long short-term memory (LSTM) network then extracts the temporal features of the images. An object image centered at the target is cropped and fed into MFFN to obtain a correlation filter and a feature map that discriminate the target from the background. The correlation filter is convolved with the feature map of the same layer to produce a probability map, and the target position is estimated by searching for the maximum value of this map. Because the correlation filter is computed from the tracked object image fed into MFFN, it captures the appearance changes of the target. In our multilayer filter fusion tracking (MFFT) framework, we use two MFFNs with different inputs to track the target in a coarse-to-fine manner: the first estimates the target position from the entire image, and the second locates the target around this estimated position. Once trained off-line, the networks require no online learning during tracking. Experimental results on the CVPR2013 benchmark demonstrate that our tracking algorithm achieves competitive results compared with other tracking methods.
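The abstract describes localization only at a high level: correlate each layer's filter with the feature map of the same layer, turn the result into a probability map, take its maximum, and repeat the search coarse-to-fine. The sketch below is a minimal, hypothetical illustration of that pipeline, not the authors' implementation. It assumes details the abstract does not give: each layer is a single-channel 2-D map (real CNN/ConvLSTM features are multi-channel), the filters are supplied directly rather than produced by a trained MFFN, layers are fused by a fixed weighted sum, the probability map is a spatial softmax, and the coarse stage searches a max-pooled map. All function names are illustrative. As in CNN libraries, "convolution" here is cross-correlation (the filter is not flipped).

```python
import math
from typing import List, Tuple

Grid = List[List[float]]  # single-channel 2-D map, indexed [row][col]


def correlate_same(feature: Grid, filt: Grid) -> Grid:
    """Zero-padded 2-D cross-correlation, same output size as `feature`.
    As in CNN "convolution" layers, the filter is not flipped."""
    h, w = len(feature), len(feature[0])
    kh, kw = len(filt), len(filt[0])
    oy, ox = kh // 2, kw // 2  # anchor the filter at its centre
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    yy, xx = y + i - oy, x + j - ox
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += feature[yy][xx] * filt[i][j]
            out[y][x] = acc
    return out


def spatial_softmax(scores: Grid) -> Grid:
    """Turn a response map into a probability map (assumed normalization)."""
    peak = max(max(row) for row in scores)  # subtract the max for numerical stability
    exps = [[math.exp(v - peak) for v in row] for row in scores]
    total = sum(sum(row) for row in exps)
    return [[v / total for v in row] for row in exps]


def fused_probability_map(features: List[Grid], filters: List[Grid],
                          weights: List[float]) -> Grid:
    """Correlate each layer's filter with the same layer's feature map, then fuse
    the layers with a weighted sum (a hypothetical fusion rule)."""
    h, w = len(features[0]), len(features[0][0])
    fused = [[0.0] * w for _ in range(h)]
    for feat, filt, wt in zip(features, filters, weights):
        resp = correlate_same(feat, filt)
        for y in range(h):
            for x in range(w):
                fused[y][x] += wt * resp[y][x]
    return spatial_softmax(fused)


def argmax_2d(prob: Grid) -> Tuple[int, int]:
    """(row, col) of the largest value, i.e. the estimated target position."""
    _, y, x = max((v, y, x) for y, row in enumerate(prob) for x, v in enumerate(row))
    return y, x


def max_pool(grid: Grid, s: int) -> Grid:
    """Non-overlapping s x s max pooling, used here to build the coarse map."""
    h, w = len(grid), len(grid[0])
    return [[max(grid[yy][xx] for yy in range(y, min(y + s, h)) for xx in range(x, min(x + s, w)))
             for x in range(0, w, s)] for y in range(0, h, s)]


def crop(grid: Grid, cy: int, cx: int, r: int) -> Tuple[Grid, Tuple[int, int]]:
    """Window of radius r around (cy, cx), clipped to the map, plus its top-left offset."""
    y0, x0 = max(cy - r, 0), max(cx - r, 0)
    y1, x1 = min(cy + r + 1, len(grid)), min(cx + r + 1, len(grid[0]))
    return [row[x0:x1] for row in grid[y0:y1]], (y0, x0)


def coarse_to_fine(features: List[Grid], coarse_filters: List[Grid], fine_filters: List[Grid],
                   weights: List[float], stride: int = 2, radius: int = 2) -> Tuple[int, int]:
    # Stage 1: search the whole (pooled) map for a rough position.
    pooled = [max_pool(f, stride) for f in features]
    cy, cx = argmax_2d(fused_probability_map(pooled, coarse_filters, weights))
    cy, cx = cy * stride, cx * stride  # back to full resolution (top-left of the cell)
    # Stage 2: search a full-resolution window around the rough position.
    crops = [crop(f, cy, cx, radius) for f in features]
    oy, ox = crops[0][1]  # every layer shares the same window offset
    windows = [c[0] for c in crops]
    fy, fx = argmax_2d(fused_probability_map(windows, fine_filters, weights))
    return oy + fy, ox + fx


# Toy scene: the target is at (5, 3) in both layers; layer 1 also has a weaker distractor.
f0 = [[0.0] * 8 for _ in range(8)]
f1 = [[0.0] * 8 for _ in range(8)]
f0[5][3] = f1[5][3] = 1.0
f1[1][6] = 0.8
k = [[0.0, 0.5, 0.0], [0.5, 1.0, 0.5], [0.0, 0.5, 0.0]]
print(coarse_to_fine([f0, f1], [k, k], [k, k], [0.5, 0.5]))  # (5, 3)
```

Because the softmax is monotonic, it never moves the peak; it only makes the fused map sum to one so it can be read as a probability map. In the toy scene the coarse stage lands on the top-left corner of the pooled cell, (4, 2), and the fine stage, searching a full-resolution window around that estimate, corrects it to (5, 3).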

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Grant No. 61703350) and the Independent Research Project of the National Key Laboratory of Traction Power of China (Grant No. 2019TPL-T19).

About this article

Cite this article

Quan, W., Li, T., Zhou, N. et al. Visual tracking with multilayer filter fusion network. Multimed Tools Appl 80, 6493–6506 (2021). https://doi.org/10.1007/s11042-020-09852-z

DOI: https://doi.org/10.1007/s11042-020-09852-z