Abstract

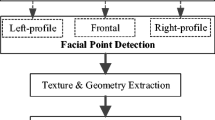

A high volume of images is shared on the public Internet each day. Many of these are photographs of people whose facial expressions and actions display various emotions. In this work, we examine the problem of classifying such images into broad emotion categories, including Bullying, Mildly Aggressive, Very Aggressive, Unhappy, Disdain and Happy. We propose Context-based Features for Classification of Emotions in Photographs (CFCEP). The proposed method first detects faces as foreground components and treats the other (non-face) information as background components in order to extract context features. Next, for each foreground and background component, we use the Hanman transform to study local variations in the component. The method combines the Hanman transform (H) values of the foreground and background components according to their merits, which yields two feature vectors. These two feature vectors are fused, using derived weights, into a single feature vector, which is then fed to a CNN classifier to classify images of different emotions uploaded on social media and the public Internet. Experimental results on our dataset of different emotion classes and on a benchmark dataset show that the proposed method is effective in terms of average classification rate: it achieves 91.7% on our 10-class dataset, 92.3% on the 5-class standard dataset and 81.4% on the FERPlus dataset. In addition, a comparative study with existing methods on the 5-class benchmark dataset, the standard facial expression dataset (FERPlus) and the 10-class dataset shows that the proposed method performs best in terms of scalability and robustness.
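The abstract does not define the Hanman transform or say how the fusion weights are derived, so the following is only a minimal Python sketch of the pipeline's shape under stated assumptions, not the authors' implementation. It assumes the face box is already given (for example by a detector such as Viola and Jones or Zhang et al. in the reference list), takes H in an assumed information-set form, the mean of I·exp(−μ·I) with a Gaussian membership μ, computed on non-overlapping windows, and uses hypothetical variance-share weights for fusion. The names `hanman_value`, `component_features` and `fuse` are illustrative only.

```python
import math
from typing import List, Tuple

Image = List[List[float]]        # grayscale image, intensities in [0, 1]
Box = Tuple[int, int, int, int]  # hypothetical face box: (row0, col0, row1, col1), end-exclusive


def hanman_value(window: List[float]) -> float:
    """ASSUMED information-set form: H = mean_i I_i * exp(-mu_i * I_i),
    where mu_i is a Gaussian membership of I_i about the window mean."""
    n = len(window)
    mean = sum(window) / n
    var = sum((v - mean) ** 2 for v in window) / n
    spread = 2.0 * var if var > 0 else 1.0  # flat windows: avoid dividing by zero
    total = 0.0
    for v in window:
        mu = math.exp(-((v - mean) ** 2) / spread)  # membership value in (0, 1]
        total += v * math.exp(-mu * v)              # intensity times exp(-mu * intensity)
    return total / n


def component_features(img: Image, face: Box, k: int = 2) -> Tuple[List[float], List[float]]:
    """Compute H on non-overlapping k x k windows. A window whose top-left corner
    lies inside the face box goes to the foreground vector, otherwise to background."""
    r0, c0, r1, c1 = face
    fg: List[float] = []
    bg: List[float] = []
    for r in range(0, len(img) - k + 1, k):
        for c in range(0, len(img[0]) - k + 1, k):
            window = [img[r + i][c + j] for i in range(k) for j in range(k)]
            in_face = r0 <= r < r1 and c0 <= c < c1
            (fg if in_face else bg).append(hanman_value(window))
    return fg, bg


def _variance(x: List[float]) -> float:
    """Population variance; 0.0 for an empty vector (e.g. no face windows)."""
    if not x:
        return 0.0
    m = sum(x) / len(x)
    return sum((v - m) ** 2 for v in x) / len(x)


def fuse(fg: List[float], bg: List[float]) -> List[float]:
    """HYPOTHETICAL weighting: each component's weight is its share of the total
    variance of its H values, so the component with more local variation counts
    more. The two weighted vectors are concatenated into one feature vector."""
    vf, vb = _variance(fg), _variance(bg)
    total = vf + vb
    wf, wb = (vf / total, vb / total) if total > 0 else (0.5, 0.5)
    return [wf * v for v in fg] + [wb * v for v in bg]


if __name__ == "__main__":
    # Toy 6x6 gradient image with a hypothetical face box over the top-left 4x4 region.
    img = [[(6 * r + c) / 35 for c in range(6)] for r in range(6)]
    fg, bg = component_features(img, face=(0, 0, 4, 4))
    fused = fuse(fg, bg)
    print(len(fg), len(bg), len(fused))  # 4 5 9
```

Running the module prints `4 5 9` for the toy image: four face windows, five background windows and nine fused features. In the described method the fused vector is then passed to a CNN classifier, which this sketch does not cover.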


References

Albanie S, Nagrani A, Vedaldi A, Zisserman A (2018) Emotion recognition in speech using cross-modal transfer in the wild. In: Proc. ACM MM, pp 292–301

Alexandre GR, Soares JM, Thé GAP (2020) Systematic review of 3D facial expression recognition methods. Pattern Recognition 100

Alzubi J, Nayyar A, Kumar A (2018) Machine learning from theory to algorithms: an overview. J Phys

Arora R, Suman (2012) Comparative analysis of classification algorithms on different datasets using Weka. Int J Comput Appl 54:21–25

Avots E, Sapinski T, Bachmann M, Kaminska D (2018) Audiovisual emotion recognition in the wild. Mach Vis Appl 30:975–985

Bachrach Y, Kosinski M, Graepel T, Kohli P, Stillwell D (2012) Personality and patterns of Facebook usage. In: Proc. ACM WebSci, pp 24–32

Barsoum E, Zhang C, Ferrer CC, Zhang Z (2016) Training deep networks for facial expression recognition with crowd-sourced label distribution. In: Proc. ACM ICMI, pp 279–283

Bharati A, Singh R, Vatsa M, Bowyer KW (2016) Detecting facial retouching using supervised deep learning. IEEE Trans IFS 11:1903–1913

Chen X, Qin Z, An L, Bhanu B (2016) Multi-person tracking by online learned grouping model with nonlinear motion context. IEEE Trans CSVT 26:2226–2239

Cheung M, She J, Jie Z (2015) Connection discovery using big data of user shared images in social media. IEEE Trans MM 17:1417–1428

Dwivedi R, Dey S (2018) Score level fusion for cancelable multi-biometric verification. Pattern Recognition Letters

Farzindar A, Inkpen D (2015) Natural language processing for social media. Synthesis Lectures on Human Language Technologies, Morgan and Claypool Publishers

Favaretto RM, Knob P, Musse SR, Vilanova F, Costa AB (2018) Detecting personality and emotion traits in crowds from video sequences. Mach Vis Appl 30:999–1012

Grover J, Hanmandlu M (2018) The fusion of multispectral palmprints using the information set based features and classifier. Eng Appl Artif Intell 67:111–125

Han H, Otto C, Liu X, Jain AK (2015) Demographic estimation from face images: Human vs. machine performance. IEEE Trans PAMI 37:1148–1161

Hsu SC, Chuang CH, Huang CL, Teng PR, Lin MJ (2018) A video based abnormal human behavior detection for psychiatric patient monitoring. In: Proc. IWAIT, pp 1–4

Hu Y, Manikonda L, Kambhampati S (2014) What we Instagram: A first analysis of Instagram photo content and user types. In: Proc. AAAI, pp 595–598

Jaiswal S, Virmani S, Sethi V, De K, Roy PP (2019) An intelligent recommendation system using gaze and emotion detection. Multimed Tools Appl 78:14231–14250

Krishnani D, Shivakumara P, Lu T, Pal U, Ramachandra R (2019) Structure function based transform features for behavior-oriented social media image classification. In: Proc. ACPR, pp 594–608

Kumar A, Sangwan SR, Arora A, Nayyar A, Abdel-Basset M (2019) Sarcasm detection using soft attention-based bidirectional long short-term memory model with convolution network. IEEE Access 7:23319–23328

Kumar A, Sangwan SR, Nayyar A (2019) Rumour veracity detection on Twitter using particle swarm optimized shallow classifiers. Multimed Tools Appl 78:24083–24101

Li S, Deng W (2019) Reliable crowdsourcing and deep locality preserving learning for unconstrained facial expression recognition. IEEE Trans Image Processing 28:356–370

Liu L, Preotiuc-Pietro D, Samani ZR, Moghaddam ME, Ungar L (2016) Analyzing personality through social media profile picture choice. In: Proc. ICWSM

Lu S, Guo S, Wang W, Qiao H, Wang Y, Luo W (2020) Multi-view Laplacian eigenmaps based on bag-of-neighbors for RGB-D human emotion recognition. Information Sciences 509:243–256

Mabrouk AB, Zagrouba E (2018) Abnormal behavior recognition for intelligent video surveillance systems: A review. Expert Syst Appl 91:480–491

Mukhopadhyay M, Pal A, Nayyar A, Pramanik PKD, Dasgupta N, Choudhury P (2020) Facial Emotion Detection to Assess Learner's State of Mind in an Online Learning System. In: Proc. ICIIT, pp 107–115

Mungra D, Agrawal A, Sharma P, Tanwar S, Obaidat MS (2020) PRATIT: a CNN-based emotion recognition system using histogram equalization and data augmentation. Multimed Tools Appl:2285–2307

Best-Rowden L, Han H, Otto C, Klare BF, Jain AK (2014) Unconstrained face recognition: identifying a person of interest from a media collection. IEEE Trans IFS 9:2144–2157

Roy S, Shivakumara P, Jain N, Khare V, Dutta A, Pal U, Lu T (2018) Rough fuzzy based scene categorization for text detection and recognition in video. Pattern Recognition 80:64–82

Said N, Ahmad K, Pogorelov K, Hassan L, Ahmad N, Conci N (2019) Natural disasters detection in social media and satellite imagery: a survey. Multimed Tools Appl 78:31267–31302

Sharma M, Jalal AS, Khan A (2019) Emotion recognition using facial expression by using key points descriptor and texture features. Multimed Tools Appl 78:16195–16219

Shehab D, Ammar H (2018) Statistical detection of panic behavior in crowded scenes. Mach Vis Appl 30:919–931

Tian S, Pan Y, Huang C, Lu S, Yu K, Tan CL (2015) Text Flow: A unified text detection system in natural scene images. In: Proc. ICCV, pp 4651–4659

Tiwari C, Hanmandlu M, Vasikarla S (2015) Suspicious face detection based on eye and other facial features movement monitoring. In: Proc. AIPR, pp 1–8

Tous R, Gomez M, Poveda J, Cruz L, Wust O, Makni M, Ayguade E (2018) Automated curation of brand-related social media images with deep learning. Multimed Tools Appl 77:27123–27142

Viola P, Jones MJ (2004) Robust real-time face detection. Int J Comput Vis 57(2):137–154

Wang T, Li B (2015) Sentiment analysis for social media images. In: Proc. ICDMW, pp 1584–1591

Wang D, Otto C, Jain AK (2017) Face search at scale. IEEE Trans PAMI 39:1122–1136

Xie S, Hu H (2019) Facial expression recognition using hierarchical features with deep comprehensive multi-patches aggregation convolutional neural networks. IEEE Trans Multimedia 21:211–220

Xu YW, Chen S (2016) Medical image fusion using discrete fractional wavelet transform. Biomed Signal Process Control 27:103–111

Xu G, Li W, Liu J (2020) A social emotion classification approach using multi-modal fusion. Future Gener Comput Syst 102:347–356

Yan Y, Zhang Z, Chen S, Wang H (2020) Low resolution facial expression recognition: A filter learning perspective. Signal Processing 169

Zhang K, Zhang Z, Li Z, Qiao Y (2016) Joint face detection and alignment using multi-task cascaded convolutional networks. IEEE Signal Process Lett 23:1499–1503

Zhang J, Wu C, Wang Y, Wang P (2018) Detection of abnormal behavior in narrow scene with perspective distortion. Mach Vis Appl 30:987–998

Zhang T, Zheng W, Cui Z, Zong Y, Li Y (2019) Spatial-temporal recurrent neural network for emotion recognition. IEEE Trans Cybernetics 49:839–847

Zheng Y, Iwana BK, Uchida S (2019) Mining the displacement of Max-pooling for text recognition. Pattern Recognit:558–569

Acknowledgements

Tong Lu, Palaiahnakote Shivakumara and Umapada Pal received support for this work from the Natural Science Foundation of China under Grant 61672273 and Grant 61832008, and the Science Foundation for Distinguished Young Scholars of Jiangsu under Grant BK20160021. Palaiahnakote Shivakumara received partial support for this work from the Faculty Grant: GPF014D-2019, University of Malaya, Malaysia. The authors would like to thank the authors of the paper [23] for sharing their dataset to facilitate experimentation and a comparative study. Special thanks to Swati Kanchan, Computer Vision and Pattern Recognition Unit, Indian Statistical Institute, Kolkata, for helping to conduct all the new experiments to revise the draft.


Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


About this article

Cite this article

Krishnani, D., Shivakumara, P., Lu, T. et al. A new context-based feature for classification of emotions in photographs. Multimed Tools Appl 80, 15589–15618 (2021). https://doi.org/10.1007/s11042-020-10404-8


DOI: https://doi.org/10.1007/s11042-020-10404-8