Abstract

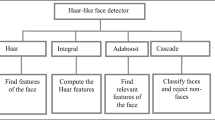

The era of video data has drawn users into creating, processing, and manipulating videos for various applications. Voluminous video data demands greater computational power and longer processing time. In this work, a model is developed that precisely acquires keyframes through hierarchical summarization and uses them to detect faces and assess the emotional intent of the user. Faces are detected in the keyframes using a recursive Viola-Jones algorithm, and the extracted faces are analyzed for emotion using an underlying architecture based on Deep Neural Networks (DNN). This work improves the accuracy of face detection and emotional analysis on non-redundant frames. Using local minima extraction, summarization retained fewer than 30% of the frames. The recursive routine introduced for face detection reduced false positives to less than 2% across all video frames. Emotion prediction on Indian faces acquired from the summarized frames achieved 90% accuracy. The computational requirement scaled down to 40%, because hierarchical summarization removed redundant frames and recursive face detection eliminated false face localizations. The proposed model emphasizes the importance of keyframe detection and its use in facial emotion recognition.
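The abstract does not state which per-frame signal the local minima extraction operates on, nor how the hierarchy is built. The following is a minimal sketch under two assumptions: each frame has already been reduced to a single change score (for example, the mean absolute pixel difference from the previous frame), and "hierarchical" means re-applying local-minima extraction to the survivors of the previous level. The functions `local_minima` and `hierarchical_keyframes` are hypothetical illustrations, not the authors' implementation.

```python
from typing import List, Sequence


def local_minima(indices: List[int], scores: Sequence[float]) -> List[int]:
    """Keep the members of `indices` whose score is strictly lower than the
    scores of their immediate neighbours *within* `indices`.

    Sequence ends are compared against +infinity, so an end frame can still
    qualify if it is lower than its single neighbour.
    """
    keep: List[int] = []
    for pos, idx in enumerate(indices):
        left = scores[indices[pos - 1]] if pos > 0 else float("inf")
        right = scores[indices[pos + 1]] if pos + 1 < len(indices) else float("inf")
        if scores[idx] < left and scores[idx] < right:
            keep.append(idx)
    return keep


def hierarchical_keyframes(scores: Sequence[float], levels: int = 2) -> List[int]:
    """Assumed hierarchy: each level keeps only the local minima among the
    frames that survived the previous level."""
    selected = list(range(len(scores)))
    for _ in range(levels):
        nxt = local_minima(selected, scores)
        if not nxt:  # a flat signal has no strict minima; keep the last level
            break
        selected = nxt
    return selected


if __name__ == "__main__":
    # Hypothetical per-frame change scores for an 11-frame clip.
    signal = [5, 3, 4, 6, 2, 7, 8, 1, 9, 4, 6]
    print(hierarchical_keyframes(signal, levels=1))  # [1, 4, 7, 9]
    print(hierarchical_keyframes(signal, levels=2))  # [7]
```

Each additional level discards more frames, which is one way a summarization stage could push the retained fraction below a target such as the 30% reported in the abstract; the actual scores, number of levels, and thresholds used in the paper are not given here.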

Author information

Contributions

The contributions by the authors for this research article are as follows: Michael Moses Thiruthuvanathan: conceptualization, methodology, formal analysis, data curation, visualization, and writing (original draft preparation); Balachandran Krishnan: result validation, data curation, resources, formal analysis, writing (review and editing), and supervision.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Thiruthuvanathan, M.M., Krishnan, B. Multimodal emotional analysis through hierarchical video summarization and face tracking. Multimed Tools Appl 81, 35535–35554 (2022). https://doi.org/10.1007/s11042-021-11010-y

DOI: https://doi.org/10.1007/s11042-021-11010-y