Abstract

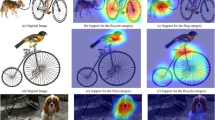

Deep convolutional neural networks have been widely studied and applied in many computer vision tasks. However, they are commonly treated as black boxes, and their decisions are difficult to explain. In this paper, we propose a novel method for visually interpreting convolutional neural networks in image classification. Our method generates a fine-grained, class-discriminative heatmap that highlights the input features contributing most to a specific prediction. Specifically, we combine a modified deconvolution with a pixel-wise Grad-CAM: the fine-grained heatmap and the discriminative mask are fused so that the result keeps the fine-grained detail of deconvolution while retaining the class discriminativeness of Grad-CAM, which improves the interpretability of the heatmap. Both qualitative and quantitative experiments are conducted on the ILSVRC 2012 and PASCAL VOC 2012 datasets. The results indicate that the proposed method achieves better visual quality with less noise than previous methods, especially when visualising small objects in simple contexts. Furthermore, the method achieves moderately effective performance on weakly supervised instance segmentation, whereas existing methods only work for weakly supervised object localisation.
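To make the fusion step concrete, the following is a minimal sketch in plain Python. It assumes the fusion is an element-wise product of a min-max-normalised heatmap and mask; the abstract does not state the exact fusion rule, so this rule, the helper names `normalize` and `fuse`, and the toy values are all illustrative assumptions rather than the method used in the paper.

```python
from typing import List

Matrix = List[List[float]]


def normalize(m: Matrix) -> Matrix:
    """Rescale a 2-D map to [0, 1]; a constant map becomes all zeros."""
    flat = [v for row in m for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        return [[0.0 for _ in row] for row in m]
    return [[(v - lo) / (hi - lo) for v in row] for row in m]


def fuse(heatmap: Matrix, mask: Matrix) -> Matrix:
    """Element-wise product of a heatmap and a class mask (assumed fusion rule)."""
    same_rows = len(heatmap) == len(mask)
    if not same_rows or any(len(h) != len(k) for h, k in zip(heatmap, mask)):
        raise ValueError("heatmap and mask must have the same shape")
    h, k = normalize(heatmap), normalize(mask)
    return [[a * b for a, b in zip(hr, kr)] for hr, kr in zip(h, k)]


# Toy 2x2 example (hypothetical values): the mask suppresses the right column.
deconv_map = [[0.0, 2.0], [4.0, 8.0]]   # stands in for a fine-grained heatmap
class_mask = [[1.0, 0.0], [1.0, 0.0]]   # stands in for a class-discriminative mask
fused = fuse(deconv_map, class_mask)
```

In this toy case only the pixel that is both salient in the heatmap and selected by the mask keeps a non-zero value, which is the intuition behind combining pixel-level detail with class-level selectivity.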

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. 61673395). We thank the anonymous editors and reviewers for their valuable comments on this article.

Cite this article

Si, N., Zhang, W., Qu, D. et al. Fine-grained visual explanations for the convolutional neural network via class discriminative deconvolution. Multimed Tools Appl 81, 2733–2756 (2022). https://doi.org/10.1007/s11042-021-11464-0
