Abstract

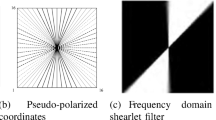

The fusion of infrared and visible images yields a single image that combines hidden targets with rich visible details. To improve the detail of the fused image while reducing artifacts and noise, an infrared and visible image fusion algorithm based on ResNet-152 is proposed. First, the source images are decomposed into a low-frequency part and a high-frequency part, and the low-frequency parts are fused with an average weighting strategy. Second, multi-layer features are extracted from the high-frequency parts with the ResNet-152 network. L1 regularization, a convolution operation, bilinear interpolation upsampling, and a maximum selection strategy are then applied to the feature layers to obtain the maximum weight layer, which is multiplied by the high-frequency part to give the new high-frequency part. Finally, the fused image is reconstructed from the fused low-frequency and high-frequency parts. Experiments show that the proposed method retains the salient features of the source images and recovers more texture detail. In addition, it effectively reduces artifacts and noise, and objective evaluation and visual observation consistently show that it outperforms the comparison algorithms.
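The abstract compresses the whole pipeline into a few sentences, so a minimal NumPy sketch of the fusion rules may help. It is not the authors' code (their implementation is in the repository listed under Data availability) and rests on several assumptions the abstract does not state: a mean (box) filter performs the low/high-frequency decomposition, the "convolution" step is a small averaging kernel, per-layer activity maps are merged by an elementwise maximum, and "maximum selection" is read as a binary choose-max weight between the two sources. The ResNet-152 feature extractor is passed in as a function `extract`; `toy_extractor` is a hypothetical stand-in so the sketch runs without a deep-learning framework.

```python
import numpy as np
from typing import Callable, List, Tuple

# Assumed interface: maps a 2-D high-frequency image to a list of (C, h, w)
# feature maps, e.g. activations from several ResNet-152 stages.
Extractor = Callable[[np.ndarray], List[np.ndarray]]


def box_filter(img: np.ndarray, radius: int) -> np.ndarray:
    """Mean over a (2r+1) x (2r+1) window with edge padding, via an integral image."""
    k = 2 * radius + 1
    padded = np.pad(img.astype(np.float64), radius, mode="edge")
    s = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    h, w = img.shape
    total = s[k:k + h, k:k + w] - s[:h, k:k + w] - s[k:k + h, :w] + s[:h, :w]
    return total / (k * k)


def bilinear_upsample(x: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Bilinear interpolation of a 2-D map to (out_h, out_w), corners aligned."""
    in_h, in_w = x.shape
    ys = np.linspace(0.0, in_h - 1, out_h)
    xs = np.linspace(0.0, in_w - 1, out_w)
    y0, x0 = np.floor(ys).astype(int), np.floor(xs).astype(int)
    y1, x1 = np.minimum(y0 + 1, in_h - 1), np.minimum(x0 + 1, in_w - 1)
    wy, wx = (ys - y0)[:, None], (xs - x0)[None, :]
    top = x[np.ix_(y0, x0)] * (1 - wx) + x[np.ix_(y0, x1)] * wx
    bottom = x[np.ix_(y1, x0)] * (1 - wx) + x[np.ix_(y1, x1)] * wx
    return top * (1 - wy) + bottom * wy


def activity_map(features: np.ndarray, out_shape: Tuple[int, int], radius: int = 1) -> np.ndarray:
    """L1 norm across channels, smoothing (assumed mean kernel), bilinear upsampling."""
    l1 = np.abs(features).sum(axis=0)   # (h, w): per-pixel L1 norm over C channels
    smoothed = box_filter(l1, radius)    # the "convolution" step
    return bilinear_upsample(smoothed, *out_shape)


def fuse(ir: np.ndarray, vis: np.ndarray, extract: Extractor, radius: int = 15) -> np.ndarray:
    if ir.shape != vis.shape:
        raise ValueError("source images must have the same shape")
    bases, details = [], []
    for img in (ir, vis):
        base = box_filter(img, radius)                  # low-frequency part (assumed mean filter)
        bases.append(base)
        details.append(img.astype(np.float64) - base)   # high-frequency part
    fused_base = 0.5 * (bases[0] + bases[1])            # average weighting

    # Per source: merge the upsampled per-layer activity maps by elementwise max.
    acts = [np.max([activity_map(f, ir.shape) for f in extract(d)], axis=0)
            for d in details]
    # Maximum selection: weight 1 where the IR detail is at least as active.
    w_ir = (acts[0] >= acts[1]).astype(np.float64)
    fused_detail = w_ir * details[0] + (1.0 - w_ir) * details[1]
    return fused_base + fused_detail                    # reconstruction


def toy_extractor(detail: np.ndarray) -> List[np.ndarray]:
    """Hypothetical stand-in for ResNet-152: two 'layers' at full and half resolution."""
    return [detail[None, :, :], np.abs(detail)[None, ::2, ::2]]
```

Replacing `toy_extractor` with a function that returns intermediate activations of a pretrained ResNet-152 would bring the sketch closer to the described method. The binary choose-max rule is only the simplest reading of "maximum selection"; normalized soft weights per layer are an equally plausible alternative.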

Data availability

The code and the test vector map data associated with this paper can be found at https://github.com/diylife/imagefusion_deeplearning.git.

Acknowledgements

This work is funded by the Natural Science Foundation Committee, China (Nos. 41761080 and 41930101) and by the Industrial Support and Guidance Project of Gansu Colleges and Universities (No. 2019C-04).

Author information

Contributions

LZ conceived and designed the study and wrote the manuscript; HL performed the experiments; RZ supervised the study; PD offered helpful suggestions and reviewed the manuscript. RZ and PD analyzed and evaluated the results.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Zhang, L., Li, H., Zhu, R. et al. An infrared and visible image fusion algorithm based on ResNet-152. Multimed Tools Appl 81, 9277–9287 (2022). https://doi.org/10.1007/s11042-021-11549-w

DOI: https://doi.org/10.1007/s11042-021-11549-w