Abstract

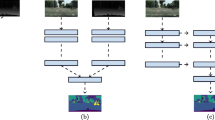

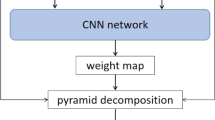

Multi-spectral image pairs composed of LIDAR and RGB images yield more effective detection performance in complex traffic environments, such as those with low illumination, motion blur, and strong noise. However, there is still a lack of research on how best to fuse the two modalities to improve the robustness and detection accuracy of the perception system of autonomous vehicles when visible-light image quality is low. In this paper, we propose a dual-modal image quality aware deep neural network (DMIQADNN). We compared and analyzed fusion architectures at the early, middle, late, and score stages and, weighing detection accuracy against detection speed, selected the middle-stage fusion architecture. In addition, we developed an image quality assessment network (IQAN) to compute an image quality score for RGB images. The corresponding fusion weights for the RGB and LIDAR sub-networks are adaptively assigned by the proposed fusion weight assignment function. Based on the calculated fusion weights, the RGB and LIDAR sub-networks are then adaptively merged via a data fusion sub-network. The RGB images in the KITTI dataset were degraded by reducing illumination and adding motion blur and Gaussian noise, producing a modified dataset of 7481 RGB-LIDAR image pairs, and the DMIQADNN was trained and tested on it using semi-automatic annotation. Experimental results on the modified KITTI benchmark and on a dataset collected with our self-developed autonomous vehicle validate the robustness and effectiveness of the proposed method. The DMIQADNN reaches 27 FPS and an AP of 39.1, which are superior to those of state-of-the-art instance segmentation networks.
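The dataset modification described above (reduced illumination, motion blur, Gaussian noise) can be illustrated with a minimal NumPy sketch. The function name `degrade_rgb` and all parameter values (`brightness`, `blur_len`, `noise_sigma`) are assumptions chosen for illustration; the abstract does not state the settings or the blur kernel used to build the modified KITTI dataset.

```python
import numpy as np


def degrade_rgb(image, brightness=0.4, blur_len=9, noise_sigma=10.0, seed=0):
    """Darken, horizontally motion-blur, and add Gaussian noise to an HxWx3 uint8 image.

    All default values are illustrative assumptions, not the paper's settings.
    blur_len is expected to be odd so the blur kernel is centred.
    """
    rng = np.random.default_rng(seed)
    img = image.astype(np.float64)

    # 1) Reduce illumination by scaling every pixel intensity.
    img *= brightness

    # 2) Motion blur: average each pixel with its horizontal neighbours
    #    (a 1 x blur_len box kernel), replicating edge pixels at the borders.
    pad = blur_len // 2
    padded = np.pad(img, ((0, 0), (pad, pad), (0, 0)), mode="edge")
    blurred = np.zeros_like(img)
    for k in range(blur_len):
        blurred += padded[:, k:k + img.shape[1], :]
    blurred /= blur_len

    # 3) Add zero-mean Gaussian noise, then clip back to the valid 8-bit range.
    noisy = blurred + rng.normal(0.0, noise_sigma, size=blurred.shape)
    return np.clip(np.rint(noisy), 0, 255).astype(np.uint8)
```

Calling `degrade_rgb(frame)` on an RGB frame loaded as a `uint8` array returns a degraded copy of the same shape; the paired LIDAR image is left untouched, so only the RGB modality loses quality.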

Abbreviations

- DMIQADNN: Dual-Modal Image Quality Aware Deep Neural Network
- IQA: Image Quality Assessment
- NR-IQA: No-Reference Image Quality Assessment
- IQAN: Image Quality Assessment Network
- MOS: Mean Opinion Score
- FR-IQA: Full-Reference Image Quality Assessment
- RRIQA: Reduced-Reference Image Quality Assessment
- CNNs: Convolutional Neural Networks
- DNN: Deep Neural Network
- PSN: Pattern Separation Network
- RGB-D: RGB + Depth Map
- MV3D-Net: Multi-View 3D Object Detection Network
- F-PointNet: Frustum Point Network
- AVOD-Net: Aggregate View Object Detection Network
- F-ConvNet: Frustum Convolutional Network
- 3D-CVF-Net: 3D Cross View Fusion Network
- CLOCs-Net: Camera-LiDAR Object Candidates Fusion Network
- FPN: Feature Pyramid Network
- YOLACT: You Only Look At CoefficienTs
- FPS: Frames Per Second
- AP: Average Precision
- IoU: Intersection over Union
- Mask-RCNN: Mask Region-based CNN
- DMOS: Differential Mean Opinion Score
- α and β: the azimuth and zenith angles when observing the point
- Δα and Δβ: the average horizontal and vertical angle resolution between consecutive beam emitters
- [x, y, z]: Cartesian coordinates of LIDAR points
- w_RGB: weight coefficient of the RGB sub-network
- δ: relative error
- λ: effect parameter
- IQ_RGB: the IQA score of the image
- IQ_T: the threshold value of the IQA score
- w_RGB and w_LIDAR: fusion weights for the RGB and LIDAR sub-networks
- P and R: Precision and Recall
- TP, FP, TN and FN: true positive, false positive, true negative and false negative
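The evaluation symbols listed above follow their standard definitions, P = TP / (TP + FP) and R = TP / (TP + FN), with IoU as the ratio of overlap to union. The sketch below is a minimal illustration of these definitions, not the paper's evaluation code: the function names are hypothetical, and IoU is computed on axis-aligned boxes for brevity, whereas an instance segmentation benchmark would typically compute it on masks.

```python
def precision_recall(tp, fp, fn):
    """Return (P, R) from true-positive, false-positive and false-negative counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


def box_iou(a, b):
    """IoU of two axis-aligned boxes given as (x_min, y_min, x_max, y_max)."""
    # Width and height of the overlap region (zero when the boxes are disjoint).
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h

    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0
```

A detection is usually counted as a true positive when its IoU with a ground-truth object exceeds a chosen threshold; the threshold itself is a benchmark setting and is not specified in this section.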

Acknowledgements

This study is supported by the National Natural Science Foundation of China (Grant No. 51905095), and National Natural Science Foundation of Jiangsu Province (Grant No. BK20180401).

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Cite this article

Geng, K., Dong, G. & Huang, W. Robust dual-modal image quality assessment aware deep learning network for traffic targets detection of autonomous vehicles. Multimed Tools Appl 81, 6801–6826 (2022). https://doi.org/10.1007/s11042-022-11924-1

DOI: https://doi.org/10.1007/s11042-022-11924-1