Abstract

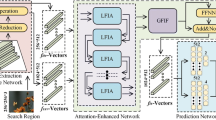

Anchor-free trackers have achieved great success in recent years, but they have two limitations. First, the receptive field of each layer in these models is fixed, which costs them flexibility. Second, they do not model global context effectively. To address both problems, this paper proposes SiamDAG. Its core component is the Global Context - Selective Kernel block, which dynamically adjusts its receptive field size based on multi-scale input information and models the global context so that the tracker gains a global understanding of the visual scene. In addition, an Intersection over Union (IoU) prediction branch is added to link the classification and regression tasks. We evaluated the tracker on the VOT2019, OTB100 and GOT-10k benchmark datasets, where it achieved good results. It also runs at up to 65 FPS, well above the real-time requirement.
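The IoU prediction branch is trained to estimate the overlap between a predicted box and the ground-truth box. The abstract does not state how that overlap target is computed, so the following is only a minimal sketch of the standard IoU measure, assuming axis-aligned boxes in corner format `(x1, y1, x2, y2)`. The names `Box` and `box_iou` are hypothetical and are not taken from the SiamDAG source code.

```python
from typing import Tuple

# Assumed box format: (x1, y1, x2, y2) with x1 <= x2 and y1 <= y2.
Box = Tuple[float, float, float, float]


def box_iou(a: Box, b: Box) -> float:
    """Return the Intersection over Union of two axis-aligned boxes."""
    # Corners of the overlapping rectangle (may be empty).
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])

    # Clamp at zero so disjoint boxes give zero intersection area.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)

    area_a = max(0.0, a[2] - a[0]) * max(0.0, a[3] - a[1])
    area_b = max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])
    union = area_a + area_b - inter

    # Two degenerate (zero-area) boxes have no meaningful overlap.
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    predicted = (0.0, 0.0, 2.0, 2.0)
    ground_truth = (1.0, 1.0, 3.0, 3.0)
    print(box_iou(predicted, ground_truth))
```

A value of this kind, computed per predicted box during training, is one plausible regression target for such a branch. At inference a predicted IoU could be used to weight classification scores, although the exact scheme used by SiamDAG is not described in this section.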

Data availability

All the training data sets and test data sets used in our experiment are public and can be downloaded from their official websites. The results of all published algorithms can also be obtained from the websites provided by their respective authors.

Code availability

The source code is available at https://github.com/Huangggjian/SiamDAG.

Funding

This research was funded by the National Natural Science Foundation of China (NSFC, 61972123) and the Zhejiang Provincial Key Lab of Equipment Electronics (2019E10009).

Author information

Contributions

Conceptualization, J.H. and Q.S.; Formal analysis, J.H. and Q.S.; Investigation, J.H., Q.S. and Z.L.; Methodology, Z.L. and C.Z.; Project administration, Q.S.; Resources, Q.S.; Supervision, B.Y.; Validation, J.H.; Writing—original draft, J.H. and Q.S.; Writing—review & editing, J.H. and C.Z.

Ethics declarations

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Consent for publication

Written informed consent for publication was obtained from all participants.

Conflict of interest

We declare that we do not have any commercial or associative interest that represents a conflict of interest in connection with the work submitted.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Sheng, Qh., Huang, J., Li, Z. et al. SiamDAG: Siamese dynamic receptive field and global context modeling network for visual tracking. Multimed Tools Appl 82, 681–701 (2023). https://doi.org/10.1007/s11042-022-12008-w

DOI: https://doi.org/10.1007/s11042-022-12008-w