Abstract

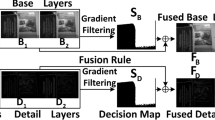

An important goal of multi-focus image fusion is to generate all-in-focus images that better retain the information in the source images while improving fusion quality and performance. However, traditional image fusion methods often suffer from block artifacts, artificial edges, halo effects, and reduced contrast. To solve these problems, this paper proposes a dual-path fusion network with an attention mechanism (A-DPFN) for multi-focus image fusion. First, our method splits the complete image into image blocks and preprocesses them to improve image classification, which accelerates the convergence of the proposed dual-path fusion network. Second, feature extraction block 1 (FEB1) and feature extraction block 2 (FEB2), each of which includes an attention mechanism, extract the feature information of the pair of focused images, one block per image. Finally, we merge the resulting pair of feature images as the input to the feature fusion block (FFB), and enhance image details through the down-sampling block (DB) and the up-sampling block (UB). Experimental results show that the method is robust and effectively avoids problems such as block artifacts and artificial edges. Compared with traditional image fusion methods, the proposed multi-focus image fusion method is more effective.
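The block-splitting step mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not state the block size, whether blocks overlap, or how image borders are handled, so the non-overlapping tiling, the dropped border remainder, and the names `split_into_blocks` and `pair_blocks` are all assumptions made for illustration, not the authors' implementation.

```python
from typing import List, Tuple

Image = List[List[float]]  # a grayscale image as rows of pixel values


def split_into_blocks(image: Image, block: int) -> List[Image]:
    """Split a 2-D image into non-overlapping block x block tiles (row-major).

    Assumption: border rows/columns that do not fill a whole tile are dropped.
    """
    if block <= 0:
        raise ValueError("block must be positive")
    height = len(image)
    width = len(image[0]) if height else 0
    tiles: List[Image] = []
    for top in range(0, height - block + 1, block):
        for left in range(0, width - block + 1, block):
            tiles.append([row[left:left + block] for row in image[top:top + block]])
    return tiles


def pair_blocks(img_a: Image, img_b: Image, block: int) -> List[Tuple[Image, Image]]:
    """Tile two equally sized source images and pair co-located tiles.

    Each pair holds the same region from both inputs, which is the kind of
    input a two-path network (one path per source image) would consume.
    """
    same_height = len(img_a) == len(img_b)
    same_width = all(len(ra) == len(rb) for ra, rb in zip(img_a, img_b))
    if not (same_height and same_width):
        raise ValueError("source images must have the same size")
    return list(zip(split_into_blocks(img_a, block), split_into_blocks(img_b, block)))
```

For a 4 x 4 image and `block=2`, `split_into_blocks` returns four 2 x 2 tiles in row-major order. A real pipeline would likely operate on array tensors rather than nested lists; plain lists are used here only to keep the sketch dependency-free.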

Acknowledgements

The authors acknowledge the support of the National Natural Science Foundation of China (Grant nos. 61772319, 61976125) and the Shandong Natural Science Foundation of China (Grant no. ZR2017MF049).

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Yu, N., Li, J. & Hua, Z. Attention based dual path fusion networks for multi-focus image. Multimed Tools Appl 81, 10883–10906 (2022). https://doi.org/10.1007/s11042-022-12046-4

DOI: https://doi.org/10.1007/s11042-022-12046-4