Abstract

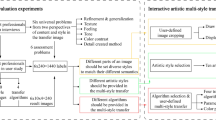

Creating unique visual experiences by composing a complex interplay between the content and the style of an image is an exciting technique that has been extended to artworks, creative design, video processing and other fields. Image style transfer technology is used to render images in colorful styles automatically. Most existing research focuses on transferring style to the whole image or to a single region of it, which inevitably falls short in practical applications. In this work, we introduce an approach that applies differentiated stylization to different regions of an image. Taking the human visual attention mechanism into account, we label the salient regions in the training dataset and use them to train a semantic segmentation model. The structure of the neural style transfer model is simplified to improve computational efficiency. In our approach, each local target region in the image is stylized evenly and carefully. The different regions are well integrated to achieve a more realistic and pleasing effect, while also achieving higher efficiency. We perform separate experiments on the Cityscapes and Microsoft COCO 2017 datasets. Compared with several previously reported methods, our approach shows improved performance, with accuracy and efficiency used as the performance metrics.
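The region-wise idea in the abstract can be illustrated with a minimal compositing sketch. It assumes that a segmentation model has already produced an integer label map and that each target region has already been stylized separately, for example by a feed-forward style transfer network. The function names `composite_regions` and `_box_blur` are hypothetical. So is the box-filter "feathering" used to soften region boundaries. None of these should be read as the authors' implementation.

```python
import numpy as np


def _box_blur(mask, radius):
    """Separable box blur with edge padding (hypothetical feathering helper)."""
    if radius <= 0:
        return mask
    k = np.full(2 * radius + 1, 1.0 / (2 * radius + 1))

    def blur1d(v):
        # Edge padding keeps the output length equal to the input length
        # and maps a constant signal to itself.
        return np.convolve(np.pad(v, radius, mode="edge"), k, mode="valid")

    out = np.apply_along_axis(blur1d, 1, mask)  # blur along rows
    return np.apply_along_axis(blur1d, 0, out)  # then along columns


def composite_regions(content, stylized, labels, feather_radius=0):
    """Blend per-region stylized images into one output (illustrative sketch).

    content: (H, W, 3) original image, used wherever no style is assigned.
    stylized: dict mapping a region label to an (H, W, 3) stylized image.
    labels: (H, W) integer label map from a segmentation model.
    """
    content = np.asarray(content, dtype=np.float64)
    # Pixels whose label has no assigned style keep the original content.
    unstyled = ~np.isin(labels, list(stylized.keys()))
    layers = [(content, unstyled)] + [
        (np.asarray(img, dtype=np.float64), labels == rid)
        for rid, img in stylized.items()
    ]
    out = np.zeros_like(content)
    for img, mask in layers:
        # The masks partition the image and the blur is linear, so the
        # blurred weights still sum to 1 at every pixel.
        w = _box_blur(mask.astype(np.float64), feather_radius)
        out += w[..., None] * img
    return out
```

With `feather_radius=0`, each pixel is copied from the stylized image of its own region. A positive radius produces a weighted mix near region borders, which is one simple way to make neighbouring stylized regions blend together rather than meet at a hard edge.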



Acknowledgements

This work is supported by the Key Project of the Hebei Provincial Department of Education (ZD2020304).


Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


Cite this article

Yang, W., Zhenxin, Y. & Haiyan, L. Research on style transfer for multiple regions. Multimed Tools Appl 81, 7183–7200 (2022). https://doi.org/10.1007/s11042-022-12121-w


DOI: https://doi.org/10.1007/s11042-022-12121-w