Abstract

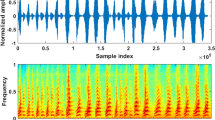

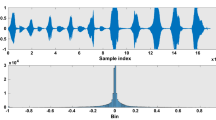

This paper argues that person authentication systems must accommodate hearing-impaired (HI) and dysarthric speakers. An automated speaker identification system is evaluated using perceptual features, with critical band analysis performed on several non-linear frequency scales, together with vector quantization (VQ) and fuzzy C-means (FCM) iterative clustering templates and multi-variant hidden Markov models (MHMM) as representations of HI or dysarthric speakers. In the training phase, the speech utterances are first pre-processed with voice activity detection, pre-emphasis, frame blocking, and windowing. Perceptual features are then extracted, and VQ and FCM clustering models and MHMM models are created for each speaker, with the study repeated over varying cluster and mixture sizes. In the testing phase, features are extracted from the test utterances and applied to the templates; classification uses a minimum-distance criterion for the clustering techniques and a maximum log-likelihood criterion for the MHMM technique. With decision-level fusion classification over perceptual features whose critical band analysis is done in the MEL, BARK, and ERB scales, the algorithm achieves an overall accuracy of 100% for all clusters across the cluster sizes studied, for both hearing-impaired and dysarthric speaker recognition. Decision-level fusion classification using the FCM and MHMM techniques yields lower overall accuracy than the VQ technique.
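
The VQ branch of the train-and-test procedure described above can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes feature vectors have already been extracted, uses plain k-means as a stand-in for the iterative clustering, and classifies by minimum average distortion. The function names (`train_codebook`, `distortion`, `identify`), the speaker labels, the feature dimension, and the synthetic Gaussian data are all hypothetical.

```python
import numpy as np

def train_codebook(features, n_clusters, n_iter=20, seed=0):
    """Build a VQ codebook with plain k-means (assumed stand-in for the paper's clustering)."""
    rng = np.random.default_rng(seed)
    # Initialise the codebook from randomly chosen training vectors.
    start = rng.choice(len(features), n_clusters, replace=False)
    codebook = features[start].copy()
    for _ in range(n_iter):
        # Distance from every feature vector to every code vector.
        dists = np.linalg.norm(features[:, None, :] - codebook[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for k in range(n_clusters):
            members = features[labels == k]
            if len(members):  # leave an empty cluster's code vector unchanged
                codebook[k] = members.mean(axis=0)
    return codebook

def distortion(features, codebook):
    """Average distance from each test vector to its nearest code vector."""
    dists = np.linalg.norm(features[:, None, :] - codebook[None, :, :], axis=2)
    return dists.min(axis=1).mean()

def identify(features, codebooks):
    """Minimum-distance decision: pick the speaker whose codebook fits best."""
    return min(codebooks, key=lambda spk: distortion(features, codebooks[spk]))

# Synthetic 12-dimensional "features" for two hypothetical speakers.
rng = np.random.default_rng(1)
train = {
    "spk_a": rng.normal(0.0, 1.0, (200, 12)),
    "spk_b": rng.normal(3.0, 1.0, (200, 12)),
}
codebooks = {spk: train_codebook(f, n_clusters=8) for spk, f in train.items()}

# A test utterance drawn from the same distribution as spk_b.
test_utterance = rng.normal(3.0, 1.0, (50, 12))
print(identify(test_utterance, codebooks))
```

Varying `n_clusters` in this sketch corresponds to the cluster-size study mentioned in the abstract. An MHMM-based classifier would instead score the test features under each speaker model and choose the maximum log-likelihood.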

Data availability

All relevant data are within the paper and its supporting information files.

Acknowledgements

This work is our own; there are no grant or contribution numbers.

Ethics declarations

As the authors of the manuscript, we do not have a direct financial relationship with the commercial identity mentioned in our paper that might lead to a conflict of interest for any of the authors.

Competing interests

The authors have declared that no competing interest exists.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Revathi, A., Nagakrishnan, R. & Sasikaladevi, N. Robust HI and dysarthric speaker recognition – perceptual features and models. Multimed Tools Appl 81, 8215–8233 (2022). https://doi.org/10.1007/s11042-022-12184-9

DOI: https://doi.org/10.1007/s11042-022-12184-9