Abstract

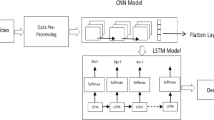

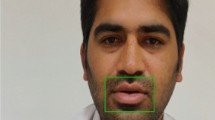

Hearing impaired persons are more expressive while speaking and expression is a salient feature in hearing impaired Visual Speech Recognition. Most Visual Speech Recognition systems focus only on the lip area for the recognition of speech or speaker. This work utilizes video data which includes information from both speech and facial expressions. As part of this study, we have developed a Malayalam audio-visual speech expression database of unimpaired people. The experiments were conducted on this newly developed Malayalam audio-visual speech database. The data has been collected from two people, 1 male, and 1 female. A combination of Convolutional Neural Network-Long Short Term Memory deep learning video processing model is applied for this system. The result demonstrate that, the classification accuracy is better for the features extracted using GoogleNet model compared to AlexNet and ResNet model. The system evaluation is carried out in both Speaker-dependent and speaker-independent domains. The recognition rate of the system for both speaker-dependent and speaker-independent experiments proves that facial expression analysis plays a crucial role in Visual Speech Recognition.

Similar content being viewed by others

References

Arunachalam R (2018) A strategic approach to recognize the speech of the children with hearing impairment: different sets of features and models. Multimed Tools Appl 78:20787–20808

Avots E, Sapiński T, Bachmann M, Kamińska D (2019) Audiovisual emotion recognition in wild. Mach Vis Appl 30(5):975–985

Bao W, Li Y, Gu M, Yang M, Li H, Chao L, Tao J (2014) Building a chinese natural emotional audio-visual database. In: 2014 12th International conference on signal processing (ICSP), pp 583–587

Busso C, Bulut M, Lee C-C, Kazemzadeh A, Mower E, Kim S, Chang JN, Lee S, Narayanan S, Narayanan SS (2008) Iemocap: interactive emotional dyadic motion capture database. Language Resources and Evaluation

Busso C, Deng Z, Yildirim S, Bulut M, Lee CM, Kazemzadeh A, Lee S, Neumann U, Narayanan S (2004) Analysis of emotion recognition using facial expressions, speech and multimodal information. In: Proceedings of the 6th international conference on multimodal interfaces, pp 205–211

Chen X, Du J, Zhang H (2020) Lipreading with densenet and resbi-lstm. SIViP 14(5):981–989

Chen J, Wang C, Wang K, Yin C, Zhao C, Xu T, Zhang X, Huang Z, Liu M, Yang T (2021) Heu emotion: a large-scale database for multimodal emotion recognition in the wild. Neural Comput and Applic, 1–17

Chung JS, Zisserman A (2016) Lip reading in the wild. In: Proc Asian Conf Comput Vis. Springer (ICASSP), Cham, pp 87–103

Dhanjal AS, Singh W (2019) Tools and techniques of assistive technology for hearing impaired people. In: 2019 International conference on machine learning, big data, cloud and parallel computing (COMITCon), pp 205–210. https://doi.org/10.1109/COMITCon.2019.8862454

Douglas-Cowie E, Campbell N, Cowie R, Roach P (2003) Emotional speech: towards a new generation of databases. Speech Commun 40(1–2):33–60

Elmadany NED, He Y, Guan L (2016) Multiview emotion recognition via multi-set locality preserving canonical correlation analysis. In: 2016 IEEE international symposium on circuits and systems (ISCAS), pp 590–593

Fabelo H (2019) In-vivo hyperspectral human brain image database for brain cancer detection. IEEE Access 7:39098–39116

Frank MG (2001) Facial expressions. In: Smelser NJ, Baltes PB (eds) International encyclopedia of the social and behavioral sciences. Pergamon, Oxford, pp 5230–5234

Goehring T, Bolner F, Monaghan JJ, Van Dijk B, Zarowski A, Bleeck S (2017) Speech enhancement based on neural networks improves speech intelligibility in noise for cochlear implant users. Hear Res 344:183–194

Goldschen AJ, Garcia ON, Petajan E (2002) Continuous optical automatic speech recognition by lipreading. In: Conf Signals, Syst Comput, pp 572–577

Hao M, Mamut M, Yadikar N, Aysa A, Ubul K (2020) A survey of research on lipreading technology. IEEE Access 8:204518–204544

Jan A (2017) Artificial intelligent system for automatic depression level analysis through visual and vocal expressions. IEEE Trans Cogn Develop Syst 10.3:668–680

Khan A, Sohail A, U Z, AS Q (2020) A survey of the recent architectures of deep convolutional neural networks. Artif Intell Rev 22(4):1–62

Kossaifi J, Walecki R, Panagakis Y, Shen J, Schmitt M, Ringeval F, Han J, Pandit V, Toisoul A, Schuller BW et al, Sewa DB (2019) A rich database for audio-visual emotion and sentiment research in the wild. IEEE Transactions on Pattern Analysis and Machine Intelligence

Kumar KB, Kumar RS, Sandesh EPA, Sourabh S, Lajish V (2015) Audio-visual speech recognition using deep learning. Appl Intell 42:722–737

Lee H, Ekanadham C, Ng AY (2008) Sparse deep belief net model for visual area v2. In: Adv Neural Inf Process Syst, pp 873–880

Martin O, Kotsia I, Macq B, Pitas I (2006) The enterface’05 audio-visual emotion database. In: 22nd International conference on data engineering workshops (ICDEW’06), pp 8–8

Martinez B, Ma P, Petridis S, Pantic M (2020) Lipreading using temporal convolutional networks. In: ICASSP 2020-2020 IEEE international conference on acoustics, speech and signal processing (ICASSP), pp 6319–6323

Ngiam J, Khosla A, Kim M, Nam J, Lee H, Ng AY (2007) Continuous automatic speech recognition by lipreading in motion-based recognition. In: Proc ACM Int Multimedia Conf Exhib, pp 57–66

Noda K, Yamaguchi Y, Nakadai K, Okuno HG, Ogata T (2014) Lipreading using convolutional neural network. In: Proc Conf Int.speech Commun Assoc, pp 1149–1153

Noroozi F, Marjanovic M, Njegus A, Escalera S, Anbarjafari G (2019) Audio-visual emotion recognition in video clips. IEEE Trans Affect Comput 10(1):60–75

Ogawa T, Sasaka Y, Maeda K, Haseyama M (2018) Favorite video classification based on multimodal bidirectional lstm. IEEE Access 6:61401–61409. https://doi.org/10.1109/ACCESS.2018.2876710

Petajan ED (1984) Automatic lipreading to enhance speech recognition. In: Proc. Global Telecommun. Conf., pp 265–272

Phutela D (2015) The importance of non-verbal communication. IUP J Soft Skills 9(4):43

Poria S (2017) A review of affective computing: from unimodal analysis to multimodal fusion. Inform Fus 37:98–125

Puviarasan N, Palanivel S (2011) Lip reading of hearing-impaired persons using hmm. Expert Syst Appl 38:4477–4481

Rahdari F, Rashedi E, Eftekhari M (2019) A multimodal emotion recognition system using facial landmark analysis. Iran J Sci Technol Trans Electr Eng 43:171–189

Roisman GI, Holland A, Fortuna K, Fraley RC, Clausell E, Clarke A (2007) The adult attachment interview and self-reports of attachment style: an empirical rapprochement. J Person Soc Psychol 92(4):678

Shah M, Jain R (1997) Continuous automatic speech recognition by lipreading in motion-based recognition. Dordrecht, pp 321–343

Shoumy JN (2020) Multimodal big data affective analytics: a comprehensive survey using text, audio, visual and physiological signals. J Netw Comput Appl 149:102447

Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, Erhan D, Vanhoucke V, Rabinovich A (2015) Going deeper with convolutions. In: 2015 IEEE conference on computer vision and pattern recognition (CVPR), pp 1–9. https://doi.org/10.1109/CVPR.2015.7298594

Ullah W, Ullah A, Haq IU, Muhammad K, Sajjad M, Baik SW (2020) Cnn features with bi-directional lstm for real-time anomaly detection in surveillance networks. Multimed Tools Appl, 1–17

Vakhshiteh F, Almasganj F (2019) Exploration of properly combined audiovisual representation with the entropy measure in audiovisual speech recognition. Circ Syst Signal Process 38:2523–2543

Vidal A, Salman A, Lin W-C, Busso C (2020) Msp-face corpus: a natural audiovisual emotional database. In: Proceedings of the 2020 international conference on multimodal interaction, pp 397–405

Wand M, Koutnik J, Schmidhuber J (2016) Lipreading with long shortterm memory. In: Proc IEEE Int Conf Acoust., Speech Signal Process (ICASSP), pp 6115–6119

Wang W (2011) Machine audition: principles, algorithms, and systems. IGI Global

Wang Y, Guan L (2008) Recognizing human emotional state from audiovisual signals. IEEE Trans Multimed 10(5):936–946

Wong SC, Stamatescu V, Gatt A, Kearney D, Lee I, McDonnell MD (2017) Track everything: limiting prior knowledge in online multi-object recognition. IEEE Trans Image Process 26:4669–4683

Yang S, Zhang Y, Feng D, Yang M, Wang C, Xiao J, Long K, Shan S, Chen X (2019) Lrw-1000: a naturally-distributed large-scale benchmark for lip reading in the wild. In: 2019 14th IEEE international conference on automatic face & gesture recognition (FG 2019), pp 1–8

Zhalehpour S, Onder O, Akhtar Z, Erdem CE (2017) Baum-1: a spontaneous audio-visual face database of affective and mental states. IEEE Trans Affect Comput 8(3):300–313

Zhang S, Pan X, Cui Y, Zhao X, Liu L (2019) Learning affective video features for facial expression recognition via hybrid deep learning. IEEE Access 7:32297–32304

Zhang S, Zhang S, Huang T, Gao W, Tian Q (2017) Learning affective features with a hybrid deep model for audio–visual emotion recognition. IEEE Trans Circuits Syst Video Technol 28(10):3030–3043

Zhao G, Pietikäinen M, Hadid A (2007) Continuous automatic speech recognition by lipreading in motion-based recognition. In: Proc ACM Int Multimedia Conf Exhib, pp 57–66

Acknowledgements

The authors express sincere thanks to Central University of Kerala for the research support. Also, We thank the Kevees Studio team for the support they have made for the database development. The authors acknowledge the participants who have participated in data collection.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Ethics approval and consent to participate

The dataset used human volunteers for the experiment. The data collection got ethical clearance from Institutional Ethics Clearance Committee Central University of Kerala (CUK/IHEC/2017-015). Informed written consent was obtained prior to any experiment or recording from all participants.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Bhaskar, S., Thasleema T. M. LSTM model for visual speech recognition through facial expressions. Multimed Tools Appl 82, 5455–5472 (2023). https://doi.org/10.1007/s11042-022-12796-1

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-022-12796-1