Abstract

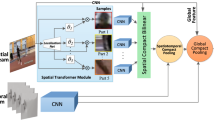

The visual speed, as important visual information on videos, has the ability to enhance the performance of video action recognition. Modeling the visual speed of different actions facilitates their recognition. Previous works have often attempted to capture the visual speed through sampling raw videos at multiple rates and then constructing an input-level frame pyramid, which usually requires to manipulate a costly multibranched network. In this work, we proposed a fast–slow visual network (FSVN) to improve the accuracy of video action recognition via a visual speed stripping strategy which can flexibly be integrated into various excellent 2-D or 3-D backbone networks. Specifically, by the method of the frame difference, we divided a video into fast visual frames and slow visual frames which can respectively represent the action information and the spatial information in the video. Then, we designed a fast visual information recognition network to capture the action information and a slow visual information recognition network to record the spatial information; finally, these two networks were integrated. The experiments on the data sets UCF101 (98.3%) and HMDB51 (76.4%) prove the superiority of our method over the traditional approaches.

Similar content being viewed by others

References

Avola D, Bernardi M, Foresti GL (2019) Fusing depth and colour information for human action recognition[J]. Multimed Tools Appl 78(5):5919–5939

Carreira J, Zisserman A 2017 Quo vadis, action recognition? a new model and the kinetics dataset. in proceedings of the IEEE Conference on Computer Vision and Pattern Recognition

Chu H et al (2008) Target tracking algorithm based on camshift algorithm combined with difference in frame. Journal of Projectiles, Rockets, Missiles and Guidance 28(3):85–88

Deng J, Dong W, Socher R et al (2009) Imagenet: A large-scale hierarchical image database[C]. 2009 IEEE conference on computer vision and pattern recognition. Ieee 2009:248–255

Dhiman C, Vishwakarma DK (2020) View-invariant deep architecture for human action recognition using two-stream motion and shape temporal dynamics[J]. IEEE Trans Image Process 29:3835–3844

Diba A, Sharma V, Van Gool L (2017) Deep temporal linear encoding networks[C]. Proceedings of the IEEE conference on Computer Vision and Pattern Recognition 2017:2329–2338

Feichtenhofer C, Pinz A, Zisserman A (2016) Convolutional two-stream network fusion for video action recognition[C]. Proceedings of the IEEE conference on computer vision and pattern recognition 2016:1933–1941

Feichtenhofer C, Fan H, Malik J et al (2019) Slowfast networks for video recognition[C]. Proceedings of the IEEE/CVF international conference on computer vision 2019:6202–6211

Ge H, Yan Z, Yu W et al (2019) An attention mechanism based convolutional LSTM network for video action recognition[J]. Multimed Tools Appl 78(14):20533–20556

He K, Zhang X, Ren S et al (2016) Deep residual learning for image recognition[C]. Proceedings of the IEEE conference on computer vision and pattern recognition 2016:770–778

Kuehne H, Jhuang H, Garrote E et al (2011) HMDB: a large video database for human motion recognition[C]. 2011 International conference on computer vision. IEEE 2011:2556–2563

Kashiwagi T, Oe S, Terada K (2000) Edge characteristic of color image and edge detection using color histogram. IEEJ Transactions on Electronics, Information and Systems 120(5):715–723

Kay W, Carreira J, Simonyan K et al (2017) The kinetics human action video dataset[J]. arXiv preprint arXiv:1705.06950

Kumar K (2019) EVS-DK: Event video skimming using deep keyframe[J]. J Vis Commun Image Represent 58:345–352

Kumar K, Shrimankar DD (2018) ESUMM: event summarization on scale-free networks[J]. IETE Technical Review

Kumar K, Shrimankar DD, Singh N (2018) V-LESS: a video from linear event summaries[C]. Proceedings of 2nd International Conference on Computer Vision & Image Processing. Springer, Singapore, pp 385–395

Kumar K, Shrimankar DD, Singh N (2019) Key-lectures: keyframes extraction in video lectures[M]//Machine intelligence and signal analysis. Springer, Singapore, pp 453–459

Lan Z, Zhu Y, Hauptmann AG et al (2017) Deep local video feature for action recognition[C]//Proceedings of the IEEE conference on computer vision and pattern recognition workshops 2017:1–7

Peng L, Lafortune EPF, Greenberg DP et al (1997) Use of computer graphic simulation to explain color histogram structure[C]. Color and Imaging Conference. Society for Imaging Science and Technology 1997(1):187–192

Pengcheng D, Siyuan C, Zhenyu Z et al (2019) Human Behavior Recognition Based on IC3D[C]. 2019 Chinese Control And Decision Conference (CCDC). IEEE 2019:3333–3337

Qiu Z, Yao T, Mei T (2017) Learning spatio-temporal representation with pseudo-3d residual networks[C]. Proceedings of the IEEE International Conference on Computer Vision 2017:5533–5541

Simonyan K, Zisserman A (2014) Two-stream convolutional networks for action recognition in videos[J]. Advances in neural information processing systems, 27

Simonyan K, Zisserman A, 2014 Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556

Solanki A, Bamrara R, Kumar K et al (2020) VEDL: a novel video event searching technique using deep learning[M]. Soft Computing: Theories and Applications. Springer, Singapore, pp 905–914

Soomro K, Zamir AR, Shah M 2012 A dataset of 101 human action classes from videos in the wild. Center for Research in Computer Vision, 2(11)

Sun L, Jia K, Yeung DY et al (2015) Human action recognition using factorized spatio-temporal convolutional networks[C]. Proceedings of the IEEE international conference on computer vision 2015:4597–4605

Szegedy C, et al. 2015 Going deeper with convolutions. in Proceedings of the IEEE conference on computer vision and pattern recognition

Tang Q, Dai S.G, Yang J 2013 Object tracking algorithm based on camshift combining background subtraction with three frame difference. In applied mechanics and materials. 2013. Trans Tech Publ

Tran D, Bourdev L, Fergus R et al (2015) Learning spatiotemporal features with 3d convolutional networks[C]. Proceedings of the IEEE international conference on computer vision 2015:4489–4497

Wang H, Schmid C (2013) Action recognition with improved trajectories[C]. Proceedings of the IEEE international conference on computer vision 2013:3551–3558

Wang L, Qiao Y, Tang X (2015) Action recognition with trajectory-pooled deep-convolutional descriptors[C]. Proceedings of the IEEE conference on computer vision and pattern recognition 2015:4305–4314

Wang L, Xiong Y, Wang Z et al (2016) Temporal segment networks: Towards good practices for deep action recognition[C]. European conference on computer vision. Springer, Cham, pp 20–36

Wu H, Liu J, Zhu X et al (2021) Multi-scale spatial-temporal integration convolutional tube for human action recognition[C]. Proceedings of the Twenty-Ninth International Conference on International Joint Conferences on Artificial Intelligence 2021:753–759

Xu Y, Chen M, Xie T (2017) Method for state recognition of egg embryo in vaccines production based on support vector machine[J]. DEStech Transactions on Engineering and Technology Research, (tmcm)

Yang C, Xu Y, Shi J et al (2020) Temporal pyramid network for action recognition[C]. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2020:591–600

Yoo GH, Park JM, You KS et al (2005) Content-Based Image Retrieval Using Adaptive Color Histogram[J]. The Journal of Korean Institute of Communications and Information Sciences 30(9C):949–954

Zhang D, Dai X, Wang YF (2018) Dynamic temporal pyramid network: a closer look at multi-scale modeling for activity detection[C]. Asian Conference on Computer Vision. Springer, Cham, pp 712–728

Zhong X, Tu K, Xia H (2017) Mean-shift algorithm fusing multi feature[C]. 2017 IEEE 2nd Advanced Information Technology, Electronic and Automation Control Conference (IAEAC). IEEE 2017: 245–1249

Acknowledgements

This research was jointly supported by (1) National Natural Science Foundation of China (U1809208); (2) the Research and Development Fund Talent Startup Project of Zhejiang A&F University (No. 2019FR070).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

We declare that we do not have any commercial or associative interest that represents a conflict of interest in connection with the work submitted.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Hu, H., Liu, T. & Feng, H. Fast–slow visual network for action recognition in videos. Multimed Tools Appl 81, 26361–26379 (2022). https://doi.org/10.1007/s11042-022-12948-3

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-022-12948-3