Abstract

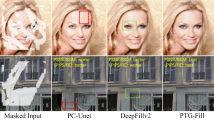

Deep learning-based methods have shown great potential for image inpainting, especially when large regions are missing. However, inpainted results often suffer from blurring, and implausible textures can be generated when the network lacks an understanding of the image's semantic content. To extract more features from the known regions, we propose a multi-level feature integration (MFI) network for image inpainting. Hole regions are completed by two generators, each of which uses the MFI network with multi-level skip connections to fill the hole. By integrating features from multiple levels, the network gains more knowledge of both global semantic structure and local fine detail. Moreover, instead of a deconvolution layer or an interpolation algorithm, we adopt a sub-pixel layer to up-sample feature maps, which produces more coherent results. We use a PatchGAN discriminator to help the refinement generator produce more discriminative detail. Experiments on the Paris StreetView, CelebA-HQ, and Places2 datasets demonstrate that our MFI network produces visually pleasing results with semantically coherent textures.
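The sub-pixel layer mentioned above (Shi et al. 2016) up-samples by a convolution that outputs C·r² channels at low resolution, followed by a parameter-free "periodic shuffle" that rearranges those channels into a C-channel map that is r times larger in each spatial dimension. The framework-free sketch below shows only that rearrangement step. It is not the authors' code: the function name `pixel_shuffle` and the nested-list layout are our own choices, and the channel ordering is assumed to match PyTorch's `nn.PixelShuffle`.

```python
from typing import List

# A feature map indexed as fmap[channel][row][col].
FeatureMap = List[List[List[float]]]


def pixel_shuffle(fmap: FeatureMap, r: int) -> FeatureMap:
    """Rearrange a (C*r*r, H, W) feature map into a (C, H*r, W*r) map.

    Assumes the channel ordering of PyTorch's nn.PixelShuffle:
        out[c][y*r + i][x*r + j] = fmap[c*r*r + i*r + j][y][x]
    """
    c_in = len(fmap)
    if r < 1 or c_in % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    c_out = c_in // (r * r)
    h, w = len(fmap[0]), len(fmap[0][0])
    out = [[[0.0] * (w * r) for _ in range(h * r)] for _ in range(c_out)]
    for c in range(c_out):
        for i in range(r):          # row offset inside each r x r output block
            for j in range(r):      # column offset inside each r x r output block
                src = fmap[c * r * r + i * r + j]
                for y in range(h):
                    for x in range(w):
                        out[c][y * r + i][x * r + j] = src[y][x]
    return out


if __name__ == "__main__":
    # Four 1x1 channels become one 2x2 channel when r = 2.
    print(pixel_shuffle([[[1.0]], [[2.0]], [[3.0]], [[4.0]]], r=2))
    # prints [[[1.0, 2.0], [3.0, 4.0]]]
```

Because the shuffle itself has no weights, all learning happens in the preceding convolution, which operates entirely at the lower resolution; every output pixel is produced by a learned filter rather than by zero-insertion or fixed interpolation.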

References

Ballester C, Bertalmio M, Caselles V, Sapiro G, Verdera J (2001) Filling-in by joint interpolation of vector fields and gray levels. IEEE Trans Image Process 10(8):1200–1211

Barnes C, Shechtman E, Finkelstein A, Goldman DB (2009) PatchMatch: A randomized correspondence algorithm for structural image editing. ACM Trans Graph (Proc. SIGGRAPH) 28(3)

Chollet F (2017) Xception: Deep learning with depthwise separable convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1251–1258

Criminisi A, Pérez P, Toyama K (2004) Region filling and object removal by exemplar-based image inpainting. IEEE Trans Image Process 13(9):1200–1212

Ding D, Ram S, Rodríguez JJ (2018) Image inpainting using nonlocal texture matching and nonlinear filtering. IEEE Trans Image Process 28(4):1705–1719

Drozdzal M, Vorontsov E, Chartrand G, Kadoury S, Pal C (2016) The importance of skip connections in biomedical image segmentation. In: Deep learning and data labeling for medical applications. Springer, pp 179–187

Fan Q, Zhang L (2018) A novel patch matching algorithm for exemplar-based image inpainting. Multimed Tools Appl 77(9):10807–10821

Guillemot C, Le Meur O (2014) Image inpainting: Overview and recent advances. IEEE Signal Process Mag 31(1):127–144

Guo Q, Gao S, Zhang X, Yin Y, Zhang C (2017) Patch-based image inpainting via two-stage low rank approximation. IEEE Trans Vis Comput Graph 24(6):2023–2036

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)

Heusel M, Ramsauer H, Unterthiner T, Nessler B, Hochreiter S (2017) GANs trained by a two time-scale update rule converge to a local Nash equilibrium. In: Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems, pp 6626–6637

Iizuka S, Simo-Serra E, Ishikawa H (2017) Globally and locally consistent image completion. ACM Trans Graph (Proc. of SIGGRAPH 2017) 36(4):107:1–107:14

Ioffe S, Szegedy C (2015) Batch normalization: Accelerating deep network training by reducing internal covariate shift. In: International conference on machine learning. PMLR, pp 448–456

Isola P, Zhu J-Y, Zhou T, Efros AA (2017) Image-to-image translation with conditional adversarial networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1125–1134

Li F, Zeng T (2014) A universal variational framework for sparsity-based image inpainting. IEEE Trans Image Process 23(10):4242–4254

Liu G, Reda FA, Shih KJ, Wang T-C, Tao A, Catanzaro B (2018) Image inpainting for irregular holes using partial convolutions. In: The European Conference on Computer Vision (ECCV)

Liu H, Jiang B, Xiao Y, Yang C (2019) Coherent semantic attention for image inpainting. In: IEEE International Conference on Computer Vision (ICCV)

Liu J, Yang S, Fang Y, Guo Z (2018) Structure-guided image inpainting using homography transformation. IEEE Trans Multimed PP(99):1–1

Liu J, Jung C (2019) Facial image inpainting using multi-level generative network. In: 2019 IEEE International Conference on Multimedia and Expo (ICME). IEEE, pp 1168–1173

Liu X, Chen S, Song L, Woźniak M, Liu S (2021) Self-attention negative feedback network for real-time image super-resolution. Journal of King Saud University-Computer and Information Sciences

Liu Z, Luo P, Wang X, Tang X (2015) Deep learning face attributes in the wild. In: Proceedings of International Conference on Computer Vision (ICCV)

Lu H, Liu Q, Zhang M, Wang Y, Deng X (2018) Gradient-based low rank method and its application in image inpainting. Multimed Tools Appl 77(5):5969–5993

Mao X, Li Q, Xie H, Lau RYK, Wang Z, Paul Smolley S (2017) Least squares generative adversarial networks. In: Proceedings of the IEEE international conference on computer vision, pp 2794–2802

Nazeri K, Ng E, Joseph T, Qureshi FZ, Ebrahimi M (2019) EdgeConnect: Generative image inpainting with adversarial edge learning. arXiv:1901.00212

Pathak D, Krähenbühl P, Donahue J, Darrell T, Efros A (2016) Context encoders: Feature learning by inpainting. In: Computer Vision and Pattern Recognition (CVPR)

Ren Y, Yu X, Zhang R, Li TH, Liu S, Li G (2019) StructureFlow: Image inpainting via structure-aware appearance flow. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp 181–190

Ronneberger O, Fischer P, Brox T (2015) U-Net: Convolutional networks for biomedical image segmentation. In: International Conference on Medical Image Computing and Computer-Assisted Intervention

Shen L, Hong R, Zhang H, Zhang H, Wang M (2019) Single-shot semantic image inpainting with densely connected generative networks. In: Proceedings of the 27th ACM International Conference on Multimedia, pp 1861–1869

Shi W, Caballero J, Huszár F, Totz J, Aitken AP, Bishop R, Rueckert D, Wang Z (2016) Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1874–1883

Song Y, Yang C, Shen Y, Wang P, Huang Q, Kuo C-CJ (2018) SPG-Net: Segmentation prediction and guidance network for image inpainting. In: British Machine Vision Conference 2018, BMVC 2018, Newcastle, UK, September 3-6, 2018. BMVA Press, p 97

Tschumperlé D (2006) Fast anisotropic smoothing of multi-valued images using curvature-preserving PDE’s. Int J Comput Vis 68(1):65–82

Wan Z, Zhang J, Chen D, Liao J (2021) High-fidelity pluralistic image completion with transformers. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), pp 4692–4701

Wang N, Ma S, Li J, Zhang Y, Zhang L (2020) Multistage attention network for image inpainting. Pattern Recogn 106:107448

Wang N, Zhang Y, Zhang L (2021) Dynamic selection network for image inpainting. IEEE Trans Image Process 30:1784–1798

Wang Z, Simoncelli EP, Bovik AC (2003) Multiscale structural similarity for image quality assessment. In: The Thirty-Seventh Asilomar Conference on Signals, Systems & Computers, 2003, vol 2. IEEE, pp 1398–1402

Xiao Z, Li D (2021) Generative image inpainting by hybrid contextual attention network. In: International Conference on Multimedia Modeling. Springer, pp 162–173

Xie C, Liu S, Li C, Cheng M-M, Zuo W, Liu X, Wen S, Ding E (2019) Image inpainting with learnable bidirectional attention maps. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp 8858–8867

Yaghmaee F, Peyvandi K (2020) Improving image inpainting quality by a new svd-based decomposition. Multimed Tools Appl 79(19):13795–13809

Yan Z, Li X, Li M, Zuo W, Shan S (2018) Shift-Net: Image inpainting via deep feature rearrangement. In: Proceedings of the European conference on computer vision (ECCV), pp 1–17

Yang C, Lu X, Lin Z, Shechtman E, Wang O, Li H (2017) High-resolution image inpainting using multi-scale neural patch synthesis. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 6721–6729

Yu J, Lin Z, Yang J, Shen X, Lu X, Huang T S (2018) Generative image inpainting with contextual attention. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 5505–5514

Yu J, Lin Z, Yang J, Shen X, Lu X, Huang T S (2019) Free-form image inpainting with gated convolution. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp 4471–4480

Yu T, Guo Z, Jin X, Wu S, Chen Z, Li W, Zhang Z, Liu S (2020) Region normalization for image inpainting. In: Proceedings of the AAAI Conference on Artificial Intelligence, vol 34, pp 12733–12740

Zamir AR, Shah M (2014) Image geo-localization based on multiple nearest neighbor feature matching using generalized graphs. IEEE Trans Pattern Anal Mach Intell 36(8):1546–1558

Zeng Y, Fu J, Chao H, Guo B (2019) Learning pyramid-context encoder network for high-quality image inpainting. In: The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp 1486–1494

Zhang J, Zhao D, Gao W (2014) Group-based sparse representation for image restoration. IEEE Trans Image Process 23(8):3336–3351

Zhang L, Chang M (2021) An image inpainting method for object removal based on difference degree constraint. Multimed Tools Appl 80:1–20. https://doi.org/10.1007/s11042-020-09835-0

Zhang X, Hamann B, Pan X, Zhang C (2017) Superpixel-based image inpainting with simple user guidance. In: 2017 IEEE International Conference on Image Processing (ICIP). IEEE, pp 3785–3789

Zhou B, Lapedriza A, Khosla A, Oliva A, Torralba A (2017) Places: A 10 million image database for scene recognition. IEEE Trans Pattern Anal Mach Intell 40(6):1452–1464

Zhu M, He D, Li X, Li C, Li F, Liu X, Ding E, Zhang Z (2021) Image inpainting by end-to-end cascaded refinement with mask awareness. IEEE Trans Image Process 30:4855–4866

Ethics declarations

Conflict of Interests

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This research was supported by the Zhejiang Provincial Natural Science Foundation of China under Grant Nos. LQ21F020015 and LQ20F020015.

Appendix:

The detailed architecture and parameter values of our approach are provided in Tables 5 to 8. The following abbreviations are used in the tables: Size_in is the spatial size of the input in one dimension; Size_out is the spatial size of the output in one dimension; C_in is the number of input channels; C_out is the number of output channels; Act is the non-linear activation function; Norm is the normalization method; Conv denotes a convolution layer; K is the kernel size; S is the stride; and P is the padding.
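For an ordinary convolution row, the K, S and P columns determine Size_out from Size_in through the standard convolution arithmetic, Size_out = ⌊(Size_in + 2P − K)/S⌋ + 1 (assuming no dilation). The sketch below uses that rule to check that consecutive table rows chain correctly. The helper names `ConvRow`, `conv_size_out` and `trace_shapes` are hypothetical, and the layer values in the example are made up for illustration; they are not taken from Tables 5 to 8.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ConvRow:
    """One convolution row of an architecture table (hypothetical values)."""
    k: int       # kernel size K
    s: int       # stride S
    p: int       # padding P
    c_out: int   # output channels C_out


def conv_size_out(size_in: int, k: int, s: int, p: int) -> int:
    """Size_out of a standard (non-transposed, non-dilated) convolution in one dimension."""
    return (size_in + 2 * p - k) // s + 1


def trace_shapes(size_in: int, c_in: int,
                 rows: List[ConvRow]) -> List[Tuple[int, int, int, int]]:
    """Return (Size_in, Size_out, C_in, C_out) per row, feeding each output into the next row."""
    shapes = []
    for row in rows:
        size_out = conv_size_out(size_in, row.k, row.s, row.p)
        shapes.append((size_in, size_out, c_in, row.c_out))
        size_in, c_in = size_out, row.c_out
    return shapes


if __name__ == "__main__":
    # Hypothetical encoder: a 256x256 input with 4 channels (assumed RGB + mask).
    rows = [ConvRow(5, 1, 2, 32), ConvRow(3, 2, 1, 64), ConvRow(3, 2, 1, 128)]
    for shape in trace_shapes(256, 4, rows):
        print(shape)
    # prints (256, 256, 4, 32), then (256, 128, 32, 64), then (128, 64, 64, 128)
```

Rows describing the sub-pixel up-sampling step would follow a different rule: a shuffle with factor r multiplies the spatial size by r and divides the channel count by r².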

About this article

Cite this article

Chen, T., Zhang, X., Hamann, B. et al. A multi-level feature integration network for image inpainting. Multimed Tools Appl 81, 38781–38802 (2022). https://doi.org/10.1007/s11042-022-13028-2

DOI: https://doi.org/10.1007/s11042-022-13028-2