Abstract

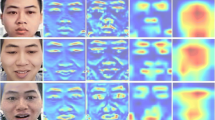

Most facial expression recognition (FER) algorithms rely on shallow features, while deep networks tend to lose key expression features from regions such as the eyes, nose, and mouth. To address these limitations, we present a novel approach named CBAM-Global-Efficient Channel Attention-ResNet (C-G-ECA-R), which combines a strong attention mechanism with a residual network. The strong attention mechanism enhances the extraction of important expression features by embedding channel and spatial attention before and after the residual module. Adding Global-Efficient Channel Attention (G-ECA) to the residual module strengthens the extraction of key features and reduces the loss of facial information. Extensive experiments were conducted on two publicly available datasets, Extended Cohn-Kanade and Japanese Female Facial Expression. The results show that the proposed C-G-ECA-R, especially when built on ResNet34, achieves 98.98% and 97.65% accuracy on the two datasets, respectively, exceeding state-of-the-art methods.
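The abstract does not specify how G-ECA is built, so the following is only a minimal sketch of plain efficient channel attention in the style of ECA-Net, on which the module's name suggests it is based. It is not the authors' G-ECA or their C-G-ECA-R network. The function names, the nested-list tensor layout (channels x height x width), and the caller-supplied convolution weights are assumptions made for illustration; in a trained network the weights would be learned.

```python
import math


def eca_kernel_size(channels, gamma=2, b=1):
    """Odd 1D kernel size chosen from the channel count (ECA-Net heuristic)."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 == 1 else t + 1


def eca_attention(feature_map, weights):
    """Apply ECA-style channel attention to a C x H x W nested list.

    `weights` holds the k shared 1D-convolution weights (k must be odd).
    They are passed in by the caller here; this is an illustrative
    assumption, since a real model learns them during training.
    Returns the rescaled feature map and the per-channel gates.
    """
    k = len(weights)
    if k % 2 == 0:
        raise ValueError("kernel size must be odd")
    num_channels = len(feature_map)

    # 1) Global average pooling: one scalar descriptor per channel.
    pooled = [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_map
    ]

    # 2) 1D convolution across neighbouring channels with zero padding,
    #    so each channel interacts only with its k nearest neighbours.
    pad = k // 2
    padded = [0.0] * pad + pooled + [0.0] * pad
    logits = [
        sum(weights[j] * padded[i + j] for j in range(k))
        for i in range(num_channels)
    ]

    # 3) Sigmoid turns each logit into a gate in (0, 1).
    gates = [1.0 / (1.0 + math.exp(-z)) for z in logits]

    # 4) Rescale every value of a channel by that channel's gate.
    scaled = [
        [[value * gate for value in row] for row in channel]
        for channel, gate in zip(feature_map, gates)
    ]
    return scaled, gates
```

Calling `eca_attention(x, weights)` with `len(weights) == eca_kernel_size(len(x))` mirrors the usual pairing of kernel size and channel count. The plain-Python lists keep the sketch dependency-free; a practical model would express the same four steps with a tensor library.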

Data Availability

All data can be obtained by contacting the first author.

Funding

This work is supported by the National Natural Science Foundation of China (grant No. 62177037) and the Education Department of Shaanxi Provincial Government Service Local Special Scientific Research Plan Project (grant No. 22JC037).

Ethics declarations

Conflict of Interests

The authors declare no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Qian, Z., Mu, J., Tian, F. et al. Facial expression recognition based on strong attention mechanism and residual network. Multimed Tools Appl 82, 14287–14306 (2023). https://doi.org/10.1007/s11042-022-13799-8

DOI: https://doi.org/10.1007/s11042-022-13799-8