Abstract

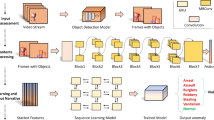

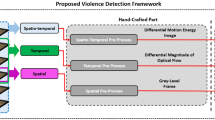

Numerous violent actions occur in the world every day, affecting victims mentally and physically. Reducing violence in society may require an automatic system that analyzes human activities quickly and detects violent actions accurately. Violence detection is a complex machine vision problem: violent datasets are scarce, and activities and environments vary widely. In this paper, an unsupervised framework is presented that discriminates between normal and violent actions while overcoming these challenges. This is accomplished by analyzing the latent space of a double-stream convolutional AutoEncoder (AE). In the proposed framework, the input samples are processed to extract discriminative spatial and temporal information. A human detection approach is applied in the spatial stream to remove the background environment and other noisy information from video segments. Since motion patterns in violent actions are entirely different from those in normal actions, movement information is processed with a novel Jerk feature in the temporal stream. This feature describes long-term motion acceleration and is computed from 7 consecutive frames. Moreover, the classification stage is carried out with a one-class classifier that uses the latent space of the AEs to identify outliers as violent samples. Extensive experiments on the Hockey and Movies datasets showed that the proposed framework surpassed previous works in terms of accuracy and generality.
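The two ideas summarized above, a Jerk-style temporal feature over 7 frames and one-class outlier detection in a latent space, can be illustrated with a minimal sketch. It is not the authors' implementation. The abstract does not give the Jerk formula, the motion estimator, or the classifier, so the sketch assumes that raw pixel intensity stands in for position, that jerk is approximated by a third-order temporal difference, and that a nearest-neighbour distance with a quantile threshold serves as the one-class rule. All function names (`jerk_maps`, `fit_threshold`, `is_violent`) are hypothetical, and random vectors stand in for the AE latent codes.

```python
import numpy as np


def jerk_maps(frames: np.ndarray) -> np.ndarray:
    """Third-order temporal difference over a frame stack of shape (T, H, W).

    Assumption: intensity stands in for position, so successive differences
    approximate velocity, acceleration, and jerk. The paper's own Jerk
    definition is not stated in the abstract.
    """
    if frames.shape[0] < 4:
        raise ValueError("need at least 4 frames for a third-order difference")
    return np.diff(frames.astype(np.float64), n=3, axis=0)  # shape (T - 3, H, W)


def nn_distance(queries: np.ndarray, normal_latents: np.ndarray) -> np.ndarray:
    """Euclidean distance from each query to its nearest normal latent vector."""
    diffs = queries[:, None, :] - normal_latents[None, :, :]
    return np.linalg.norm(diffs, axis=-1).min(axis=1)


def fit_threshold(normal_latents: np.ndarray, quantile: float = 0.95) -> float:
    """Choose a threshold from leave-one-out distances among normal samples."""
    diffs = normal_latents[:, None, :] - normal_latents[None, :, :]
    dists = np.linalg.norm(diffs, axis=-1)
    np.fill_diagonal(dists, np.inf)  # ignore each sample's distance to itself
    return float(np.quantile(dists.min(axis=1), quantile))


def is_violent(queries: np.ndarray, normal_latents: np.ndarray,
               threshold: float) -> np.ndarray:
    """Flag queries whose nearest-normal distance exceeds the threshold."""
    return nn_distance(queries, normal_latents) > threshold


# Illustrative usage with synthetic data (no real video or trained AE).
rng = np.random.default_rng(0)
clip = rng.random((7, 8, 8))                     # 7 consecutive grayscale frames
jerk = jerk_maps(clip)                           # 4 jerk maps of size 8 x 8
normal = rng.normal(0.0, 0.1, size=(200, 16))    # stand-in for normal latents
threshold = fit_threshold(normal)
queries = np.vstack([normal[:1], np.full((1, 16), 5.0)])
flags = is_violent(queries, normal, threshold)   # one near sample, one far sample
```

Training the threshold only on normal samples mirrors the unsupervised setting described in the abstract: no violent examples are needed, and anything far from the normal latent codes is treated as an outlier.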

Data availability

Data available on request from the authors.


Ethics declarations

Conflict of interest

The authors certified that they have no conflicts of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ehsan, T.Z., Nahvi, M. & Mohtavipour, S.M. Learning deep latent space for unsupervised violence detection. Multimed Tools Appl 82, 12493–12512 (2023). https://doi.org/10.1007/s11042-022-13827-7

DOI: https://doi.org/10.1007/s11042-022-13827-7