Abstract

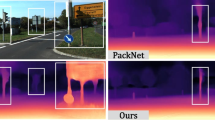

Raw depth images captured by depth sensors usually contain large regions of missing depth values, and these incomplete depth maps hinder many downstream vision tasks. The original photometric (luminosity) loss tends to produce incorrect depth estimates in regions with complex texture and on distant moving objects. To overcome this, this paper proposes a self-supervised monocular depth estimation algorithm based on a multi-scale structural similarity loss: multi-scale structural similarity (MS-SSIM) is used when computing the loss, which enhances the ability of the depth prediction network to perceive pixel edges. In addition, an attention mechanism is added to the encoder of the depth prediction network. By re-weighting the feature maps, the network suppresses features that contribute little and strengthens those that help the prediction. Finally, experiments are conducted on the KITTI and Cityscapes datasets, and the results are compared with and analyzed against state-of-the-art algorithms. The experimental results show that the proposed algorithm achieves significant improvements in accuracy, especially on KITTI, where the precision reaches 88.4%. Moreover, in addition to this accuracy, the visual quality of the estimated depth maps is also markedly improved, especially in Cityscapes scenes where multiple people overlap.
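The two components described in the abstract can be illustrated with a small NumPy sketch. It is not the authors' implementation, and the following details are assumptions rather than facts from the paper: MS-SSIM follows Wang, Simoncelli and Bovik (2003) with their five scale weights, but uses a 3x3 mean filter instead of a Gaussian window and clips negative terms to zero; the photometric loss mixes (1 - MS-SSIM)/2 with an L1 term using alpha = 0.85, the weighting Monodepth2 uses with single-scale SSIM; and the encoder attention is shown as squeeze-and-excitation style channel gating, since the abstract does not specify the attention design. All function names are hypothetical.

```python
import numpy as np

# Five-scale weights from Wang, Simoncelli & Bovik (2003).
MS_WEIGHTS = np.array([0.0448, 0.2856, 0.3001, 0.2363, 0.1333])
C1, C2 = 0.01 ** 2, 0.03 ** 2  # stabilising constants for intensities in [0, 1]


def _mean3x3(x):
    """3x3 mean filter with edge padding; output has the same shape as x."""
    p = np.pad(x, 1, mode="edge")
    h, w = x.shape
    return sum(p[i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0


def _ssim_terms(x, y):
    """Mean luminance term and mean contrast-structure term at one scale."""
    mu_x, mu_y = _mean3x3(x), _mean3x3(y)
    var_x = _mean3x3(x * x) - mu_x ** 2
    var_y = _mean3x3(y * y) - mu_y ** 2
    cov_xy = _mean3x3(x * y) - mu_x * mu_y
    lum = (2 * mu_x * mu_y + C1) / (mu_x ** 2 + mu_y ** 2 + C1)
    cs = (2 * cov_xy + C2) / (var_x + var_y + C2)
    return lum.mean(), cs.mean()


def _downsample(x):
    """2x2 average pooling; an odd trailing row/column is cropped."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    x = x[:h, :w]
    return 0.25 * (x[0::2, 0::2] + x[1::2, 0::2] + x[0::2, 1::2] + x[1::2, 1::2])


def ms_ssim(x, y, weights=MS_WEIGHTS):
    """Scalar MS-SSIM of two grayscale images with values in [0, 1]."""
    cs_terms = []
    for j in range(len(weights)):
        lum, cs = _ssim_terms(x, y)
        cs_terms.append(max(cs, 0.0))  # clip so fractional powers stay real
        if j < len(weights) - 1:
            x, y = _downsample(x), _downsample(y)
    # Luminance enters only at the coarsest scale; contrast-structure at every scale.
    cs_prod = np.prod([c ** w for c, w in zip(cs_terms, weights)])
    return max(lum, 0.0) ** weights[-1] * cs_prod


def photometric_loss(target, reconstructed, alpha=0.85):
    """Assumed mix: alpha * (1 - MS-SSIM) / 2 + (1 - alpha) * mean L1."""
    l1 = np.abs(target - reconstructed).mean()
    return alpha * (1.0 - ms_ssim(target, reconstructed)) / 2.0 + (1.0 - alpha) * l1


def channel_attention(feat, w1, w2):
    """Squeeze-and-excitation style gating of a (C, H, W) feature map.

    w1 has shape (C // r, C) and w2 has shape (C, C // r) for a reduction ratio r.
    """
    squeeze = feat.mean(axis=(1, 2))              # (C,) global average pooling
    hidden = np.maximum(w1 @ squeeze, 0.0)        # (C // r,) ReLU
    gate = 1.0 / (1.0 + np.exp(-(w2 @ hidden)))   # (C,) sigmoid weights in (0, 1)
    return feat * gate[:, None, None]             # re-weight each channel
```

For identical images `ms_ssim` returns 1 and `photometric_loss` returns 0, while any photometric mismatch lowers MS-SSIM and raises the loss. Because luminance is compared only at the coarsest scale, the finer scales contribute mainly through local contrast and structure, which is what makes the term sensitive to edges. A training implementation would instead compute the loss per pixel on warped views inside an autodiff framework and learn `w1` and `w2`; this NumPy version does not attempt that.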

Data availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grant No. 51774281.

Ethics declarations

Competing interests

The authors have no competing interests to declare that are relevant to the content of this article.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Han, C., Cheng, D., Kou, Q. et al. Self-supervised monocular Depth estimation with multi-scale structure similarity loss. Multimed Tools Appl 82, 38035–38050 (2023). https://doi.org/10.1007/s11042-022-14012-6

DOI: https://doi.org/10.1007/s11042-022-14012-6