Abstract

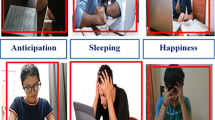

This paper aims to improve real-time lecture delivery, both in the classroom and in remote sessions held over web-based applications. Traditionally, a lecturer gauges students’ attention levels from experience. To date, no system automatically tracks students’ attention levels in real time, whether in a physical class or while the lecturer is delivering a lecture remotely over a web-based application. Our proposed system, in contrast, periodically monitors the learning behaviour of the whole class and tracks the attentiveness of each student. It is not meant to identify non-attentive students and punish them; rather than a punishment-based mechanism, it introduces a counseling-based one. This deep learning-based real-time face monitoring system allows lecturers to improve their delivery, either by diversifying the class content or by giving personal care to non-attentive students. Deep learning models in an ensemble configuration are used to predict the likelihood of eye openness. Separately, a student’s facial expressions are recognized using our Convolutional Neural Network (CNN) model. The net learning behaviour of a student is then computed as a weighted average of these two features (eye openness and facial expressions). The student learning behaviour is validated with two measures, the Pearson and Spearman correlation coefficients, computed between eye openness and facial expressions. In addition, cosine similarity is used to examine the periodic similarity of a student’s learning patterns. The proposed pipeline outperforms state-of-the-art models such as ResNet50, MobileNetV2, and EfficientNet-B0 in terms of accuracy and F1-score.
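The scoring and validation steps named in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the weight `w_eye`, and the sample values are assumptions chosen for illustration, and both features are assumed to be already scaled to [0, 1].

```python
import math

def weighted_attention(eye_openness, expression_score, w_eye=0.5):
    """Weighted average of two features; w_eye is a hypothetical weight."""
    return w_eye * eye_openness + (1.0 - w_eye) * expression_score

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def ranks(values):
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = mean_rank
        i = j + 1
    return result

def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))

def cosine_similarity(u, v):
    """Cosine of the angle between two score vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Made-up per-frame feature values for one student (illustration only).
eye = [0.9, 0.8, 0.4, 0.2, 0.7]
expr = [0.8, 0.7, 0.5, 0.1, 0.6]

scores = [weighted_attention(e, x, w_eye=0.6) for e, x in zip(eye, expr)]
print("attention scores:", scores)
print("Pearson:", pearson(eye, expr))
print("Spearman:", spearman(eye, expr))
# Compare two consecutive observation windows of the score sequence.
print("cosine:", cosine_similarity(scores[:2], scores[2:4]))
```

Here the correlations are computed between the two feature sequences, and the cosine similarity compares score vectors from two observation windows; how the paper actually defines its windows and weights is not stated in the abstract.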

Data Availability

The datasets generated and/or analysed during the current study are available at the following URLs:

1. CEW dataset: http://parnec.nuaa.edu.cn/_upload/tpl/02/db/731/template731/pages/xtan/ClosedEyeDatabases.html
2. MRL dataset: http://mrl.cs.vsb.cz/eyedataset
3. Extended Cohn-Kanade Dataset (CK+) and Perceived Attention GitHub: https://github.com/cserajdeep/Perceived-Attention

Notes

Perceived Attention GitHub: https://github.com/cserajdeep/Perceived-Attention

Funding

Nil.

Ethics declarations

Ethics approval and consent to participate

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards.

Consent for Publication

Informed consent was obtained from all individual participants included in the study.

Conflict of Interests

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rajdeep Chatterjee is also affiliated with Amygdala AI, a volunteer-driven global research community www.amygdalaai.org

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chatterjee, R., Halder, R., Maitra, T. et al. A computer vision-based perceived attention monitoring technique for smart teaching. Multimed Tools Appl 82, 11523–11547 (2023). https://doi.org/10.1007/s11042-022-14283-z

DOI: https://doi.org/10.1007/s11042-022-14283-z