Abstract

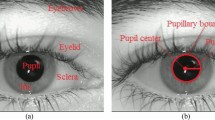

Pupil centre localization is an essential aspect of ergonomics; it may be used in emotion analysis and attention evaluation. Numerous studies have shown the transformer to be beneficial in computer vision, so applying it to pupil localization is expected to improve detection performance. Furthermore, labeling data is time-consuming; generating data from image features reduces the labeling effort while preserving the manually obtained image features. First, we propose a fake dataset generation algorithm based on affine image features such as pupil and eyelid occlusion. Second, ViT and DETR models are trained on the fake and real pupil datasets, respectively, and their recognition rates are analyzed. Finally, drawing on FNet and DETR, we design fDETR, train it on the fake and real pupil datasets, and analyze its recognition rate. The transformer performed effectively for pupil centre localization (65% on average). There was little discrepancy in accuracy between the fake dataset and the real pupil dataset (about 3%). The final findings demonstrate that this approach of recognizing pupils using a fake pupil dataset is successful. The model can still produce decent results even if the pupil is occluded.
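The idea of generating labeled pupil images instead of annotating them by hand can be illustrated with a minimal sketch. This is not the authors' algorithm: the function name `make_fake_pupil`, the grey levels, and every parameter range below are assumptions chosen only for illustration. The sketch draws a dark elliptical pupil on a uniform background, covers the upper part of the image with a bright band standing in for the eyelid, and returns the true pupil centre as the label even when part of the pupil is hidden.

```python
import random


def make_fake_pupil(width=64, height=48, seed=None):
    """Return (image, centre, occluded_fraction) for one synthetic eye image.

    image is a list of rows of grey levels; centre is the (x, y) label.
    All numeric ranges are hypothetical, not taken from the paper.
    """
    rng = random.Random(seed)

    # Random pupil centre, kept away from the image border.
    cx = rng.uniform(0.3 * width, 0.7 * width)
    cy = rng.uniform(0.3 * height, 0.7 * height)

    # Ellipse semi-axes; ry <= rx mimics an off-axis view of a round pupil.
    rx = rng.uniform(0.08 * width, 0.15 * width)
    ry = rx * rng.uniform(0.6, 1.0)

    # Rows above lid_y are covered by the eyelid band.
    # A value above the pupil top (cy - ry) means no occlusion at all.
    lid_y = cy - ry * rng.uniform(-0.5, 1.5)

    image, pupil_px, hidden_px = [], 0, 0
    for y in range(height):
        row = []
        for x in range(width):
            inside = ((x - cx) / rx) ** 2 + ((y - cy) / ry) ** 2 <= 1.0
            if inside:
                pupil_px += 1
            if y < lid_y:
                row.append(200)          # eyelid (bright)
                if inside:
                    hidden_px += 1       # pupil pixel hidden by the eyelid
            elif inside:
                row.append(20)           # visible pupil (dark)
            else:
                row.append(120)          # iris/sclera background
        image.append(row)

    return image, (cx, cy), hidden_px / max(pupil_px, 1)


if __name__ == "__main__":
    img, centre, occluded = make_fake_pupil(seed=0)
    print("centre label:", centre, "occluded fraction:", occluded)
```

Because the label comes from the generator rather than from the rendered pixels, the centre stays exact however much of the pupil the eyelid band covers, which is the property that makes synthetic data attractive for training under occlusion.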

Data Availability

The program can be downloaded from https://github/xuepx/fakepupil. All other data generated or analyzed during this study are included in this published article.

Abbreviations

- AGI: Artificial General Intelligence.
- AI: Artificial Intelligence.
- ANI: Artificial Narrow Intelligence.
- ASI: Artificial Super Intelligence.
- CNNs: Convolutional Neural Networks.
- DETR: Detection Transformer.
- DIoU: Distance-Intersection over Union.
- EPS: Edge Points Selection.
- fDETR: FNet Detection Transformer.
- GAN: Generative Adversarial Networks.
- IoU: Intersection over Union.
- LPW: Labeled Pupils in the Wild.
- MLP: Multi-Layer Perceptron.
- MSA: Multi-headed Self Attention.
- MSE: Mean Squared Error.
- NLP: Natural Language Processing.
- SET: Sinusoidal Eye-Tracker.
- ViT: Vision Transformer.

Funding

We are grateful for support from the National Natural Science Foundation of China project "Research on pilot training fatigue assessment based on multi-dimensional data monitoring and fusion" (#52072293), the Basic Strengthening Plan Technology Foundation (#2020-JCJQJJ-430), and the Key Research and Development Program of Shaanxi (No. 2023-ZDLGY-02).

Author information

Contributions

PX.XUE carried out the eye center localization studies, participated in ViT training and fine-tuning, and drafted the manuscript. CY.WANG conceived the study, participated in its design, and helped to draft the manuscript. WB.HUANG participated in the ViT training and fine-tuning studies. GY.JIANG performed the statistical analysis. GH.ZHOU performed the statistical analysis. Muhammad Raza performed the statistical analysis and helped write the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Consent for Publication

The authors confirm:

- that the work described has not been published before (except in the form of an abstract or as part of a published lecture, review, or thesis).

- that it is not under consideration for publication elsewhere.

- that its publication has been approved (tacitly or explicitly) by the responsible authorities at the institution where the work is carried out.

Competing interests

The authors declare no competing financial or non-financial interests.

About this article

Cite this article

Xue, P., Wang, C., Huang, W. et al. Pupil centre’s localization with transformer without real pupil. Multimed Tools Appl 82, 25467–25484 (2023). https://doi.org/10.1007/s11042-023-14403-3

DOI: https://doi.org/10.1007/s11042-023-14403-3