Abstract

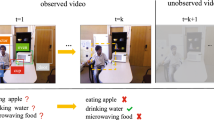

Spatio-temporal Scene Graph Generation (STSGG) aims to extract a sequence of graph-based semantic representations from video for high-level visual tasks. Existing works often fail to exploit strong temporal correlations and the details of local features, which leaves them unable to distinguish dynamic relations (e.g., drinking) from static relations (e.g., holding). Furthermore, because of severe long-tailed bias, predictions suffer from inaccurate classification of tail predicates. To address these issues, a slowfast local-aware attention (SFLA) network is proposed for temporal modeling in STSGG. First, a two-branch network extracts static and dynamic relation features separately. Second, a local relation-aware attention (LRA) module assigns higher importance to the crucial elements of each local relationship. Third, three novel self-supervised prediction tasks are proposed: spatial location, human attention state, and distance variation. These self-supervised tasks are trained jointly with the main model to alleviate the long-tailed bias problem and enhance feature discrimination. Systematic experiments show that our method achieves state-of-the-art performance on the recently proposed Action Genome (AG) dataset and the popular ImageNet Video dataset.
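To make the idea of self-supervision concrete, the following is a minimal sketch of how pseudo-labels for two of the three auxiliary tasks (spatial location and distance variation) could be derived from bounding boxes alone, without extra annotation. The abstract does not define these tasks in detail, so the label sets, the `tol` threshold, and all function names here are illustrative assumptions rather than the authors' formulation. The human attention state task is omitted because it cannot be reasonably guessed from box geometry.

```python
from math import hypot
from typing import Tuple

# Hypothetical box format: (x1, y1, x2, y2) in pixels, y growing downward.
Box = Tuple[float, float, float, float]


def center(box: Box) -> Tuple[float, float]:
    """Return the (x, y) center of a box."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def spatial_location_label(subject: Box, obj: Box) -> str:
    """Assumed 4-way label: where the object lies relative to the subject."""
    sx, sy = center(subject)
    ox, oy = center(obj)
    dx, dy = ox - sx, oy - sy
    # Pick the dominant axis of displacement between the two centers.
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "below" if dy > 0 else "above"


def distance_variation_label(subj_prev: Box, obj_prev: Box,
                             subj_next: Box, obj_next: Box,
                             tol: float = 1.0) -> str:
    """Assumed 3-way label: does the subject-object distance change between frames?"""
    def dist(a: Box, b: Box) -> float:
        (ax, ay), (bx, by) = center(a), center(b)
        return hypot(ax - bx, ay - by)

    delta = dist(subj_next, obj_next) - dist(subj_prev, obj_prev)
    if delta > tol:
        return "farther"
    if delta < -tol:
        return "closer"
    return "unchanged"


# Usage: a person and a cup that moves toward the person between two frames.
person = (0.0, 0.0, 10.0, 20.0)
cup_prev = (30.0, 5.0, 40.0, 15.0)
cup_next = (12.0, 5.0, 22.0, 15.0)
location = spatial_location_label(person, cup_prev)
variation = distance_variation_label(person, cup_prev, person, cup_next)
```

Labels of this kind come for free from detections, which is what would let such auxiliary tasks be trained alongside the main predicate classifier. A shrinking subject-object distance, for example, is a cue that could help separate a dynamic relation from a static one.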

Data Availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

Acknowledgements

This work was supported in part by the Natural Science Foundation of Chongqing (No. CSTB2022NSCQ-MSX0552), the National Natural Science Foundation of China (No. 62002121, 62072183, and 62102151), the Shanghai Science and Technology Commission (No. 21511100700, 22511104600), the National Key Research and Development Program of China (No. 2021ZD0111000), the Research Project of Shanghai Science and Technology Commission (No. 20DZ2260300), the Shanghai Sailing Program (No. 21YF1411200), the CAAI-Huawei MindSpore Open Fund (No. CAAIXSJLJJ-2021-031A), and the Open Project Program of the State Key Lab of CAD&CG (No. A2203), Zhejiang University.

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chen, L., Cai, Y., Lu, C. et al. Video-based spatio-temporal scene graph generation with efficient self-supervision tasks. Multimed Tools Appl 82, 38947–38966 (2023). https://doi.org/10.1007/s11042-023-14640-6

DOI: https://doi.org/10.1007/s11042-023-14640-6