Abstract

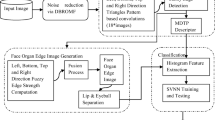

Facial expressions and human emotions are important components of many real-time applications, such as expression and emotion recognition systems. Several regions of the human face contribute to recognizing an expression, and the action units around the nose are considered important. In this paper, input images are converted into the HSV color space for a better representation. The nose region is localized, and its boundary is drawn by segmentation using Fuzzy C-means (FCM) clustering. The segmented nose is modelled using a pyramid (tetrahedron) structure, which is superimposed on the reference face. Feature points are identified on the pyramid model, where the Action Units (AUs) falling on the tetrahedron are located. These points are validated against the theoretical properties of the tetrahedron so that the constructed feature vector is robust. The degree of deformation at the various points forms the feature vector. Feature vectors are extracted for all images in the database, namely the JAFFE and CK+ datasets, and the resulting feature database is created and stored separately. The feature datasets are used for training and are n-fold cross-validated to guard against overfitting and underfitting. To estimate the expression and emotion of an input image, its feature vector is compared with the feature vectors of the deformed images stored in the database. Support Vector Machine (SVM), Multilayer Perceptron (MLP), and Random Forest classifiers are used to classify the expression and derive the emotion. Experiments on the JAFFE and CK+ datasets show that the nose feature based on the pyramid/tetrahedron structure gives good results, with classification accuracy above 95% in most cases. Compared with several well-known approaches, the proposed tetrahedron model performs well, with classification accuracy above 95%.
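The abstract relies on two standard preprocessing steps: conversion to the HSV color space and Fuzzy C-means (FCM) clustering. The sketch below illustrates only those two steps, in pure Python, on a handful of hypothetical pixel values; it is not the authors' implementation. The helper names (`rgb_pixels_to_hsv`, `fuzzy_c_means`, `hard_labels`), the fuzzifier m = 2, the random initialization and the stopping tolerance are all assumptions. Plain Euclidean distance in HSV also ignores the fact that hue is circular.

```python
import colorsys
import math
import random

# Hypothetical helpers; parameter defaults are assumptions, not values from the paper.


def rgb_pixels_to_hsv(pixels):
    """Convert 8-bit (R, G, B) tuples to (H, S, V) tuples, each in [0, 1]."""
    return [colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0) for r, g, b in pixels]


def fuzzy_c_means(points, c=2, m=2.0, max_iter=200, tol=1e-6, seed=0):
    """Standard Fuzzy C-means: alternate center and membership updates.

    Returns (centers, u), where u[k][i] is the membership of point k in
    cluster i; every row of u sums to 1.
    """
    rng = random.Random(seed)
    n, dim = len(points), len(points[0])

    # Random initial memberships, normalized so each row sums to 1.
    u = []
    for _ in range(n):
        row = [rng.random() for _ in range(c)]
        total = sum(row)
        u.append([x / total for x in row])

    centers = []
    for _ in range(max_iter):
        # Center update: mean of the points weighted by u^m.
        centers = []
        for i in range(c):
            w = [u[k][i] ** m for k in range(n)]
            w_sum = sum(w)
            centers.append([sum(w[k] * points[k][d] for k in range(n)) / w_sum
                            for d in range(dim)])

        # Membership update: u_ki = 1 / sum_j (d_ki / d_kj)^(2 / (m - 1)).
        new_u = []
        for k in range(n):
            # Clamp distances to avoid division by zero when a point sits on a center.
            d = [max(math.dist(points[k], centers[i]), 1e-12) for i in range(c)]
            new_u.append([1.0 / sum((d[i] / d[j]) ** (2.0 / (m - 1.0)) for j in range(c))
                          for i in range(c)])

        # Stop once no membership changes by more than tol.
        shift = max(abs(new_u[k][i] - u[k][i]) for k in range(n) for i in range(c))
        u = new_u
        if shift < tol:
            break
    return centers, u


def hard_labels(u):
    """Assign each point to the cluster with the highest membership."""
    return [max(range(len(row)), key=row.__getitem__) for row in u]


if __name__ == "__main__":
    # Three bright, skin-toned pixels followed by three dark pixels (made-up values).
    pixels = [(200, 150, 130), (205, 155, 135), (210, 160, 140),
              (30, 20, 20), (35, 25, 22), (25, 18, 15)]
    centers, u = fuzzy_c_means(rgb_pixels_to_hsv(pixels), c=2)
    print(hard_labels(u))
```

In this toy run the bright pixels and the dark pixels should fall into different clusters. Segmenting a real face image would mean flattening its pixels into such a list (or using a vectorized library), and the nose-localization and tetrahedron-modelling stages described in the abstract are not covered by this sketch.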

Funding

The authors received no funding from any agency for the work presented in this paper.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest or competing interests related to the presented work.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Shaila, S., Vadivel, A. & Avani, S. Emotion estimation from nose feature using pyramid structure. Multimed Tools Appl 82, 42569–42591 (2023). https://doi.org/10.1007/s11042-023-14682-w

DOI: https://doi.org/10.1007/s11042-023-14682-w