Abstract

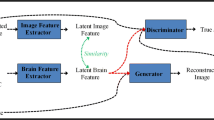

Generative Adversarial Networks (GANs) have recently proven very effective in image generation, and they are now being used to regenerate images from visually evoked brain signals. Recent neuroscience research has found evidence that brain-evoked data can be used to decipher how the human brain functions. At the same time, advances in deep learning, combined with strong interest in generative methods, have made it possible to learn a data distribution and to produce realistic images from random noise. In this work, an advanced generative adversarial method that incorporates the capsule network into the GAN model, the Capsule Generative Adversarial Network, is proposed to regenerate images from the decoded information and formulated features of visually evoked brain signals. The proposed method has two stages: an encoder stage, which formulates the data from visually evoked brain activity, and an image reconstruction stage, which regenerates images from the brain signals. The image regeneration technique has been tested experimentally on a variety of GAN variants, including the proposed model, and the final reconstructed image samples are compared using several evaluation metrics to assess their quality. The Capsule Generative Adversarial Network achieves the highest Structural Similarity Index Measure (SSIM) value, 0.9203, outperforming the other GAN variants and indicating that its reconstructed images are similar to the original images.
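To make the reported metric concrete, the following is a minimal sketch of how an SSIM score between an original and a reconstructed grayscale image could be computed. It is not the evaluation code used in this work: it assumes the standard SSIM formula with the commonly used constants K1 = 0.01 and K2 = 0.03, and it evaluates a single window over the whole image rather than averaging over local windows. The function name `global_ssim` and the synthetic 28×28 test images are hypothetical and serve only as illustration.

```python
import random


def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM for two equally sized grayscale images.

    Assumption: images are nested lists of pixel values in [0, data_range].
    Practical SSIM implementations average this quantity over local windows.
    """
    xs = [float(v) for row in x for v in row]
    ys = [float(v) for row in y for v in row]
    if not xs or len(xs) != len(ys):
        raise ValueError("images must be non-empty and have the same size")
    n = len(xs)

    # Stabilising constants from the standard SSIM definition.
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2

    # Means, variances and covariance of the two images.
    mu_x = sum(xs) / n
    mu_y = sum(ys) / n
    var_x = sum((a - mu_x) ** 2 for a in xs) / n
    var_y = sum((b - mu_y) ** 2 for b in ys) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(xs, ys)) / n

    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


# Hypothetical example: a random 28x28 "original" and a noisy "reconstruction".
rng = random.Random(0)
original = [[rng.random() for _ in range(28)] for _ in range(28)]
reconstruction = [
    [min(1.0, max(0.0, v + rng.gauss(0.0, 0.1))) for v in row]
    for row in original
]

print("SSIM(original, original):      ", global_ssim(original, original))
print("SSIM(original, reconstruction):", global_ssim(original, reconstruction))
```

An image compared with itself scores 1, and the score falls as the reconstruction departs from the original, so a value such as 0.9203 indicates close structural agreement between the two images.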

Data Availability

The datasets analyzed during the current study are available in the MNIST database (Modified National Institute of Standards and Technology database, http://yann.lecun.com/exdb/mnist/) and in the MindBigData repository (the “MNIST” of Brain Digits, http://www.mindbigdata.com/opendb/index.html).

Funding

No funding was received to assist with the preparation of this manuscript.

Ethics declarations

Competing interests

The authors have no competing interests to declare that are relevant to the content of this article.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Kumari, N., Anwar, S., Bhattacharjee, V. et al. Visually evoked brain signals guided image regeneration using GAN variants. Multimed Tools Appl 82, 32259–32279 (2023). https://doi.org/10.1007/s11042-023-14769-4

DOI: https://doi.org/10.1007/s11042-023-14769-4