Abstract

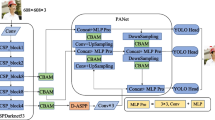

Previous studies have shown that atrous (dilated) convolution is effective for enlarging the receptive field in segmentation and detection tasks, and that attention modules are effective for feature extraction and enhancement. In this paper, we build on atrous convolution to design a plug-and-play feature enhancement module, the AFE module. We fuse features from multiple atrous convolution layers and add a detection head to cope with the large variation in object scale. This extracts multi-scale contextual feature information, while an attention mechanism enhances these features and improves the model's overall multi-scale detection performance. The AFE module can be added to an established backbone or neck network. Building on it, we design the C3 module based on atrous convolution (C3AT) to replace the C3 module in YOLOv5. We also propose the Multi-Scale Contextual Feature Enhancement Network (MCANet) as the neck network, which gives the final network structure. Experimental results indicate that the proposed method significantly improves inference speed and AP compared with the baseline model. On the VisDrone2021 test-dev set, the single model achieves 32.7% AP and 52.2% AP50, improvements of 8.1% AP and 11.4% AP50 over the baseline model. On the VOC2007 test set, the single model reaches 89.6% mAP.
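The abstract rests on two ideas. First, atrous convolution enlarges the receptive field without adding weights. Second, features from several dilation rates can be fused and reweighted by attention. The pure-Python sketch below illustrates both on a 1-D signal. It is not the authors' AFE or C3AT module. The function names (`effective_kernel`, `stacked_receptive_field`, `atrous_conv1d`, `fuse_branches`) are hypothetical. The softmax over globally pooled branch descriptors is also an illustrative assumption, standing in for the paper's attention mechanism.

```python
import math
from typing import List, Sequence, Tuple


def effective_kernel(k: int, d: int) -> int:
    """Span covered by a k-tap kernel with dilation rate d: k + (k - 1) * (d - 1)."""
    return k + (k - 1) * (d - 1)


def stacked_receptive_field(k: int, dilations: Sequence[int]) -> int:
    """Receptive field of stride-1, k-tap convolutions applied one after another."""
    rf = 1
    for d in dilations:
        rf += effective_kernel(k, d) - 1
    return rf


def atrous_conv1d(x: Sequence[float], w: Sequence[float], d: int) -> List[float]:
    """1-D dilated convolution (cross-correlation, as in deep-learning frameworks).

    Zero padding of d * (len(w) - 1) // 2 keeps the output length equal to the
    input length when the kernel length is odd.
    """
    k = len(w)
    pad = d * (k - 1) // 2
    out: List[float] = []
    for i in range(len(x)):
        acc = 0.0
        for j in range(k):
            idx = i + j * d - pad  # kernel taps are spaced d samples apart
            if 0 <= idx < len(x):  # positions outside the signal count as zero
                acc += w[j] * x[idx]
        out.append(acc)
    return out


def fuse_branches(
    x: Sequence[float], w: Sequence[float], dilations: Sequence[int]
) -> Tuple[List[float], List[float]]:
    """Hypothetical multi-rate fusion: parallel atrous branches reweighted by attention.

    Each branch is summarised by global average pooling, and a softmax over
    these descriptors gives one weight per branch. This is an illustrative
    stand-in, NOT the paper's AFE module.
    """
    branches = [atrous_conv1d(x, w, d) for d in dilations]
    scores = [sum(b) / len(b) for b in branches]
    m = max(scores)  # subtract the max for a numerically stable softmax
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    fused = [
        sum(wt * b[i] for wt, b in zip(weights, branches)) for i in range(len(x))
    ]
    return fused, weights


if __name__ == "__main__":
    print(effective_kernel(3, 2), stacked_receptive_field(3, [1, 2, 3]))  # prints: 5 13
```

A 3-tap kernel with dilation rates 1, 2 and 3 spans 3, 5 and 7 samples. Stacking those three layers gives a receptive field of 13, yet each layer still has only three weights.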

Data Availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

Funding

This work is supported by the National Natural Science Foundation of China under grant no. 62162061.

Ethics declarations

Ethics approval and consent to participate

This article does not contain any studies with human participants or animals performed by any of the authors. Important note: Informed consent was obtained from all individual participants included in the study.

Conflict of Interests

All authors of this manuscript declare that they have no conflict of interest.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Li, K., Liu, Z. MCANet: multi-scale contextual feature fusion network based on Atrous convolution. Multimed Tools Appl 82, 34679–34702 (2023). https://doi.org/10.1007/s11042-023-14800-8

DOI: https://doi.org/10.1007/s11042-023-14800-8