Abstract

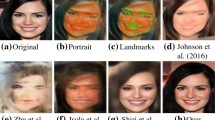

Portrait cartoonization aims to translate a portrait image into its cartoon version, which requires satisfying two conditions: reducing textural details and synthesizing cartoon facial features (e.g., big eyes or a line-drawing nose). To address this problem, we propose a two-stage training scheme based on generative adversarial networks (GANs), which are powerful for stylization problems. The abstraction stage, equipped with a novel abstractive loss, reduces textural details, while the perception stage synthesizes cartoon facial features. To comprehensively evaluate the proposed method and other state-of-the-art methods for portrait cartoonization, we contribute a new, challenging, large-scale dataset named CartoonFace10K. In addition, we find that the popular metric FID focuses on the target style yet ignores the preservation of the input image content. We thus introduce a novel metric, FISI, which combines FID and SSIM to account for both target-style features and the retention of input content. Quantitative and qualitative results demonstrate that our proposed method outperforms other state-of-the-art methods.
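The FISI metric is built from two standard measures, FID and SSIM. The abstract does not state how FISI combines them, so the sketch below makes no attempt to reproduce FISI itself; it only computes the two ingredients under stated assumptions. FID is taken as the Fréchet distance between Gaussians fitted to two sets of feature vectors (in practice these come from an Inception-v3 network; here any `(N, D)` arrays are accepted). SSIM is simplified to a single global window over grayscale images in `[0, 1]`, rather than the usual sliding Gaussian window. The function names `frechet_distance` and `global_ssim` are hypothetical, and NumPy is assumed to be available.

```python
import numpy as np

# Assumption: FISI's exact combination of FID and SSIM is not given here,
# so only the two ingredient metrics are computed.


def _psd_sqrt(mat: np.ndarray) -> np.ndarray:
    """Symmetric square root of a positive semi-definite matrix via eigh."""
    vals, vecs = np.linalg.eigh(mat)
    vals = np.clip(vals, 0.0, None)  # drop tiny negative eigenvalues from round-off
    return (vecs * np.sqrt(vals)) @ vecs.T


def frechet_distance(feats_a: np.ndarray, feats_b: np.ndarray) -> float:
    """FID-style distance between Gaussians fitted to two (N, D) feature sets.

    d = ||mu_a - mu_b||^2 + Tr(S_a + S_b - 2 (S_a S_b)^{1/2})
    """
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    # atleast_2d keeps the D == 1 case a 1x1 matrix instead of a scalar.
    cov_a = np.atleast_2d(np.cov(feats_a, rowvar=False))
    cov_b = np.atleast_2d(np.cov(feats_b, rowvar=False))
    # S_a S_b has the same eigenvalues as the symmetric PSD matrix
    # S_a^{1/2} S_b S_a^{1/2}, so the trace of its square root is the
    # sum of the square roots of those eigenvalues.
    sqrt_a = _psd_sqrt(cov_a)
    eigvals = np.clip(np.linalg.eigvalsh(sqrt_a @ cov_b @ sqrt_a), 0.0, None)
    tr_covmean = np.sqrt(eigvals).sum()
    diff = mu_a - mu_b
    return float(diff @ diff + np.trace(cov_a) + np.trace(cov_b) - 2.0 * tr_covmean)


def global_ssim(x: np.ndarray, y: np.ndarray, data_range: float = 1.0) -> float:
    """SSIM over one global window (a simplification of windowed SSIM)."""
    c1 = (0.01 * data_range) ** 2  # stabilizing constants from the SSIM definition
    c2 = (0.03 * data_range) ** 2
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(num / den)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feats = rng.normal(size=(500, 8))
    print(frechet_distance(feats, feats))        # expected to be close to 0.0
    print(frechet_distance(feats, feats + 1.0))  # mean shift only: close to 8.0
    img = rng.random((64, 64))
    print(global_ssim(img, img))                 # identical images: close to 1.0
```

The trace of the matrix square root is obtained from the eigenvalues of a symmetric matrix, which avoids depending on SciPy's `sqrtm` while giving the same value for positive semi-definite covariances. A low FID alone says nothing about whether the input content survived, which is exactly the gap the abstract attributes to FID; SSIM against the input photo is the content-side signal.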

Data Availability

Data are available from the authors on request.

Acknowledgements

We gratefully acknowledge the support of NVIDIA Corporation through a GPU donation.

Funding

This research is funded by Vietnam National University Ho Chi Minh City (VNUHCM) under grant number C2022-26-01. This work is also supported by the National Science Foundation (NSF) under Grant 2025234. Thanh-Danh Nguyen is funded by the Master, PhD Scholarship Programme of Vingroup Innovation Foundation (VINIF), code VINIF.2022.ThS.104.

Ethics declarations

Conflict of Interests

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ho, ST., Huu, MK.N., Nguyen, TD. et al. Abstraction-perception preserving cartoon face synthesis. Multimed Tools Appl 82, 31607–31624 (2023). https://doi.org/10.1007/s11042-023-14853-9

DOI: https://doi.org/10.1007/s11042-023-14853-9