Abstract

Infrared images show prominent targets and stable imaging, but they retain little detail such as texture and have limited resolution. Visible images, in contrast, offer rich texture information and high resolution, but their imaging is easily disturbed by the environment. It is therefore desirable to compensate for the shortcomings of each modality and integrate the advantages of both into a single image. In this paper, we propose an infrared and visible image fusion method that combines latent low-rank representation (LatLRR) with a convolutional neural network (CNN), termed LatLRR-CNN. The method mitigates information loss and degraded imaging quality, and it avoids the need to design complex fusion rules or networks. First, LatLRR is used to decompose the infrared and visible images into low-rank parts and salient parts. Second, the low-rank parts and the salient parts are each fused separately using a CNN. Finally, the fused low-rank part and the fused salient part are merged to obtain the fused image. Experiments on publicly accessible datasets show that our method outperforms state-of-the-art methods in both objective metrics and visual quality. Specifically, on the Nato sequence, our method achieves average values of 7.59 for EN, 2.89 for MI, 57.77 for SD, and 0.51 for VIf.
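The three-stage structure described above (decompose, fuse each part, merge) can be illustrated with a minimal sketch. This is not the authors' implementation. The decomposition here is a deliberately trivial stand-in for LatLRR (a constant mean image plus a residual), the two fusion rules are hand-written stand-ins for the CNN, and merging by addition is an assumption. All function names are hypothetical, and images are represented as flat lists of 8-bit gray levels to keep the example dependency-free. The `entropy` and `std_dev` helpers show one common way to compute metrics of the kind abbreviated EN and SD, assuming these denote Shannon entropy and standard deviation of the gray levels.

```python
import math
from collections import Counter


def decompose(img):
    """STAND-IN for LatLRR: split an image into a smooth part and a residual.

    The 'low-rank' part is just the constant mean image and the 'salient'
    part is what remains. Real LatLRR solves an optimization problem instead.
    """
    mean = sum(img) / len(img)
    low = [mean] * len(img)
    salient = [p - mean for p in img]
    return low, salient


def fuse_low(a, b):
    """STAND-IN for the CNN on low-rank parts: pixel-wise average."""
    return [(x + y) / 2 for x, y in zip(a, b)]


def fuse_salient(a, b):
    """STAND-IN for the CNN on salient parts: keep the larger magnitude."""
    return [x if abs(x) >= abs(y) else y for x, y in zip(a, b)]


def fuse(ir, vis):
    """Decompose both inputs, fuse each part separately, then merge."""
    ir_low, ir_sal = decompose(ir)
    vis_low, vis_sal = decompose(vis)
    low = fuse_low(ir_low, vis_low)
    sal = fuse_salient(ir_sal, vis_sal)
    # Assumption: merge by addition, then round and clip to the 8-bit range.
    return [min(255, max(0, round(l + s))) for l, s in zip(low, sal)]


def entropy(pixels):
    """Shannon entropy in bits of a flat sequence of integer gray levels."""
    n = len(pixels)
    counts = Counter(pixels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def std_dev(pixels):
    """Population standard deviation of the gray levels."""
    n = len(pixels)
    mean = sum(pixels) / n
    return math.sqrt(sum((p - mean) ** 2 for p in pixels) / n)


if __name__ == "__main__":
    ir = [0, 0, 255, 255]        # toy "infrared" image: strong target contrast
    vis = [100, 120, 100, 120]   # toy "visible" image: fine texture
    fused = fuse(ir, vis)
    print(fused, entropy(fused), std_dev(fused))
```

Keeping the decomposition and the two fusion rules as separate functions mirrors the pipeline in the text: each stand-in could be swapped for a real LatLRR solver or a trained network without changing `fuse`.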

Data Availability

The datasets analysed during the current study are available in the TNO_Image_Fusion_Dataset repository, https://figshare.com/articles/TNO_Image_Fusion_Dataset/1008029.

References

Bavirisetti DP, Xiao G, Liu G (2017) Multi-sensor image fusion based on fourth order partial differential equations. In: 2017 20th international conference on information fusion (Fusion). IEEE, pp 1–9

Chen J, Li X, Luo L, Mei X, Ma J (2020) Infrared and visible image fusion based on target-enhanced multiscale transform decomposition. Inf Sci 508:64–78

Fu Y, Wu X-J (2021) A dual-branch network for infrared and visible image fusion. In: 2020 25th international conference on pattern recognition (ICPR). IEEE, pp 10675–10680

Gao Z, Wang Q, Zuo C (2021) A total variation global optimization framework and its application on infrared and visible image fusion. SIViP 16(1):219–227

Han J, Bhanu B (2007) Fusion of color and infrared video for moving human detection. Pattern Recogn 40(6):1771–1784

Han Y, Cai Y, Cao Y, Xu X (2013) A new image fusion performance metric based on visual information fidelity. Information Fusion 14(2):127–135

Han J, Pauwels EJ, De Zeeuw P (2013) Fast saliency-aware multi-modality image fusion. Neurocomputing 111:70–80

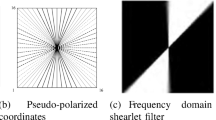

Kong W, Lei Y, Zhao H (2014) Adaptive fusion method of visible light and infrared images based on non-subsampled shearlet transform and fast non-negative matrix factorization. Infrared Physics & Technology 67:161–172

Kong W, Zhang L, Lei Y (2014) Novel fusion method for visible light and infrared images based on nsst–sf–pcnn. Infrared Physics & Technology 65:103–112

Kumar P, Mittal A, Kumar P (2006) Fusion of thermal infrared and visible spectrum video for robust surveillance. In: Computer vision, graphics and image processing. Springer, pp 528–539

Li G, Lin Y, Qu X (2021) An infrared and visible image fusion method based on multi-scale transformation and norm optimization. Information Fusion 71:109–129

Li H, Wu X-J (2018) Infrared and visible image fusion using latent low-rank representation. arXiv:1804.08992

Li H, Wu X-J, Kittler J (2018) Infrared and visible image fusion using a deep learning framework. In: 2018 24th international conference on pattern recognition (ICPR). IEEE, pp 2705–2710

Li H, Wu X-J, Kittler J (2020) Mdlatlrr: a novel decomposition method for infrared and visible image fusion. IEEE Trans Image Process 29:4733–4746

Li S, Yang B, Hu J (2011) Performance comparison of different multi-resolution transforms for image fusion. Information Fusion 12(2):74–84

Li S, Yin H, Fang L (2012) Group-sparse representation with dictionary learning for medical image denoising and fusion. IEEE Trans Biomed Eng 59(12):3450–3459

Liu Y, Chen X, Cheng J, Peng H, Wang Z (2018) Infrared and visible image fusion with convolutional neural networks. Int J Wavelets Multiresolut Inf Process 16(03):1850018

Liu G, Lin Z, Yu Y et al (2010) Robust subspace segmentation by low-rank representation. In: Icml, vol 1. Citeseer, p 8

Liu Y, Liu S, Wang Z (2015) A general framework for image fusion based on multi-scale transform and sparse representation. Information Fusion 24:147–164

Liu G, Yan S (2011) Latent low-rank representation for subspace segmentation and feature extraction. In: 2011 international conference on computer vision. IEEE, pp 1615–1622

Ma J, Chen C, Li C, Huang J (2016) Infrared and visible image fusion via gradient transfer and total variation minimization. Information Fusion 31:100–109

Ma J, Liang P, Yu W, Chen C, Guo X, Wu J, Jiang J (2020) Infrared and visible image fusion via detail preserving adversarial learning. Information Fusion 54:85–98

Ma J, Yu W, Liang P, Li C, Jiang J (2019) Fusiongan: a generative adversarial network for infrared and visible image fusion. Information Fusion 48:11–26

Ma J, Zhou Z, Wang B, Zong H (2017) Infrared and visible image fusion based on visual saliency map and weighted least square optimization. Infrared Physics & Technology 82:8–17

Pajares G, De La Cruz JM (2004) A wavelet-based image fusion tutorial. Pattern Recogn 37(9):1855–1872

Qu G, Zhang D, Yan P (2002) Information measure for performance of image fusion. Electron Lett 38(7):313–315

Rajkumar S, Mouli PC (2014) Infrared and visible image fusion using entropy and neuro-fuzzy concepts. In: ICT and critical infrastructure: proceedings of the 48th annual convention of computer society of India-Vol I. Springer, pp 93–100

Rao Y-J (1997) In-fibre bragg grating sensors. Measurement science and technology 8(4):355

Reinhard E, Adhikhmin M, Gooch B, Shirley P (2001) Color transfer between images. IEEE Comput Graphics Appl 21(5):34–41

Roberts JW, Van Aardt JA, Ahmed FB (2008) Assessment of image fusion procedures using entropy, image quality, and multispectral classification. J Appl Remote Sens 2(1):023522

Simone G, Farina A, Morabito FC, Serpico SB, Bruzzone L (2002) Image fusion techniques for remote sensing applications. Information Fusion 3 (1):3–15

Singh R, Vatsa M, Noore A (2008) Integrated multilevel image fusion and match score fusion of visible and infrared face images for robust face recognition. Pattern Recogn 41(3):880–893

Wang Z, Bovik AC, Sheikh HR, Simoncelli EP (2004) Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 13(4):600–612

Wang J, Peng J, Feng X, He G, Fan J (2014) Fusion method for infrared and visible images by using non-negative sparse representation. Infrared Physics & Technology 67:477–489

Wang Z, Wu Y, Wang J, Xu J, Shao W (2022) Res2fusion: Infrared and visible image fusion based on dense res2net and double nonlocal attention models. IEEE Trans Instrum Meas 71:1–12

Wang Z, Wu Y, Wang J, Xu J, Shao W (2022) Res2fusion: Infrared and visible image fusion based on dense res2net and double nonlocal attention models. IEEE Trans Instrum Meas 71:1–12

Xiang T, Yan L, Gao R (2015) A fusion algorithm for infrared and visible images based on adaptive dual-channel unit-linking pcnn in nsct domain. Infrared Physics & Technology 69:53–61

Xu H, Liang P, Yu W, Jiang J, Ma J (2019) Learning a generative model for fusing infrared and visible images via conditional generative adversarial network with dual discriminators. In: IJCAI, pp 3954–3960

Xu H, Ma J, Le Z, Jiang J, Guo X (2020) Fusiondn: a unified densely connected network for image fusion. In: Proceedings of the AAAI conference on artificial intelligence, vol 34, pp 12484–12491

Yang Y, Liu J, Huang S, Wan W, Wen W, Guan J (2021) Infrared and visible image fusion via texture conditional generative adversarial network. IEEE Trans Circuits Syst Video Technol 31(12):4771–4783

Yang Z, Zeng S (2022) TPFUsion: Texture preserving fusion of infrared and visible images via dense networks. Entropy 24(2):294

Zhang Z, Blum RS (1999) A categorization of multiscale-decomposition-based image fusion schemes with a performance study for a digital camera application. Proc IEEE 87(8):1315–1326

Zhang L, Li H, Zhu R, Du P (2022) An infrared and visible image fusion algorithm based on ResNet-152. Multimed Tools Appl 81(7):9277–9287

Zhang X, Ma Y, Fan F, Zhang Y, Huang J (2017) Infrared and visible image fusion via saliency analysis and local edge-preserving multi-scale decomposition. JOSA A 34(8):1400–1410

Zhang H, Xu H, Xiao Y, Guo X, Ma J (2020) Rethinking the image fusion: a fast unified image fusion network based on proportional maintenance of gradient and intensity. In: Proceedings of the AAAI conference on artificial intelligence, vol 34, pp 12797–12804

Zhao J, Chen Y, Feng H, Xu Z, Li Q (2014) Infrared image enhancement through saliency feature analysis based on multi-scale decomposition. Infrared Physics & Technology 62:86–93

Zhao J, Cui G, Gong X, Zang Y, Tao S, Wang D (2017) Fusion of visible and infrared images using global entropy and gradient constrained regularization. Infrared Physics & Technology 81:201–209

Zhao Z, Xu S, Zhang C, Liu J, Li P, Zhang J (2020) Didfuse: Deep image decomposition for infrared and visible image fusion. arXiv:2003.09210

Zhao F, Zhao W, Yao L, Liu Y (2021) Self-supervised feature adaption for infrared and visible image fusion. Information Fusion 76:189–203

Ethics declarations

Conflict of Interests

The authors declare that there are no conflicts of interest in this work.

Additional information

Project supported by the National Key Research and Development Project of China (JG2018190).

Chengrui Gao, Zhangqiang Ming, Jixiang Guo, Edou Leopold, Junlong Cheng and Jie Zuo contributed equally to this work.

About this article

Cite this article

Yang, Y., Gao, C., Ming, Z. et al. LatLRR-CNN: an infrared and visible image fusion method combining latent low-rank representation and CNN. Multimed Tools Appl 82, 36303–36323 (2023). https://doi.org/10.1007/s11042-023-14967-0

DOI: https://doi.org/10.1007/s11042-023-14967-0