Abstract

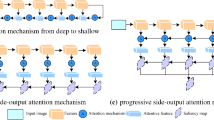

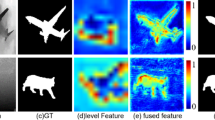

Existing deep-learning-based saliency detection algorithms extract image features insufficiently and fuse those features only during decoding. As a result, the edges of the detected salient regions are unclear and their interiors are not uniform. To address these problems, this paper proposes a dual-stream encoding fusion method for saliency detection based on RGB and grayscale images. First, an interactive dual-stream encoder is constructed to extract feature information from the grayscale stream and the RGB stream. Second, a multi-level fusion strategy is used to obtain more effective multi-scale features. These features are extended and optimized in the decoding stage by linear transformation with hybrid attention. Finally, we propose a hybrid weighted loss function so that the model's predictions maintain high accuracy at both the pixel level and the region level. Experimental results on six public datasets show that the proposed method produces predictions with clearer edges around salient targets and more uniform interiors, and that the model is more lightweight.
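To make the two-stream input and the idea of a loss that acts at both pixel and region level more concrete, here is a minimal NumPy sketch. It is an illustration under stated assumptions, not the paper's implementation: the abstract does not specify how the grayscale image is derived or which terms the hybrid weighted loss contains. The sketch assumes ITU-R BT.601 luma weights for the grayscale stream, binary cross-entropy as the pixel-level term, and a soft IoU as the region-level term. The function names and the weights `w_pixel` and `w_region` are hypothetical.

```python
import numpy as np


def rgb_to_gray(rgb):
    """Convert an H x W x 3 RGB array in [0, 1] to an H x W grayscale array.

    Assumption: ITU-R BT.601 luma weights; the paper may derive its
    grayscale stream differently.
    """
    weights = np.array([0.299, 0.587, 0.114])
    return rgb @ weights


def bce_loss(pred, target, eps=1e-7):
    """Pixel-level term: mean binary cross-entropy over all pixels."""
    pred = np.clip(pred, eps, 1.0 - eps)  # avoid log(0)
    per_pixel = -(target * np.log(pred) + (1.0 - target) * np.log(1.0 - pred))
    return float(np.mean(per_pixel))


def soft_iou_loss(pred, target, eps=1e-7):
    """Region-level term: one minus the soft intersection-over-union."""
    inter = np.sum(pred * target)
    union = np.sum(pred) + np.sum(target) - inter
    return float(1.0 - (inter + eps) / (union + eps))


def hybrid_loss(pred, target, w_pixel=1.0, w_region=1.0):
    """Weighted sum of the pixel-level and region-level terms.

    The weights are illustrative placeholders, not values from the paper.
    """
    return w_pixel * bce_loss(pred, target) + w_region * soft_iou_loss(pred, target)


if __name__ == "__main__":
    rgb = np.random.default_rng(0).random((4, 4, 3))
    gray = rgb_to_gray(rgb)  # second input stream alongside the RGB image
    print(gray.shape)

    target = np.zeros((4, 4))
    target[1:3, 1:3] = 1.0  # toy ground-truth saliency mask
    pred = np.where(target == 1.0, 0.9, 0.1)  # toy prediction close to the mask
    print(hybrid_loss(pred, target))
```

In this sketch the pixel-level term penalizes each pixel independently, while the region-level term depends on the overlap of the whole predicted region with the ground truth. That is one common way to encourage both sharp edges and uniform interiors.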

Acknowledgments

This work was supported by the Major Science and Technology Project in Henan Province [221100110500], the Science and Technology Project of Henan Province [222102320380, 222102110194, 212102210161], and the National Key Research and Development Project [2019YFB1311000].

Ethics declarations

Conflict of interest

The authors declare no conflicts of interest regarding the publication of this paper. The datasets generated and/or analyzed during the present study are available from the corresponding author on reasonable request.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Weishuo Zhao contributed equally to this work.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Xu, T., Zhao, W., Chai, H. et al. Dual-stream encoded fusion saliency detection based on RGB and grayscale images. Multimed Tools Appl 82, 47327–47346 (2023). https://doi.org/10.1007/s11042-023-15217-z

DOI: https://doi.org/10.1007/s11042-023-15217-z