Abstract

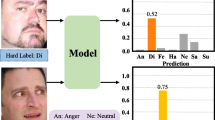

Previous research on cross-domain Facial Expression Recognition (FER) mainly focused on metric learning or adversarial learning, which presupposes access to source domain data to find domain invariant information. However, in practical applications, due to the high privacy and sensitivity of face data, it is often impossible to directly obtain source domain data. In this case, these methods cannot be effectively applied. In order to better apply the cross-domain FER method to the real scenarios, this paper proposes a source-free FER method called Label Transfer Virtual Adversarial Learning (LTVAL), which does not need to directly access source domain data. First, we train the target domain model based on the information maximization constraint, and obtain the pseudo-labels of the target domain data through deep clustering to achieve label transfer. Secondly, the perturbation is added to the target domain samples, and the perturbed samples and the original samples are together used for virtual adversarial training with local distributed smoothing constraints. Finally, a joint loss function is constructed to optimize the target domain model. Using the source domain model trained on RAF-DB, experiments on four public datasets FER2013, JAFFE, CK+, and EXPW as target domain datasets show that our approach achieves much higher performance than the state-of-the-art cross-domain FER methods that require access to source domain data.

Similar content being viewed by others

Data Availability

The data that support the findings of this study are openly available at RAF-DB:

http://www.whdeng.cn/raf/model1.html

FER2013:https://www.kaggle.com/datasets/msambare/fer2013

JAFFE:https://zenodo.org/record/3451524

CK+:https://sites.pitt.edu/~emotion/ck-spread.htm

EXPW:http://mmlab.ie.cuhk.edu.hk/projects/socialrelation/index.html

References

Chen T, Pu T, Wu H, Xie Y, Liu L, Lin L (2021) Cross-domain facial expression recognition: a unified evaluation benchmark and adversarial graph learning. IEEE Transa Pattern Anal Mach Intell. https://doi.org/10.1109/TPAMI.2021.3131222

Cui S, Wang S, Zhuo J, Li L, Huang Q, Tian Q (2020) Towards discriminability and diversity: Batch nuclear-norm maximization under label insufficient situations. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp 3941–3950

Deng J, Guo J, Xue N, Zafeiriou S (2019) ArcFace: Additive angular margin loss for deep face recognition. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). IEEE

Fang HS, Xie S, Tai YW, Lu C (2017) RMPE: Regional multi-person pose estimation. In: 2017 IEEE International Conference on Computer Vision (ICCV). IEEE.

Goodfellow IJ, Erhan D, Carrier PL et al (2013) Challenges in representation learning: A report on three machine learning contests. International conference on neural information processing.(ICIP). Springer, 2013

Gretton A, Borgwardt KM, Rasch MJ, Schölkopf B, Smola A (2012) A kernel two-sample test. J Mach Learn Res 13(1):723–773

Guo Y, Zhang L, Hu Y, He X, Gao J (2016) Ms-celeb-1m: A dataset and benchmark for large-scale face recognition. In: European conference on computer vision (pp 87–102). Springer, Cham

Hartigan JA, Wong MA (1979) Algorithm AS 136: a k-means clustering algorithm. Journal of the royal statistical society. series c (applied statistics) 28(1):100–108

Ji Y, Hu Y, Yang Y, Shen F, Shen HT (2019) Cross-domain facial expression recognition via an intra-category common feature and inter-category distinction feature fusion network. Neurocomputing 333:231–239

Kang G, Jiang L, Yang Y, Hauptmann AG (2019) Contrastive adaptation network for unsupervised domain adaptation. In: Proceedings of the IEEE/CVF Conference on computer vision and pattern recognition, pp 4893–4902

Krizhevsky A, Sutskever I, Hinton GE (2017) Imagenet classification with deep convolutional neural networks. Commun ACM 60(6):84–90

Lee CY, Batra T, Baig MH, Ulbricht D (2019) Sliced wasserstein discrepancy for unsupervised domain adaptation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)(pp 10285–10295

Li S, Deng W (2018) Deep emotion transfer network for cross-database facial expression recognition. In: 2018 24th International Conference on Pattern Recognition (ICPR) (pp 3092–3099). IEEE

Li S, Deng W (2022) A deeper look at facial expression dataset bias. IEEE Trans Affect Comput 13(2):881–893

Li S, Deng W, Du J (2017) Reliable crowdsourcing and deep locality-preserving learning for expression recognition in the wild. In: Proceedings of the IEEE conference on computer vision and pattern recognition.(CVPR), pp 2852–2861

Li S, Deng W, Du J (2017) Reliable crowdsourcing and deep locality-preserving learning for expression recognition in the wild. IEEE/CVF conference on computer vision and pattern recognition. (CVPR), pp 2584–2593

Li T, Gu JC, Ling ZH, Liu Q (2022) Conversation-and tree-structure losses for dialogue disentanglement. In: Proceedings of the 2nd DialDoc Workshop on document-grounded dialogue and conversational question answering, pp 54–64

Liang J, Hu D, Feng J (2020) Do we really need to access the source data? source hypothesis transfer for unsupervised domain adaptation. In: International conference on machine learning, pp 6028–6039

Liang J, Hu D, Wang Y, He R, Feng J (2022) Source data-absent unsupervised domain adaptation through hypothesis transfer and labeling transfer. IEEE Trans Pattern Anal Mach Intell 44(1):8602–8617

Liu S, Huang S, Fu W, Lin JC (2022) A descriptive human visual cognitive strategy using graph neural network for facial expression recognition. Int J Mach Learn Cybern, 25 Oct 2022

Liu S, Li Y, Fu W (2022) Human-centered attention-aware networks for action recognition. Int J Intell Syst, 23 August 2022

Liu S, Wang S, Liu X et al (2022) Human inertial thinking strategy: a novel fuzzy reasoning mechanism for IoT-assisted visual monitoring. IEEE Int Things J, 11 Jan 2022

Liu H, Wang J, Long M (2021) Cycle self-training for domain adaptation. Adv Neural Inf Process Syst 34:22968–22981

Liu W, Wen Y, Yu Z, Li M, Raj B, Song L (2017) Sphereface: Deep hypersphere embedding for face recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR), pp 212–220

Long M, Cao Z, Wang J, Jordan MI (2018) Conditional adversarial domain adaptation. Adv Neural Inf Process Syst, p 31

Lucey P, Cohn JF, Kanade T, Saragih J, Ambadar Z, Matthews I (2010) The extended cohn-kanade dataset (ck+): A complete dataset for action unit and emotion-specified expression. In: 2010 ieee computer society conference on computer vision and pattern recognition-workshops (pp 94–101). IEEE

Lyons MJ, Akamatsu S, Kamachi M, Gyoba J, Budynek J (1998) The Japanese female facial expression (JAFFE) database. In: Proceedings of third international conference on automatic face and gesture recognition, pp 14–16

Mohan K, Seal A, Krejcar O, Yazidi A (2021) FER-Net: facial expression recognition using deep neural net. Neural Comput Applic 33(15):9125–9136

Mohan K, Seal A, Krejcar O, Yazidi A (2021) Facial expression recognition using local gravitational force descriptor-based deep convolution neural networks. IEEE Trans Instrum Meas 70:1–12

Newell A, Huang Z, Deng J (2017) Associative embedding: End-to-end learning for joint detection and grouping. Adv Neural Inf Process Syst, p 30

Ruan D, Yan Y, Lai S, Chai Z, Shen C, Wang H (2021) Feature decomposition and reconstruction learning for effective facial expression recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp 7656–7665

Saenko K, Kulis B, Fritz M, Darrell T (2010) Adapting visual category models to new domains. In: European conference on computer vision (pp 213–226). Springer, Berlin, Heidelberg

She J, Hu Y, Shi H, Wang J, Shen Q, Mei T (2021) Dive into ambiguity: latent distribution mining and pairwise uncertainty estimation for facial expression recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition(CVPR), pp 6248–6257

Van der Maaten L, Hinton G (2008) Visualizing data using t-SNE. J Mach Learn Res 9(11):2579–2605

Wang K, Peng X, Yang J, Lu S, Qiao Y (2020) Suppressing uncertainties for large-scale facial expression recognition. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp 6897–6906

Wang K, Peng X, Yang J, Meng D, Qiao Y (2020) Region attention networks for pose and occlusion robust facial expression recognition. IEEE Trans Image Process 29:4057–4069

Xie Y, Chen T, Pu T, Wu H, Lin L (2020) Adversarial graph representation adaptation for cross-domain facial expression recognition. In: Proceedings of the 28th ACM international conference on multimedia, pp 1255–1264

Xu R, Li G, Yang J, Lin L (2019) Larger norm more transferable: an adaptive feature norm approach for unsupervised domain adaptation. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (CVPR), pp 1426–1435

Zavarez MV, Berriel RF, Oliveira-Santos T (2017) Cross-database facial expression recognition based on fine-tuned deep convolutional network. In: 2017 30th SIBGRAPI Conference on Graphics, Patterns and Images. (SIBGRAPI) (pp 405–412). IEEE

Zhang Z, Luo P, Loy CC, Tang X (2015) Learning social relation traits from face images. In: Proceedings of the IEEE International conference on computer vision, pp 3631–3639

Zhang K, Zhang Z, Li Z, Qiao Y (2016) Joint face detection and alignment using multitask cascaded convolutional networks. IEEE Signal Process Lett 23(10):1499–1503

Zhang X, Zhang F, Xu C (2022) Joint expression synthesis and representation learning for facial expression recognition. IEEE Trans Circuits Syst Video Technol 32(3):1681–1695

Zhou L, Fan X, Tjahjadi T, Das Choudhury S (2022) Discriminative attention-augmented feature learning for facial expression recognition in the wild. Neural Comput Applic 34(2):925–936

Zhou Q, Zhou WA, Wang S, Xing Y (2021) Unsupervised domain adaptation with adversarial distribution adaptation network. Neural Comput Applic 33(13):7709–7721

Zhu R, Sang G, Zhao Q (2016) Discriminative feature adaptation for cross-domain facial expression recognition. In: 2016 International Conference on Biometrics (ICB) (pp 1–7). IEEE

Zhu Y, Zhuang F, Wang J, Ke G, Chen J, Bian J, He Q (2020) Deep subdomain adaptation network for image classification. IEEE Trans Neural Netw Learn Syst 32(4):1713–1722

Zou W, Zhang D, Lee DJ (2022) A new multi-feature fusion based convolutional neural network for facial expression recognition. Appl Intell 52(3):2918–2929

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China (62071384), the Key Research and Development Project of Shaanxi Province of China(2023-YBGY-239), Natural Science Basic Research Plan in Shaanxi Province of China (2023-JC-YB-531).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of Interests

The authors declare that they have no conflict of interest to this work.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Zhe Guo and Xuewen Liu are contributed equally to this work.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Guo, Z., Liu, Y., Liu, X. et al. LTVAL: Label Transfer Virtual Adversarial Learning framework for source-free facial expression recognition. Multimed Tools Appl 83, 5207–5228 (2024). https://doi.org/10.1007/s11042-023-15297-x

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-023-15297-x