Abstract

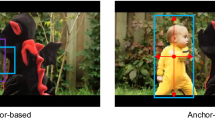

Efficient Siamese anchor-free trackers have fewer parameters, but they produce a large number of low-quality bounding boxes located far from the center of the object. Moreover, abundant background information and distractors interfere with the tracking process, leading to inaccurate classification and regression results. To address these problems, we propose a novel Siamese anchor-free network based on criss-cross attention and an improved head network. We use ResNet-50 to extract features from the template image and the search region, then feed the feature maps into a recurrent criss-cross attention module to make them more discriminative. The enhanced feature maps are passed to our improved head network, which adds a center-ness branch to the original classification and regression branches to filter out low-quality bounding boxes. The proposed tracker reduces the impact of background information and distractors and obtains high-quality bounding boxes, producing more accurate and robust tracking results. Extensive experiments and comparisons with state-of-the-art trackers are conducted on challenging benchmarks including VOT2016, VOT2018, GOT-10k, UAV123 and OTB2015. Our tracker achieves excellent performance while running at real-time speed.
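The two mechanisms the abstract relies on, recurrent criss-cross attention and a center-ness branch that down-weights off-center boxes, can be sketched in plain Python. This is an illustrative sketch under stated assumptions, not the authors' implementation: the query, key and value projections are taken as identity maps (CCNet learns 1×1 convolutions for them), the residual weight `gamma` is fixed rather than learned, the ResNet-50 backbone and Siamese cross-correlation are omitted, and the center-ness formula and the `rescore` rule (classification score multiplied by center-ness) follow the FCOS/SiamCAR convention rather than anything this article confirms. All function names are hypothetical.

```python
import math
from typing import List, Tuple

Vec = List[float]
Grid = List[List[Vec]]  # H x W grid of C-dimensional feature vectors


def _softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    es = [math.exp(x - m) for x in xs]
    s = sum(es)
    return [e / s for e in es]


def criss_cross(x: Grid, gamma: float = 1.0) -> Grid:
    """One criss-cross attention pass with identity Q/K/V projections (assumed)."""
    H, W, C = len(x), len(x[0]), len(x[0][0])
    out: Grid = []
    for i in range(H):
        row: List[Vec] = []
        for j in range(W):
            # The H + W - 1 positions that share row i or column j.
            path: List[Tuple[int, int]] = [(i, c) for c in range(W)]
            path += [(r, j) for r in range(H) if r != i]
            q = x[i][j]
            # Dot-product affinities between the query and every key on the path.
            w = _softmax([sum(a * b for a, b in zip(q, x[r][c])) for r, c in path])
            # Attention-weighted sum of the values on the path.
            ctx = [sum(wk * x[r][c][d] for wk, (r, c) in zip(w, path)) for d in range(C)]
            # Residual connection: output = gamma * context + input.
            row.append([gamma * ctx[d] + q[d] for d in range(C)])
        out.append(row)
    return out


def recurrent_criss_cross(x: Grid, loops: int = 2, gamma: float = 1.0) -> Grid:
    """Apply the same pass `loops` times (CCNet uses two loops with shared
    weights); after two loops every position has indirectly received
    information from every other position."""
    for _ in range(loops):
        x = criss_cross(x, gamma)
    return x


def centerness(l: float, t: float, r: float, b: float) -> float:
    """FCOS-style center-ness of a location whose distances to the box's
    left, top, right and bottom edges are l, t, r, b (all > 0)."""
    return math.sqrt((min(l, r) / max(l, r)) * (min(t, b) / max(t, b)))


def rescore(cls_scores: List[float], ctr_scores: List[float]) -> List[float]:
    """Down-weight off-center locations: classification score times center-ness."""
    return [s * c for s, c in zip(cls_scores, ctr_scores)]


if __name__ == "__main__":
    print(centerness(10, 10, 10, 10))  # 1.0 at the exact box center
    print(centerness(2, 10, 18, 10))   # about 0.333 near the left edge
    print(rescore([0.9, 0.8], [1.0, 0.25]))
```

A single pass only mixes information along a position's own row and column, which is why the module is applied recurrently: in the second pass, context gathered along other columns reaches the position through its row. On the head side, a location near a box edge such as `centerness(2, 10, 18, 10)` scores about 0.33 versus 1.0 at the exact center, so its box is ranked lower once scores are multiplied.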

Data availability

The VOT2016 and VOT2018 datasets analyzed during the current study are available at https://www.votchallenge.net/. The UAV123 dataset is available at https://cemse.kaust.edu.sa/ivul/uav123. The GOT-10k dataset is available at http://got-10k.aitestunion.com/. The OTB2015 dataset analyzed during the current study is available at http://cvlab.hanyang.ac.kr/tracker_benchmark/.

Acknowledgements

This work was supported in part by the Open Fund of Key Laboratory of Safety Control of Bridge Engineering, Ministry of Education (Changsha University of Science and Technology) under Grant 21 KB06, in part by the Science Fund for Creative Research Groups of Hunan Province under Grant 2020JJ1006, and in part by the National Natural Science Foundation of China under Grant 61972056.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, J., Huang, H., Jin, X. et al. Siamese visual tracking based on criss-cross attention and improved head network. Multimed Tools Appl 83, 1589–1615 (2024). https://doi.org/10.1007/s11042-023-15429-3
