Abstract

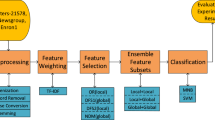

Text classification is an important topic because of the high volume of textual data that must be handled. Feature selection is one of the most important steps in text classification and significantly affects classification performance. Filter-based global feature selection methods are widely proposed in the literature. When these methods are globalized, the globalization is generally performed using class information alone, and feature information is ignored. When the score of each feature is calculated, the information of the feature should be taken into account along with the class information. To solve this problem, a new globalization technique called Feature and Class-based Weighted Sum (FCWS), which takes into account both feature and class information, is proposed. The FCWS method is compared with traditional globalization techniques on four datasets (Reuters-21578, 20Newsgroup, Enron1 and Polarity) using Support Vector Machines (SVM), Decision Tree (DT) and Multinomial Naive Bayes (MNB) classifiers. Feature set sizes of 50, 100, 300, 500, 1000 and 3000 were used as dimensions. Experimental studies on these benchmark datasets show that, in most cases, the proposed method achieves higher Micro-F1 and Macro-F1 scores than the other three methods: maximum (MAX), sum (SUM), and weighted-sum (AVG).
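To make the idea of globalization concrete, the following is a minimal sketch of the three traditional policies named in the abstract (MAX, SUM and the weighted AVG). It assumes that a filter method has already produced a matrix of local scores, one per feature and class, and that AVG weights each class by its prior probability, as is common in the literature. The function names `globalize` and `top_k` and the example numbers are hypothetical. FCWS itself is not shown because the abstract does not give its formula.

```python
import numpy as np

def globalize(local_scores, class_priors, policy="max"):
    """Collapse local scores of shape (n_features, n_classes) into one
    global score per feature using a traditional globalization policy."""
    local_scores = np.asarray(local_scores, dtype=float)
    class_priors = np.asarray(class_priors, dtype=float)
    if policy == "max":
        # MAX: keep the best score a feature reaches in any class.
        return local_scores.max(axis=1)
    if policy == "sum":
        # SUM: add the scores over all classes.
        return local_scores.sum(axis=1)
    if policy == "avg":
        # AVG: sum weighted by class prior (assumed weighting scheme).
        return local_scores @ class_priors
    raise ValueError(f"unknown policy: {policy!r}")

def top_k(global_scores, k):
    """Return the indices of the k highest-scoring features."""
    return np.argsort(-np.asarray(global_scores), kind="stable")[:k]

# Hypothetical local scores for 3 features over 3 classes.
local = np.array([
    [0.9, 0.0, 0.0],   # strong for one class only
    [0.4, 0.4, 0.4],   # moderate for every class
    [0.1, 0.8, 0.1],   # strong for the most frequent class
])
priors = np.array([0.2, 0.5, 0.3])  # hypothetical class priors

for policy in ("max", "sum", "avg"):
    scores = globalize(local, priors, policy)
    print(policy, scores, "best feature:", top_k(scores, 1)[0])
```

With these made-up numbers each policy ranks a different feature first, which illustrates why the choice of globalization technique can change the selected feature set.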

Data availability

The data that support the findings of this study are openly available. The Reuters-21578 and 20Newsgroup datasets are available in the UCI Machine Learning Repository at https://archive.ics.uci.edu/ml/datasets/reuters-21578+text+categorization+collection (Reference 4). Enron1 and Polarity are binary-class datasets given in (Reference 20).

References

Agnihotri D, Verma K, Tripathi P (2017) Variable global feature selection scheme for automatic classification of text documents. Expert Syst Appl 81:268–281

Agnihotri D, Verma K, Tripathi P, Singh BK (2019) Soft voting technique to improve the performance of global filter based feature selection in text corpus. Appl Intell 49(4):1597–1619

Ahmed B (2020) Wrapper feature selection approach based on binary firefly algorithm for spam e-mail filtering. J Soft Comput Data Min 1(2):44–52

Asuncion A, Newman D (2007) UCI machine learning repository. https://archive.ics.uci.edu/ml/index.php

Debole F, Sebastiani F (2004) Supervised term weighting for automated text categorization. Text mining and its applications. Springer, Berlin, pp 81–97

Deng X, Li Y, Weng J, Zhang J (2019) Feature selection for text classification: a review. Multimedia Tools Appl 78(3):3797–3816

Forman G (2003) An extensive empirical study of feature selection metrics for text classification. J Mach Learn Res 3(Mar):1289–1305

Gupta ST, Sahoo JK, Roul RK (2019) Authorship identification using recurrent neural networks. Proceedings of the 2019 3rd International Conference on Information System and Data Mining, pp 133–137

Guyon I, Elisseeff A (2003) An introduction to variable and feature selection. J Mach Learn Res 3(Mar):1157–1182

Joachims T (1998) Text categorization with support vector machines: Learning with many relevant features. European conference on machine learning. Springer, Berlin, pp 137–142

Khan J, Alam A, Lee Y (2021) Intelligent hybrid feature selection for textual sentiment classification. IEEE Access 9:140590–140608

Khurana A, Verma OP (2020) Novel approach with nature-inspired and ensemble techniques for optimal text classification. Multimedia Tools Appl 79(33):23821–23848

Kou G, Yang P, Peng Y, Xiao F, Chen Y, Alsaadi FE (2020) Evaluation of feature selection methods for text classification with small datasets using multiple criteria decision-making methods. Appl Soft Comput 86:105836

Kumar A, Bhatia M, Sangwan SR (2022) Rumour detection using deep learning and filter-wrapper feature selection in benchmark twitter dataset. Multimedia Tools Appl 81(24):34615–34632

Madasu A, Elango S (2020) Efficient feature selection techniques for sentiment analysis. Multimedia Tools Appl 79(9):6313–6335

Onan A (2018) An ensemble scheme based on language function analysis and feature engineering for text genre classification. J Inf Sci 44(1):28–47

Özgür A, Özgür L, Güngör T (2005) Text categorization with class-based and corpus-based keyword selection. International Symposium on Computer and Information Sciences. Springer, Berlin, pp 606–615

Parlak B (2022) Class-index corpus-index measure: A novel feature selection method for imbalanced text data. Concurr Comput Pract Exp 34(21):e7140

Parlak B, Uysal AK (2019) On classification of abstracts obtained from medical journals. J Inf Sci 46(5):648–663

Parlak B, Uysal AK (2020) The effects of globalisation techniques on feature selection for text classification. J Inf Sci 47(6):727–739

Parlak B, Uysal AK (2023) A novel filter feature selection method for text classification: extensive feature selector. J Inf Sci 49(1):59–78

Porter MF (1997) An algorithm for suffix stripping. In: Sparck Jones K, Willett P (eds) Readings in information retrieval. Morgan Kaufmann Publishers Inc, San Francisco

Rehman A, Javed K, Babri HA (2017) Feature selection based on a normalized difference measure for text classification. Inf Process Manag 53(2):473–489

Rehman A, Javed K, Babri HA, Asim N (2018) Selection of the most relevant terms based on a max-min ratio metric for text classification. Expert Syst Appl 114:78–96

Rehman A, Javed K, Babri HA, Saeed M (2015) Relative discrimination criterion–A novel feature ranking method for text data. Expert Syst Appl 42(7):3670–3681

Schütze H, Manning CD, Raghavan P (2008) Introduction to information retrieval. Cambridge University Press, Cambridge

Shunmugapriya P, Kanmani S (2017) A hybrid algorithm using ant and bee colony optimization for feature selection and classification (AC-ABC hybrid). Swarm Evol Comput 36:27–36

Taşcı Ş, Güngör T (2013) Comparison of text feature selection policies and using an adaptive framework. Expert Syst Appl 40(12):4871–4886

Theodoridis S, Koutroumbas K (2009) Pattern recognition, 4th edn. Academic Press

Uysal AK (2016) An improved global feature selection scheme for text classification. Expert Syst Appl 43:82–92

Uysal AK (2018) On two-stage feature selection methods for text classification. IEEE Access 6:43233–43251

Uysal AK, Gunal S (2012) A novel probabilistic feature selection method for text classification. Knowl Based Syst 36:226–235

Uysal AK, Gunal S (2014) The impact of preprocessing on text classification. Inf Process Manag 50(1):104–112

Xia T, Chen X (2021) A weighted feature enhanced hidden Markov Model for spam SMS filtering. Neurocomputing 444:48–58

Zhang Z, Hong W-C (2021) Application of variational mode decomposition and chaotic grey wolf optimizer with support vector regression for forecasting electric loads. Knowl Based Syst 228:107297

Zong W, Wu F, Chu L-K, Sculli D (2015) A discriminative and semantic feature selection method for text categorization. Int J Prod Econ 165:215–222

Ethics declarations

Conflict of interest

No author associated with this paper has disclosed any potential or pertinent conflicts that may be perceived as a conflict of interest with this work.

About this article

Cite this article

Parlak, B. A novel feature and class-based globalization technique for text classification. Multimed Tools Appl 82, 37635–37660 (2023). https://doi.org/10.1007/s11042-023-15459-x

DOI: https://doi.org/10.1007/s11042-023-15459-x