Abstract

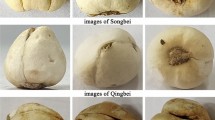

Fritillariae Cirrhosae Bulbus (FCB) is a well-known traditional Chinese medicine (TCM) that is widely used, both in cooking and in treatment, to relieve cough and eliminate phlegm. However, adulteration with other species for economic profit has frequently been reported. Inspired by deep learning, we propose EGNet, a novel approach based on image captioning that identifies FCB accurately and quickly by bridging image visual features and the textual descriptions in the Chinese Pharmacopoeia. In the encoder module, a Convolutional Block Attention Module (CBAM) and a spatial attention (SA) module are introduced into EfficientNet-B0 to strengthen and focus on the distinguishing features. In the decoder module, a gated recurrent unit (GRU) is chosen for its simpler structure and fewer parameters, and is used to generate the text descriptions that correspond to and explain the image. In addition, an adaptive attention mechanism with a visual sentinel is injected into the GRU so that the model can decide adaptively whether to rely on visual or semantic information. Experiments confirm that the proposed EGNet outperforms competing methods: it achieves the highest identification accuracy (99.0%, 99.3%, and 99.4%) and the best word-matching completeness (91.1%, 92.2%, and 91.6%) for Lubei, Qingbei, and Songbei, respectively. The approach significantly improves classification accuracy at low cost, and it proves to be an effective practice for high-efficiency TCM discrimination and TCM technology.
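To make the role of the visual sentinel concrete, the following is a minimal sketch of the mixing step in a generic adaptive attention mechanism, not the authors' implementation. It assumes the attention scores for the image regions and for the sentinel have already been computed by the decoder; the function names `softmax` and `adaptive_context` and all argument names are hypothetical and chosen only for illustration.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a flat list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def adaptive_context(
    region_feats: Sequence[Sequence[float]],
    region_scores: Sequence[float],
    sentinel: Sequence[float],
    sentinel_score: float,
) -> Tuple[List[float], float]:
    """Blend attended image regions with a visual sentinel (illustrative).

    Returns the mixed context vector and beta, the weight on the sentinel.
    A beta near 1 means the decoder leans on semantic (language) information;
    a beta near 0 means it leans on visual information.
    """
    # One softmax over the k region scores plus the sentinel score.
    weights = softmax(list(region_scores) + [sentinel_score])
    beta = weights[-1]
    dim = len(sentinel)
    # Weighted sum of region features; these weights sum to (1 - beta).
    context = [
        sum(w * feat[d] for w, feat in zip(weights[:-1], region_feats))
        for d in range(dim)
    ]
    # Add the sentinel's share to obtain the mixed context vector.
    mixed = [context[d] + beta * sentinel[d] for d in range(dim)]
    return mixed, beta
```

When every score is equal, the sentinel and each region receive the same weight, so with two regions `beta` is 1/3. Pushing the sentinel score very low drives `beta` toward 0 and the context becomes a purely visual average of the regions.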

Data Availability

The data that support the findings of this study are available at https://www.kaggle.com/datasets/tanchaoqun/7788tcq.

Acknowledgements

This study was funded by the Project of State Administration of Traditional Chinese Medicine of Sichuan (grant no. 2021MS012), the Research Promotion Plan for Xinglin Scholars in Chengdu University of Traditional Chinese Medicine (No. QNXZ2019018), and Research on Informatization of Traditional Chinese Medicine in Chengdu University of Traditional Chinese Medicine (No. MIEC1803).

Author information

Contributions

Chaoqun Tan: Formal analysis, Investigation, Software, Validation, Visualization, Writing-original draft, Writing-review & editing. Chong Wu: Software, Methodology, Investigation, Validation, Writing-original draft. Chunjie Wu: Resources, Supervision, Project administration, Funding acquisition. Hu Chen: Methodology, Writing-review & editing, Supervision, Project administration, Funding acquisition.

Ethics declarations

Competing interests

The authors declare no competing interests.

About this article

Cite this article

Tan, C., Wu, C., Wu, C. et al. Visual feature-based improved EfficientNet-GRU for Fritillariae Cirrhosae Bulbus identification. Multimed Tools Appl 83, 5697–5721 (2024). https://doi.org/10.1007/s11042-023-15497-5
