Abstract

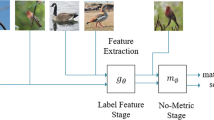

In image classification, few-shot learning (FSL) aims to recognize new samples of each category from extremely limited data. Because data are scarce, FSL has usually been approached by optimizing the network or by searching for new measurement (metric) methods. However, these mechanisms may fail to obtain enough information from the limited test data and therefore lack learning ability. To address this problem, we obtain additional information from unlabeled data by means of pseudo-labels. To improve the reliability of pseudo-label selection, we propose a new sample selection strategy, named Metric Confidence Selection (MCS), which is better suited to selecting the most reliable pseudo-labeled data. In addition, we propose a new framework that combines MCS with metric learning. The framework extracts more information from the unlabeled samples, which improves data utilization efficiency. Extensive experiments on four widely used benchmark datasets show that the proposed method surpasses most state-of-the-art methods in few-shot image classification.
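The abstract does not specify how MCS scores or selects samples, so the following is only a generic sketch of confidence-based pseudo-label selection on top of a metric classifier, not the authors' algorithm. It assumes class prototypes computed as mean support embeddings, a softmax over negative squared Euclidean distances as the confidence score, and a hypothetical `top_k` parameter that controls how many pseudo-labeled samples per class are added to the support set. All function names are illustrative.

```python
import numpy as np


def class_prototypes(features, labels, num_classes):
    """Return the mean embedding of the labeled samples of each class."""
    return np.stack([features[labels == c].mean(axis=0) for c in range(num_classes)])


def metric_confidence(features, prototypes):
    """Softmax over negative squared Euclidean distances to the prototypes."""
    diffs = features[:, None, :] - prototypes[None, :, :]
    logits = -(diffs ** 2).sum(axis=-1)
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    probs = np.exp(logits)
    return probs / probs.sum(axis=1, keepdims=True)


def select_pseudo_labeled(support_x, support_y, unlabeled_x, num_classes, top_k=1):
    """Add the top_k most confident pseudo-labeled samples per class.

    This selection rule is an assumption made for illustration only; it is
    not taken from the paper.
    """
    prototypes = class_prototypes(support_x, support_y, num_classes)
    probs = metric_confidence(unlabeled_x, prototypes)
    pseudo_labels = probs.argmax(axis=1)
    confidence = probs.max(axis=1)

    chosen = []
    for c in range(num_classes):
        idx = np.flatnonzero(pseudo_labels == c)
        # Sort the candidates of class c by descending confidence.
        best = idx[np.argsort(-confidence[idx])[:top_k]]
        chosen.extend(best.tolist())
    chosen = np.array(chosen, dtype=int)

    new_x = np.concatenate([support_x, unlabeled_x[chosen]])
    new_y = np.concatenate([support_y, pseudo_labels[chosen]])
    return new_x, new_y, chosen
```

In a sketch like this, the enlarged support set would be used to recompute the prototypes before classifying the query samples. Selecting per class rather than globally keeps the augmented support set balanced, which is one plausible design choice among several.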

Data Availability

Not Applicable

Code Availability

Not Applicable

Funding

This study was supported by the National Natural Science Foundation of China (No. 62171314), and the recipient of the support was Kai He.

Author information

Contributions

Conceptualization: Lei Wang and Kai He; methodology: Lei Wang; software: Lei Wang; validation: Lei Wang and Kai He; formal analysis: Lei Wang; investigation: Zikang Liu; resources: Zikang Liu; data curation: Zikang Liu; writing-original draft preparation: Lei Wang; writing-review and editing: Kai He; supervision: Kai He; project administration: Kai He; funding acquisition: Kai He. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Conflict of interest/Competing interests

The authors have no competing interests to declare that are relevant to the content of this article.

Ethics approval

Not Applicable

Consent to participate

Not Applicable

Consent for publication

Not Applicable

About this article

Cite this article

Wang, L., He, K. & Liu, Z. MCS: a metric confidence selection framework for few shot image classification. Multimed Tools Appl 83, 10865–10880 (2024). https://doi.org/10.1007/s11042-023-15892-y

DOI: https://doi.org/10.1007/s11042-023-15892-y