Abstract

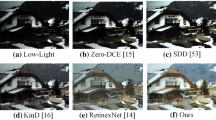

This paper proposes a Fusion and Recalibration Network (FRN) for low-light image enhancement. First, the proposed method generates multi-exposure images from a single low-light input image. The proposed Feature Extraction Module (FEM) then extracts multi-level features from each image. A Feature Augmentation Module (FAM), which has a U-Net-like structure, encodes the multi-level features and assists in the reconstruction. Finally, the proposed Feature Fusion and Re-calibration Module (FFRM) re-calibrates and merges the features to produce the enhanced output image. Dynamically selecting features from the extremely bright regions of the artificially darkened images and from the darker regions of the artificially brightened images yields a balanced output image. The proposed model was evaluated on various datasets and significantly outperformed most state-of-the-art techniques. The experimental assessment further shows that the proposed FRN model outperforms competing approaches in both quantitative and qualitative evaluations.
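The idea of deriving several exposures from one image and blending them region by region can be illustrated with a classical, hand-crafted stand-in. The sketch below is not the FRN architecture: it assumes gamma correction as the exposure generator and a Gaussian "well-exposedness" weight as the selection rule, whereas the paper learns both steps with its FEM, FAM, and FFRM modules. All function names, the gamma values, and `sigma` are hypothetical choices made for illustration.

```python
import math


def adjust_exposure(image, gamma):
    """Gamma-adjust a grayscale image with values in [0, 1].

    gamma < 1 brightens the image, gamma > 1 darkens it (assumed generator).
    """
    return [[pixel ** gamma for pixel in row] for row in image]


def well_exposedness(pixel, sigma=0.2):
    """Gaussian weight that peaks at mid-gray (0.5) and decays toward 0 and 1."""
    return math.exp(-((pixel - 0.5) ** 2) / (2 * sigma ** 2))


def fuse(exposures, sigma=0.2):
    """Blend exposures per pixel, favoring whichever exposure is best exposed."""
    height, width = len(exposures[0]), len(exposures[0][0])
    fused = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            values = [exposure[y][x] for exposure in exposures]
            weights = [well_exposedness(v, sigma) for v in values]
            total = sum(weights)  # strictly positive, since exp() > 0
            fused[y][x] = sum(w * v for w, v in zip(weights, values)) / total
    return fused


def enhance(image, gammas=(0.4, 1.0, 2.5)):
    """Create brightened, original, and darkened versions, then fuse them."""
    exposures = [adjust_exposure(image, g) for g in gammas]
    return fuse(exposures)


if __name__ == "__main__":
    low_light = [[0.05, 0.10], [0.02, 0.20]]
    for row in enhance(low_light):
        print([round(p, 3) for p in row])
```

For a dark pixel, the brightened exposure lies closest to mid-gray and therefore dominates the blend, while for an over-bright pixel the darkened exposure dominates. This mirrors, in a fixed rule, the balanced selection that the abstract attributes to learned feature re-calibration.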

Data Availability

Data sharing is not applicable to this article, as no datasets were generated during the current study.

Acknowledgements

Akshat Agarwal, Mohit Kumar Agarwal, and Aditya Shankar worked on the implementation and analysis. Akshat Agarwal and Ashutosh Pandey worked on exploration and the initial draft of the paper. Kavinder Singh and Anil Singh Parihar finalized the problem, guided the implementation, revised the rough draft of the paper, and took care of the revision.

Ethics declarations

Conflicts of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

About this article

Cite this article

Singh, K., Pandey, A., Agarwal, A. et al. FRN: Fusion and recalibration network for low-light image enhancement. Multimed Tools Appl 83, 12235–12252 (2024). https://doi.org/10.1007/s11042-023-15908-7

DOI: https://doi.org/10.1007/s11042-023-15908-7