Abstract

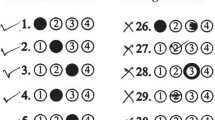

Public surveys are widely used in many domains to collect feedback from service users, with the aim of improving the quality of the service provided or of understanding what users think. In general, processing the collected data takes time because of manual intervention, and processing errors can distort the results. The aim of this research is therefore to propose a new method for rapidly processing paper survey forms so that statistical results are available on time. The paper template must contain at least one question with a “Yes” and a “No” check box or radio button as the response field. Respondents are free to reply as they wish: a normal answer (one box checked), no response, or both boxes checked. Each box in the form may contain a different type of handwriting, and these marks are recognized using mathematical tools combined with neural networks. First, the physical paper containing the information is converted to an image by digitization. Any image format is accepted. The image is then handled by a preprocessing module before being passed to the Lite Convolutional Neural Network. Once the neural network has finished its processing, a postprocessing module computes the results and inserts them into a database. The distinctive feature of this technique is the use of a Lite Convolutional Neural Network as the recognition tool, which has advantages over standard deep learning models in terms of computation time, accuracy/precision and number of parameters. The approach is validated on the RVL-CDIP dataset and on real surveys of mobile money and satellite TV services, with accuracies of 96%, 97% and 98%, respectively.
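The postprocessing step described in the abstract, which turns per-box recognition results into per-question statistics and stores them in a database, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the Lite CNN has already produced one boolean "checked" decision for each “Yes” and “No” box, and the function names (`classify_response`, `tally`, `percentages`, `store`), the `survey_stats` table and the use of SQLite are hypothetical choices made for the example.

```python
import sqlite3
from collections import Counter

# Assumption: the recognition model outputs one boolean per box
# (True = the box is judged to be checked). All names below are
# hypothetical and only illustrate the postprocessing idea.

CATEGORIES = ("yes", "no", "no_response", "both")


def classify_response(yes_checked: bool, no_checked: bool) -> str:
    """Map the Yes/No box decisions of one question to a response category."""
    if yes_checked and no_checked:
        return "both"          # respondent ticked both boxes
    if yes_checked:
        return "yes"
    if no_checked:
        return "no"
    return "no_response"       # neither box ticked


def tally(predictions):
    """Count response categories per question.

    predictions: iterable of (question_id, yes_checked, no_checked) tuples,
    one tuple per question per scanned form.
    """
    counts = {}
    for question_id, yes_checked, no_checked in predictions:
        category = classify_response(yes_checked, no_checked)
        counts.setdefault(question_id, Counter())[category] += 1
    return counts


def percentages(counter):
    """Convert a Counter of categories into percentages of all forms."""
    total = sum(counter.values())
    if total == 0:
        return {}
    # Counter returns 0 for categories that never occurred.
    return {cat: 100.0 * counter[cat] / total for cat in CATEGORIES}


def store(conn, counts):
    """Write per-question counts to a hypothetical SQLite table.

    INSERT OR REPLACE overwrites earlier counts for the same
    (question_id, category) pair instead of accumulating them.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS survey_stats ("
        "question_id TEXT, category TEXT, n INTEGER, "
        "PRIMARY KEY (question_id, category))"
    )
    rows = [(q, cat, n) for q, ctr in counts.items() for cat, n in ctr.items()]
    conn.executemany("INSERT OR REPLACE INTO survey_stats VALUES (?, ?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    # Five illustrative scanned forms with a single question "Q1".
    preds = [("Q1", True, False), ("Q1", False, True), ("Q1", True, False),
             ("Q1", False, False), ("Q1", True, True)]
    conn = sqlite3.connect(":memory:")
    counts = tally(preds)
    store(conn, counts)
    print(conn.execute(
        "SELECT category, n FROM survey_stats "
        "WHERE question_id = 'Q1' ORDER BY category"
    ).fetchall())
    # → [('both', 1), ('no', 1), ('no_response', 1), ('yes', 2)]
    print(percentages(counts["Q1"]))
    # → {'yes': 40.0, 'no': 20.0, 'no_response': 20.0, 'both': 20.0}
```

Keeping “both” and “no response” as categories of their own, rather than discarding those forms, means every scanned form is counted, so the percentages for a question always sum to 100%.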

Data Availability

Data is contained within the article.

Ethics declarations

Conflict of Interest

The authors declare no conflict of interest.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Rafidison, M.A., Rakotomihamina, A.H., Rajaonarison, F.T.M. et al. Intervention of light convolutional neural network in document survey form processing. Multimed Tools Appl 82, 32583–32605 (2023). https://doi.org/10.1007/s11042-023-16076-4

DOI: https://doi.org/10.1007/s11042-023-16076-4