Abstract

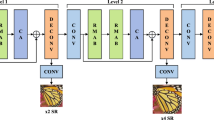

Deep neural networks have achieved strong super-resolution performance in recent years. However, extracting multi-level features from low-resolution (LR) images, a step that is essential for reconstructing clearer images, remains difficult. Most mainstream methods adopt encoder-decoder frameworks, yet these frameworks still struggle to extract such multi-level features. To overcome this limitation, we present multi-level continuous encoding and decoding based on dilated convolution for super-resolution (MEDSR). Specifically, we first construct a multi-level continuous encoding and decoding module that obtains easy-to-extract, complex-to-extract, and difficult-to-extract features of LR images. We then construct dilated attention modules with different dilation rates, which capture multi-level regional information from different receptive fields and attend to each level of this information to extract multi-level deep features. By dilating the receptive field of the attention module, these modules incorporate varying levels of contextual information, allowing each module to attend to a larger area of the input while maintaining a constant memory footprint. MEDSR uses these multi-level deep features of LR images to reconstruct better super-resolution (SR) images: at a scale factor of ×4, it reaches a PSNR of 32.65 dB and an SSIM of 0.9005 on the Set5 dataset. Extensive experimental results demonstrate that MEDSR outperforms several state-of-the-art super-resolution methods.
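The claim that dilation widens the area a module attends to without growing its footprint can be checked with standard dilated-convolution arithmetic. The sketch below is illustrative only: it is not the authors' attention module, and the kernel size of 3, the dilation rates 1, 2 and 3, and the 64-channel width are hypothetical values chosen for the example, not MEDSR's published configuration. A k × k kernel with dilation rate d spans d(k − 1) + 1 pixels per side, while its weight count stays k · k · C_in · C_out.

```python
"""Receptive-field arithmetic for dilated (atrous) convolutions.

Illustrative sketch: kernel size 3, dilation rates (1, 2, 3) and 64 channels
are hypothetical choices, not MEDSR's published configuration.
"""


def effective_kernel_size(k: int, d: int) -> int:
    """Spatial extent (per side) covered by a k x k kernel with dilation rate d."""
    return d * (k - 1) + 1


def conv_params(k: int, c_in: int, c_out: int, bias: bool = True) -> int:
    """Weight count of a k x k conv layer; it does not depend on the dilation rate."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def stacked_receptive_field(k: int, dilations: list[int]) -> int:
    """Receptive field of stride-1 conv layers applied one after another."""
    rf = 1
    for d in dilations:
        rf += effective_kernel_size(k, d) - 1
    return rf


def dilated_conv1d(x: list[float], w: list[float], d: int) -> list[float]:
    """'Valid' 1-D dilated convolution (cross-correlation, as in deep learning).

    Each output taps inputs spaced d apart, so a 3-tap kernel with d=2
    sees a window of 5 inputs while still using only 3 weights.
    """
    span = effective_kernel_size(len(w), d)
    return [
        sum(w[j] * x[i + j * d] for j in range(len(w)))
        for i in range(len(x) - span + 1)
    ]


if __name__ == "__main__":
    k = 3
    for d in (1, 2, 3):  # hypothetical rates for three parallel branches
        size = effective_kernel_size(k, d)
        print(f"d={d}: covers {size}x{size} pixels, "
              f"{conv_params(k, 64, 64)} parameters")
    print("three stacked layers (d=1,2,3):", stacked_receptive_field(k, [1, 2, 3]))
    print(dilated_conv1d([float(v) for v in range(10)], [1.0, 1.0, 1.0], d=2))
```

Branches with different rates therefore see 3 × 3, 5 × 5 and 7 × 7 neighbourhoods at identical weight cost, which is the property the abstract appeals to; how MEDSR fuses such branches with attention is not specified in this section.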

Data availability

The raw/processed data required to reproduce these findings cannot be shared at this time as the data also forms part of an ongoing study.

References

Agustsson E, Timofte R (2017) NTIRE 2017 challenge on single image super-resolution: Dataset and study[C]. Computer Vision and Pattern Recognition, IEEE, 126–135

Anwar S, Khan S, Barnes N (2020) A deep journey into super-resolution: A survey[J]. ACM Comput Surv (CSUR) 53(3):1–34

Bevilacqua M, Roumy A, Guillemot C et al (2012) Low-complexity single-image super-resolution based on nonnegative neighbor embedding[C]. British Machine Vision Conference, 1–10

Chen H, Gu J, Zhang Z (2021) Attention in Attention Network for Image Super-Resolution[J]. arXiv:2104.09497

Dai T, Cai J, Zhang Y et al (2019) Second-order attention network for single image super-resolution[C]. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, IEEE, 11065–11074

Dong C, Loy CC, He K et al (2015) Image super-resolution using deep convolutional networks[J]. IEEE Trans Pattern Anal Mach Intell 38(2):295–307

Dong C, Loy CC, Tang X et al (2016) Accelerating the Super-Resolution Convolutional Neural Network[C]. European Conference on Computer Vision, Springer, 391–407

Haris M, Shakhnarovich G, Ukita N (2018) Deep back-projection networks for super-resolution[C]. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, IEEE, 1664–1673

Huang J, Singh A, Ahuja N et al (2015) Single image super-resolution from transformed self-exemplars[C]. Computer Vision and Pattern Recognition, IEEE, 5197–5206

Kim J, Lee JK, Lee KM et al (2016) Accurate Image Super-Resolution Using Very Deep Convolutional Networks[C]. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, IEEE, 1646–1654

Kim J, Lee JK, Lee KM et al (2016) Deeply-Recursive Convolutional Network for Image Super-Resolution[C]. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, IEEE, 1637–1645

Kingma DP, Ba J (2014) Adam: A method for stochastic optimization[J]. arXiv preprint arXiv:1412.6980

Kumar BP, Kumar A, Pandey R (2022) Region-based adaptive single image dehazing, detail enhancement and pre-processing using auto-colour transfer method[J]. Signal Process Image Commun 100:116532

Lai W-S, Huang J-B, Ahuja N et al (2017) Deep Laplacian pyramid networks for fast and accurate super-resolution[C]. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, IEEE, 624–632

LeCun Y, Bengio Y, Hinton G (2015) Deep learning[J]. Nature 521(7553):436–444

Ledig C, Theis L, Huszár F et al (2017) Photo-realistic single image super-resolution using a generative adversarial network[C]. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, IEEE, 4681–4690

Lim B, Son S, Kim H et al (2017) Enhanced deep residual networks for single image super-resolution[C]. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, IEEE, 136–144

Liu AA, Shao Z, Wong Y et al (2019) LSTM-based multi-label video event detection[J]. Multimed Tools Appl 78(1):677–695

Liu H, Gu Y, Wang T et al (2020) Satellite video super-resolution based on adaptively spatiotemporal neighbors and nonlocal similarity regularization[J]. IEEE Trans Geosci Remote Sens 58(12):8372–8383

Liu H, Cao F, Wen C et al (2020) Lightweight multi-scale residual networks with attention for image super-resolution[J]. Knowl-Based Syst 203(4):106103

Liu J, Tang J, Wu G (2020) Residual feature distillation network for lightweight image super-resolution[C]. European Conference on Computer Vision, Springer, 41–55

Mao X, Shen C, Yang YB (2016) Image restoration using very deep convolutional encoder-decoder networks with symmetric skip connections[J]. Adv Neural Inf Proces Syst 29:2802–2810

Martin D, Fowlkes CC, Tal D et al (2001) A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics[C]. International Conference on Computer Vision, IEEE, 416–423

Meng Q, Zhao S, Huang Z et al (2021) MagFace: A universal representation for face recognition and quality assessment[C]. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, IEEE, 14225–14234

Shamsolmoali P, Zareapoor M, Jain DK et al (2019) Deep convolution network for surveillance records super-resolution[J]. Multimed Tools Appl 78(17):23815–23829

Shao Z, Han J, Marnerides D et al (2022) Region-object relation-aware dense captioning via transformer[J]. IEEE Trans Neural Netw Learn Syst

Shi W, Caballero J, Huszár F et al (2016) Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network[C]. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, IEEE, 1874–1883

Song Z, Zhao X, Hui Y et al (2021) Progressive back-projection network for COVID-CT super-resolution[J]. Comput Methods Prog Biomed 208:106193

Song Z, Zhao X, Jiang H (2021) Gradual deep residual network for super-resolution[J]. Multimed Tools Appl 80(7):9765–9778

Song Z, Zhao X, Hui Y et al (2022) Fusing Attention Network based on Dilated Convolution for Super Resolution[J]. IEEE Trans Cogn Develop Syst 15:234–241

Tai Y, Yang J, Liu X (2017) Image super-resolution via deep recursive residual network[C]. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, IEEE, 3147–3155

Wang Z, Bovik AC, Sheikh HR et al (2004) Image quality assessment: from error visibility to structural similarity[J]. IEEE Trans Image Process 13(4):600–612

Wang X, Yu K, Wu S et al (2018) ESRGAN: Enhanced super-resolution generative adversarial networks[C]. Proceedings of the European Conference on Computer Vision, Springer, 1–10

Wang Z, Chen J, Hoi SCH (2020) Deep learning for image super-resolution: A survey[J]. IEEE Trans Pattern Anal Mach Intell 43(10):3365–3387

Yang W, Zhang X, Tian Y et al (2019) Deep learning for single image super-resolution: A brief review[J]. IEEE Trans Multimed 21(12):3106–3121

Ye M, Shen J, Lin G et al (2021) Deep learning for person re-identification: A survey and outlook[J]. IEEE Trans Pattern Anal Mach Intell 44(6):2872–2893

Yue L, Shen H, Li J et al (2016) Image super-resolution: The techniques, applications, and future[J]. Signal Process 128:389–408

Zeyde R, Elad M, Protter M et al (2010) On single image scale-up using sparse-representations[C]. International Conference on Curves and Surfaces, 711–730

Zhang Y, Tian Y, Kong Y et al (2018) Residual dense network for image super-resolution[C]. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, IEEE, 2472–2481

Zhang Y, Li K, Li K et al (2018) Image super-resolution using very deep residual channel attention networks[C]. Proceedings of the European Conference on Computer Vision, Springer, 286–301

Acknowledgments

This work is supported by the National Key R&D Program (2020YFB1713600), the National Natural Science Foundation of China (61763029), the National Natural Science Foundation Youth Fund of China (41701479), the Science and Technology Program of Gansu Province (21YF5GA072, 21JR7RA206), the Education Industry Support Program of Gansu Provincial Department (2021CYZC-02), and the Natural Science Foundation of Liaoning Province (20180550529).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, Z., Ma, Y., Liu, W. et al. Multi-level continuous encoding and decoding based on dilation convolution for super-resolution. Multimed Tools Appl 83, 20149–20167 (2024). https://doi.org/10.1007/s11042-023-16415-5

DOI: https://doi.org/10.1007/s11042-023-16415-5