Abstract

Monocular depth estimation is a crucial task in computer vision, and self-supervised algorithms are gaining popularity because they do not require expensive ground-truth supervision. However, current self-supervised algorithms may produce inaccurate estimates and distorted boundaries when applied to indoor scenes. In image segmentation, combining multi-scale features is an important research direction for achieving accurate predictions and resolving boundary distortion, yet few studies have explored it for indoor self-supervised depth estimation. To address this gap, we propose a novel full-scale feature information fusion approach consisting of a full-scale skip connection and a full-scale feature fusion block. This approach aggregates the high-level and low-level information of feature maps at all scales during encoding and decoding, compensating for the cross-layer feature information the network would otherwise lose. The proposed full-scale feature fusion improves accuracy while reducing the number of decoder parameters. To fully exploit the full-scale feature fusion module, we replace the ResNet encoder backbone with the more advanced ResNeSt. Combining these two methods significantly improves prediction accuracy. We extensively evaluate our approach on the indoor benchmark datasets NYU Depth V2 and ScanNet. Experimental results demonstrate that our method outperforms existing algorithms, particularly on NYU Depth V2, where its precision reaches 83.8%.
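The abstract describes the full-scale skip connection only at a high level. The sketch below illustrates the general idea in the style of UNet 3+, under several assumptions: NumPy, single-image feature maps shaped (C, H, W) whose resolutions differ by powers of two, max pooling to shrink higher-resolution maps, nearest-neighbour upsampling to enlarge lower-resolution maps, a 1×1 projection per scale, and a ReLU after concatenation. The function names (`resize_to`, `full_scale_fuse`) and these design choices are hypothetical; this is not the authors' implementation, and the paper's learned convolutions, normalization, and fusion block details are not reproduced.

```python
import numpy as np


def max_pool(x: np.ndarray, k: int) -> np.ndarray:
    """Non-overlapping k x k max pooling on a (C, H, W) map (H, W divisible by k)."""
    c, h, w = x.shape
    return x.reshape(c, h // k, k, w // k, k).max(axis=(2, 4))


def upsample_nearest(x: np.ndarray, k: int) -> np.ndarray:
    """Nearest-neighbour upsampling of a (C, H, W) map by an integer factor k."""
    return x.repeat(k, axis=1).repeat(k, axis=2)


def resize_to(x: np.ndarray, target_h: int) -> np.ndarray:
    """Bring a square-ratio feature map to the target resolution (assumed power-of-two ratio)."""
    h = x.shape[1]
    if h > target_h:  # shallower, higher-resolution map: shrink it
        return max_pool(x, h // target_h)
    if h < target_h:  # deeper, lower-resolution map: enlarge it
        return upsample_nearest(x, target_h // h)
    return x


def project(x: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """1x1 convolution as channel mixing; weight has shape (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)


def full_scale_fuse(features, target_index, weights):
    """Hypothetical full-scale skip connection: every scale contributes to one target scale.

    features: list of (C_i, H_i, W_i) maps from all scales (e.g. shallower encoder
              maps and deeper decoder maps).
    weights:  list of (C_out, C_i) projection matrices, one per scale.
    """
    target_h = features[target_index].shape[1]
    parts = [project(resize_to(f, target_h), w) for f, w in zip(features, weights)]
    fused = np.concatenate(parts, axis=0)  # stack all scales along the channel axis
    return np.maximum(fused, 0.0)  # ReLU (assumed activation)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Four scales: 32x32, 16x16, 8x8, 4x4 with growing channel counts.
    channels = (4, 8, 16, 32)
    sizes = (32, 16, 8, 4)
    feats = [rng.standard_normal((c, s, s)) for c, s in zip(channels, sizes)]
    ws = [rng.standard_normal((2, c)) for c in channels]
    fused = full_scale_fuse(feats, target_index=2, weights=ws)
    print(fused.shape)  # (8, 8, 8): 4 scales x 2 channels each, at 8x8
```

Projecting every scale to the same small channel width before concatenation is one way a full-scale design can keep decoder parameters low, as UNet 3+ does; whether the paper's fusion block does exactly this is an assumption.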


Data availability

The datasets generated and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Chibane J, Alldieck T, Pons-Moll G (2020) Implicit functions in feature space for 3d shape reconstruction and completion. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 6970–6981

Du R, Turner E, Dzitsiuk M, Prasso L, Duarte I, Dourgarian J, Afonso J, Pascoal J, Gladstone J, Cruces N (2020) DepthLab: Real-time 3D interaction with depth maps for mobile augmented reality. In: Proceedings of the 33rd Annual ACM Symposium on User Interface Software and Technology, pp 829–843

Yin W, Liu Y, Shen C (2021) Virtual normal: enforcing geometric constraints for accurate and robust depth prediction. IEEE Trans Pattern Anal Mach Intell 44:7282–7295

Han C, Cheng D, Kou Q, Wang X, Chen L, Zhao J (2022) Self-supervised monocular Depth estimation with multi-scale structure similarity loss. Multimed Tools Appl 31:3251–3266

Lee S, Im S, Lin S, Kweon I.S (2021) Learning monocular depth in dynamic scenes via instance-aware projection consistency. In:Proceedings of the AAAI conference on artificial intelligence, pp 1863–1872

Liu L, Song X, Wang M, Liu Y, Zhang L (2021) Self-supervised monocular depth estimation for all day images using domain separation. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 12737–12746

Wang H, Wang M, Che Z, Xu Z, Qiao X, Qi M, Feng F, Tang J (2022) RGB-Depth fusion GAN for indoor depth completion. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 6209–6218

Yan Z, Wang K, Li X, Zhang Z, Li J, Yang J (2022) RigNet: Repetitive image guided network for depth completion. In: European conference on computer vision, Springer, pp 214–230

Jung G, Yoon SM (2022) Monocular depth estimation with multi-view attention autoencoder. Multimed Tools Appl 81:33759–33770

Sun L, Li Y, Liu B, Xu L, Zhang Z, Zhu J (2022) Transferring knowledge from monocular completion for self-supervised monocular depth estimation. Multimed Tools Appl 81:42485–42495

Ronneberger O, Fischer P, Brox T (2015) U-net: Convolutional networks for biomedical image segmentation. In: International conference on medical image computing and computer-assisted intervention, Springer, pp 234–241

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 770–778

Zhang H, Wu C, Zhang Z, Zhu Y, Lin H, Zhang Z, Sun Y, He T, Mueller J, Manmatha R (2022) Resnest: Split-attention networks. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 2736–2746

Silberman N, Hoiem D, Kohli P, Fergus R (2012) Indoor segmentation and support inference from rgbd images. In: European conference on computer vision, Springer, pp 746–760

Dai A, Chang AX, Savva M, Halber M, Funkhouser T, Nießner M (2017) Scannet: Richly-annotated 3d reconstructions of indoor scenes. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 5828–5839

Saxena A, Sun M, Ng AY (2008) Make3d: learning 3d scene structure from a single still image. IEEE Trans Pattern Anal Mach Intell 31:824–840

Eigen D, Puhrsch C, Fergus R (2014) Depth map prediction from a single image using a multi-scale deep network. Adv Neural Inf Process Syst 27(2):2366–2374

Hu J, Ozay M, Zhang Y, Okatani T (2019) Revisiting single image depth estimation: toward higher resolution maps with accurate object boundaries. 2019 IEEE winter conference on applications of computer vision (WACV), IEEE, pp 1043–1051

Huang G, Liu Z, Van Der Maaten L, Weinberger KQ (2017) Densely connected convolutional networks. Proceedings of the IEEE conference on computer vision and pattern recognition, pp 4700–4708

Hu J, Shen L, Sun G (2018) Squeeze-and-excitation networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 7132–7141

Lee JH, Han M-K, Ko DW, Suh IH (2019) From big to small: Multi-scale local planar guidance for monocular depth estimation, arXiv preprint arXiv:1907.10326

Bhat SF, Alhashim I, Wonka P (2021) Adabins: Depth estimation using adaptive bins. Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 4009–4018

Ranftl R, Bochkovskiy A, Koltun V (2021) Vision transformers for dense prediction. Proceedings of the IEEE/CVF international conference on computer vision, pp 12179–12188

Dosovitskiy A, Beyer L, Kolesnikov A, Weissenborn D, Zhai X, Unterthiner T, Dehghani M, Minderer M, Heigold G, Gelly S (2020) An image is worth 16x16 words: Transformers for image recognition at scale, arXiv preprint arXiv:2010.11929

Garg R, Bg VK, Carneiro G, Reid I (2016) Unsupervised cnn for single view depth estimation: Geometry to the rescue. In: European conference on computer vision, Springer, pp 740–756

Godard C, Mac Aodha O, Brostow GJ (2017) Unsupervised monocular depth estimation with left-right consistency. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 270–279

Zhou T, Brown M, Snavely N, Lowe DG (2017) Unsupervised learning of depth and ego-motion from video. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1851–1858

Godard C, Mac Aodha O, Firman M, Brostow GJ (2019) Digging into self-supervised monocular depth estimation. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 3828–3838

Lyu X, Liu L, Wang M, Kong X, Liu L, Liu Y, Chen X, Yuan Y (2021) Hr-depth: High resolution self-supervised monocular depth estimation. In: Proceedings of the AAAI conference on artificial intelligence, pp 2294–2301

Jung H, Park E, Yoo S (2021) Fine-grained semantics-aware representation enhancement for self-supervised monocular depth estimation. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 12642–12652

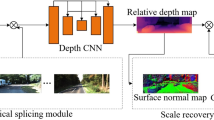

Ji P, Li R, Bhanu B, Xu Y (2021) Monoindoor: Towards good practice of self-supervised monocular depth estimation for indoor environments. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 12787–12796

Li B, Huang Y, Liu Z, Zou D, Yu W (2021) StructDepth: Leveraging the structural regularities for self-supervised indoor depth estimation. Proceedings of the IEEE/CVF international conference on computer vision, pp 12663–12673

Yu Z, Jin L, Gao S (2020) P2Net: Patch-Match and Plane-Regularization for unsupervised indoor depth estimation. European Conference on Computer Vision, Springer, pp 206–222

Wang Z, Bovik AC, Sheikh HR, Simoncelli EP (2004) Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 13:600–612

Xie S, Girshick R, Dollár P, Tu Z, He K (2017) Aggregated residual transformations for deep neural networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1492–1500

Huang H, Lin L, Tong R, Hu H, Zhang Q, Iwamoto Y, Han X, Chen Y-W, Wu J (2020) Unet 3+: A full-scale connected unet for medical image segmentation. In: ICASSP 2020–2020 IEEE international conference on acoustics, speech and signal processing (ICASSP), IEEE, pp 1055–1059

Zhou Z, Siddiquee MMR, Tajbakhsh N, Liang J (2019) Unet++: Redesigning skip connections to exploit multiscale features in image segmentation. IEEE Trans Med Imaging 39:1856–1867

Clevert D-A, Unterthiner T, Hochreiter S (2016) Fast and accurate deep network learning by exponential linear units (elus). In: Proceedings of the International Conference on Learning Representations, pp 1–14

Zhou J, Wang Y, Qin K, Zeng W (2019) Moving indoor: Unsupervised video depth learning in challenging environments. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 8618–8627

Kingma DP, Ba J (2015) Adam: a method for stochastic optimization. In: Proceedings of the International Conference on Learning Representations, pp 1–15

Wei Y, Guo H, Lu J, Zhou J (2021) Iterative feature matching for self-supervised indoor depth estimation. IEEE Trans Circuits Syst Video Technol 32:3839–3852

Wu C-Y, Wang J, Hall M, Neumann U, Su S (2022) Toward practical monocular indoor depth estimation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 3814–3824

Ladicky L, Shi J, Pollefeys M (2014) Pulling things out of perspective. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 89–96

Wang P, Shen X, Lin Z, Cohen S, Price B, Yuille AL (2015) Towards unified depth and semantic prediction from a single image. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 2800–2809

Liu F, Shen C, Lin G, Reid I (2015) Learning depth from single monocular images using deep convolutional neural fields. IEEE Trans Pattern Anal Mach Intell 38:2024–2039

Li J, Klein R, Yao A (2017) A two-streamed network for estimating fine-scaled depth maps from single rgb images. In: Proceedings of the IEEE international conference on computer vision, pp 3372–3380

Fu H, Gong M, Wang C, Batmanghelich K, Tao D (2018) Deep ordinal regression network for monocular depth estimation. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 2002–2011

Zhao W, Liu S, Shu Y, Liu Y-J (2020) Towards better generalization: Joint depth-pose learning without posenet. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 9151–9161

Bian J-W, Zhan H, Wang N, Chin T-J, Shen C, Reid I (2020) Unsupervised depth learning in challenging indoor video: Weak rectification to rescue, arXiv preprint arXiv:2006.02708

Trockman A, Zico Kolter J (2022) Patches are all you need?, arXiv preprint at arXiv:2201.09792

Ma X, Zhou Y, Wang H (2023) Can Qin, Bin Sun, Chang Liu, Yun Fu, Image as Set of Points. In: Proceedings of the International Conference on Learning Representations, pp 1–18

Wu G, Zheng W-S, Lu Y, Tian Q (2023) PSLT: A light-weight vision transformer with ladder self-attention and progressive shift. IEEE Trans Pattern Anal Mach Intell 45:11120–11135

Hinton G, Vinyals O, Dean J (2015) Distilling the knowledge in a neural network. Comput Sci 14.7:38–39

Acknowledgements

This work was supported by the National Natural Science Foundation of China under Grant 52204177.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Cheng, D., Chen, J., Lv, C. et al. Using full-scale feature fusion for self-supervised indoor depth estimation. Multimed Tools Appl 83, 28215–28233 (2024). https://doi.org/10.1007/s11042-023-16581-6

DOI: https://doi.org/10.1007/s11042-023-16581-6