Abstract

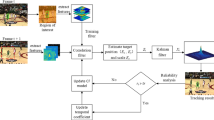

As an accurate and robust approach to visual object tracking, the discriminative correlation filter (DCF) framework has attracted considerable attention from researchers. For a given tracking sequence, a DCF tracker builds the target appearance model and the tracking model from the target information in the first frame, then predicts the location and scale of the target using a specific tracking strategy, while both models are updated by a model learning strategy. However, the performance of DCF trackers is limited by the boundary effect, which stems from a deficiency of the algorithm itself, and by response aberrance, which arises in complex tracking environments. To tackle these difficulties, we develop a visual tracking method based on a spatial-temporal regularized correlation filter with confidence template updating. Spatial-temporal regularizers are formulated for the model learning process to suppress the noise that arises during learning, and an adaptive model template updating strategy is adopted in the tracking process to repress response aberrance. The main contributions of our method (Ours) are: 1) a novel spatial regularization method that restrains the boundary effect and improves overall tracking performance by penalizing the edge coefficients of the correlation filter; 2) a novel temporal regularizer that addresses appearance variation of the target by making the learning process of the tracking model more stable and by overcoming the model noise caused by deficient model learning; 3) a novel adaptive updating strategy for the model template that alleviates aberrances in the response representations and yields more accurate target predictions. Extensive experiments on 351 challenging videos from the OTB2013, OTB2015, Temple-Color and UAV123 datasets show that Ours performs favorably against other state-of-the-art trackers and adapts efficiently to a variety of complex tracking scenarios.
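To make the general idea of confidence-gated template updating concrete, the following is a minimal sketch and not the method proposed in the paper. It assumes a single-channel, MOSSE-style filter learned in closed form in the Fourier domain, and it uses the peak-to-sidelobe ratio (PSR) as the confidence measure; the abstract does not state which confidence measure or regularizers are used, so the function names, the PSR threshold and the learning rate are all hypothetical.

```python
import numpy as np


def gaussian_label(h, w, sigma=2.0):
    """Desired response: a Gaussian peaked at the patch centre."""
    ys, xs = np.mgrid[0:h, 0:w]
    cy, cx = h // 2, w // 2
    return np.exp(-((ys - cy) ** 2 + (xs - cx) ** 2) / (2.0 * sigma ** 2))


def train_filter(patch, label, lam=1e-2):
    """Closed-form ridge-regression filter in the Fourier domain.

    Assumption: plain MOSSE-style learning, without the spatial or
    temporal regularizers described in the abstract.
    """
    X = np.fft.fft2(patch)
    Y = np.fft.fft2(label)
    return (Y * np.conj(X)) / (X * np.conj(X) + lam)


def respond(filt, patch):
    """Correlation response map of the filter on a new patch."""
    return np.real(np.fft.ifft2(filt * np.fft.fft2(patch)))


def psr(response, exclude=5):
    """Peak-to-sidelobe ratio, used here as a stand-in confidence score."""
    py, px = np.unravel_index(np.argmax(response), response.shape)
    mask = np.ones(response.shape, dtype=bool)
    # Exclude a small window around the peak; the rest is the sidelobe.
    mask[max(py - exclude, 0):py + exclude + 1,
         max(px - exclude, 0):px + exclude + 1] = False
    side = response[mask]
    return (response[py, px] - side.mean()) / (side.std() + 1e-12)


def update_filter(filt, patch, label, response, lr=0.02, psr_min=8.0):
    """Blend in a newly trained filter only when the response is confident.

    Returns the (possibly unchanged) filter and a flag saying whether
    the update was applied. lr and psr_min are hypothetical values.
    """
    if psr(response) < psr_min:
        return filt, False
    new_filt = train_filter(patch, label)
    return (1.0 - lr) * filt + lr * new_filt, True


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    target = rng.standard_normal((32, 32))
    label = gaussian_label(32, 32)
    filt = train_filter(target, label)

    # The same target, circularly shifted by (3, -2) pixels.
    moved = np.roll(target, shift=(3, -2), axis=(0, 1))
    resp = respond(filt, moved)
    print("peak:", np.unravel_index(np.argmax(resp), resp.shape))
    print("PSR:", psr(resp))
```

In this sketch, a response with a low PSR (for example, when the patch no longer contains the target) leaves the filter untouched, which is one simple way to keep unreliable frames from contaminating the template.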

Acknowledgements

This work was supported by the National Natural Science Foundation of China (Nos. 61671222 and 61903162) and the Postgraduate Research & Practice Innovation Program of Jiangsu Province (KYCX22_3822 and KYCX21_3484).

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

About this article

Cite this article

Liang, M., Wu, X., Tang, S. et al. Visual tracking via confidence template updating spatial-temporal regularized correlation filters. Multimed Tools Appl 83, 37053–37072 (2024). https://doi.org/10.1007/s11042-023-16707-w

DOI: https://doi.org/10.1007/s11042-023-16707-w