Abstract

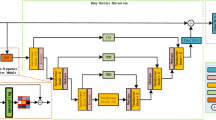

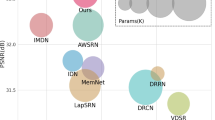

Global semantics and local scene representations are both crucial for image restoration. Existing methods have proposed various hybrid frameworks of convolutional neural networks (CNNs) and Transformers to account for both, but they focus only on the complementarity of the two capabilities. On the one hand, these works neglect the mutual guiding role of the two kinds of information; on the other hand, they ignore that the semantic gap caused by the two different modeling mechanisms, convolution and Self-Attention, seriously impedes feature fusion. In this work, we propose to establish entanglement between global and local features to bridge the semantic gap and achieve mutually guided modeling of the two. In the proposed hybrid framework, convolution and Self-Attention are no longer modeled independently; instead, the proposed Mutual Transposed Cross Attention (MTCA) makes the two mutually dependent, thereby strengthening the joint modeling of local and global information. Further, we propose the Bidirectional Injection Module (BIM), which lets the global and local features adapt to each other in parallel before fusion and greatly reduces the interference that the semantic gap causes during fusion. The proposed method is evaluated qualitatively and quantitatively on multiple benchmark datasets, and extensive experiments show that it reaches state-of-the-art performance at low computational cost.
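
The abstract names MTCA but does not give its formulation, so the following is only a minimal sketch under stated assumptions, not the authors' implementation. It assumes that "transposed" means attention is computed across channels rather than spatial positions (as in transposed-attention designs such as Restormer), and that "mutual" means each branch uses its own features as queries against the other branch's features. Learned projections, multiple heads and any temperature scaling are omitted, and all function names are hypothetical.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m):
    """Swap rows and columns of a matrix given as a list of rows."""
    return [list(col) for col in zip(*m)]


def l2_normalize_rows(m, eps=1e-12):
    """Scale every row to unit L2 norm so channel similarities stay bounded."""
    out = []
    for row in m:
        norm = math.sqrt(sum(v * v for v in row))
        out.append([v / max(norm, eps) for v in row])
    return out


def softmax_rows(m):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def transposed_cross_attention(query_feat, context_feat):
    """Channel-wise cross attention (assumed form).

    Both inputs are C x N matrices (channels x flattened pixels).
    The attention map is C x C, so its size does not grow with image size.
    """
    q = l2_normalize_rows(query_feat)
    k = l2_normalize_rows(context_feat)
    attn = softmax_rows(matmul(q, transpose(k)))  # C x C channel affinities
    return matmul(attn, context_feat)             # C x N re-weighted context


def mutual_transposed_cross_attention(local_feat, global_feat):
    """Each branch queries the other, so neither is modeled independently."""
    local_out = transposed_cross_attention(local_feat, global_feat)
    global_out = transposed_cross_attention(global_feat, local_feat)
    return local_out, global_out
```

Because the attention map is taken over channels, its cost in this sketch scales with the square of the channel count rather than the square of the pixel count, which is one plausible reading of the low computational cost claimed above.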

Data Availability

Data sharing is not applicable to this article, as no datasets were generated or analyzed during the current study.

Acknowledgements

The authors are greatly indebted to the anonymous referees for their critical comments and suggestions for improving this paper. This work was supported by the National Key Research and Development Program of China (2021YFA1000102), and in part by grants from the National Natural Science Foundation of China (Nos. 62376285, 62272375, 61673396) and the Natural Science Foundation of Shandong Province, China (No. ZR2022MF260).

Ethics declarations

Conflicts of interest

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Cheng, Y., Shao, M. & Wan, Y. Mutually guided learning of global semantics and local representations for image restoration. Multimed Tools Appl 83, 30019–30044 (2024). https://doi.org/10.1007/s11042-023-16724-9

DOI: https://doi.org/10.1007/s11042-023-16724-9