Abstract

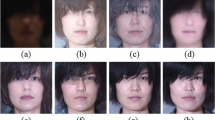

In real-world long-range surveillance systems, thermal face images captured from a distance suffer from low resolution and noise, which makes thermal-to-visible face image translation challenging. Existing methods assume that thermal and visible images have similar resolutions and are noise-free, which limits their applicability. To address these issues, we propose the Generative Facial Prior Embedded Degradation Adaption Network (GDANet), which synthesizes high-quality visible images from low-quality thermal images. GDANet combines pretrained Generative Adversarial Network (GAN) blocks with a U-shaped deep neural network (DNN) to incorporate faithful facial priors, including geometry, facial textures, and colors. In addition, we develop an unsupervised degradation representation learning scheme that captures abstract representations of the degradations in thermal images within a learned representation space. Using these degradation representations, GDANet adapts its spatial features through degradation-aware feature fusion (DAFF) blocks, which balance fidelity against texture faithfulness. Experimental results show that GDANet outperforms state-of-the-art methods, demonstrating its effectiveness on real-world low-quality thermal images in diverse practical applications.
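The abstract names three mechanisms (a degraded thermal input, unsupervised degradation representation learning, and degradation-aware feature fusion) without giving their equations. The NumPy sketch below illustrates one plausible reading of each under our own assumptions; it is not GDANet's implementation. Specifically, the degradation model (average-pool downsampling plus additive Gaussian noise), the InfoNCE contrastive loss used for the degradation representation (a MoCo-style objective swapped in here because the abstract does not name the loss), and the fusion rule (per-channel scale/shift modulation of decoder features followed by a sigmoid gate against GAN-prior features) are all hypothetical, as are the names `degrade`, `info_nce`, `daff`, `w_gamma`, `w_beta`, and `w_gate`.

```python
import numpy as np


def degrade(img, scale=4, noise_sigma=0.05, seed=0):
    """Assumed degradation model: scale-x average-pool downsampling plus
    additive Gaussian noise, mimicking a distant, noisy thermal capture."""
    rng = np.random.default_rng(seed)
    h, w = img.shape[0] // scale, img.shape[1] // scale
    lr = img[: h * scale, : w * scale].reshape(h, scale, w, scale).mean(axis=(1, 3))
    return lr + rng.normal(0.0, noise_sigma, lr.shape)


def info_nce(query, positive, negatives, tau=0.07):
    """InfoNCE loss for one query embedding (hypothetical choice of objective).

    query, positive: (D,) embeddings of two patches sharing one degradation.
    negatives: (N, D) embeddings of patches with other degradations."""
    def l2n(v):
        return v / np.linalg.norm(v, axis=-1, keepdims=True)

    q, k_pos, k_neg = l2n(query), l2n(positive), l2n(negatives)
    logits = np.concatenate(([q @ k_pos], k_neg @ q)) / tau
    logits = logits - logits.max()  # stabilise exp(); the loss is shift-invariant
    return float(np.log(np.exp(logits).sum()) - logits[0])


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def daff(decoder_feat, prior_feat, degr_embed, w_gamma, w_beta, w_gate):
    """Hypothetical degradation-aware fusion of two (C, H, W) feature maps.

    1. Modulate the decoder (fidelity) features with a per-channel scale and
       shift predicted from the degradation embedding.
    2. Blend the result with the GAN-prior (texture) features through a
       per-channel gate in (0, 1), also predicted from the embedding.
    w_gamma, w_beta, w_gate: (C, D) projections (would be learned in practice)."""
    gamma = (w_gamma @ degr_embed)[:, None, None]
    beta = (w_beta @ degr_embed)[:, None, None]
    gate = sigmoid(w_gate @ degr_embed)[:, None, None]
    modulated = decoder_feat * (1.0 + gamma) + beta
    return gate * prior_feat + (1.0 - gate) * modulated


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    thermal = rng.random((128, 128))
    print(degrade(thermal).shape)  # (32, 32)

    d = 16
    q = rng.normal(size=d)
    # Low loss: the positive is nearly identical to the query.
    print(info_nce(q, q + 0.01 * rng.normal(size=d), rng.normal(size=(8, d))))

    c = 4
    out = daff(rng.normal(size=(c, 8, 8)), rng.normal(size=(c, 8, 8)),
               rng.normal(size=d), *(rng.normal(size=(c, d)) for _ in range(3)))
    print(out.shape)  # (4, 8, 8)
```

In this sketch the gate makes the fidelity/texture trade-off explicit: a gate near 1 passes the GAN-prior textures through, while a gate near 0 keeps the degradation-modulated decoder features, so inputs with different degradations can be steered toward different mixes.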

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Acknowledgements

The authors would like to thank Zi Teng and Chuanjiang Leng for helpful discussions and fruitful feedback along the way.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, H., Chi, J., Li, X. et al. Generative facial prior embedded degradation adaption network for heterogeneous face hallucination. Multimed Tools Appl 83, 43955–43981 (2024). https://doi.org/10.1007/s11042-023-16932-3