Abstract

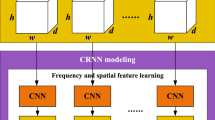

In EEG-based emotion recognition, finding EEG representations that preserve both temporal and spatial features is crucial. This study aims to identify EEG representations that are both discriminative and robust to differences between subjects. We convert EEG data into 3D feature image sequences, which fully preserve the spatial, spectral and temporal structure of the EEG signal. However, existing models ignore the complementarity among spatial, spectral and temporal features, which limits their classification ability to some extent. Therefore, this paper proposes the Temporal Shift Residual Network (TSM-ResNet), based on feature image sequences, for EEG emotion recognition. It uses the Temporal Shift Module (TSM), an efficient, high-performance temporal modeling module that shifts a subset of the feature-map channels along the time dimension so that information is exchanged between adjacent frames. Combining feature image sequences that carry multi-domain information with the strong temporal modeling of TSM-ResNet integrates spatial and spectral features while fully accounting for temporal sequence features, without increasing computational cost. The effectiveness of the proposed method is validated on the internationally recognized DEAP dataset, using accuracy, F1 score and the confusion matrix as evaluation metrics. In subject-dependent experiments (ten-fold cross-validation), TSM-ResNet achieves average accuracies of 93.43% for valence and 93.26% for arousal. In subject-independent experiments (leave-one-subject-out cross-validation), it achieves accuracies of 64.91% for valence and 62.52% for arousal. These findings highlight the advantages of the proposed method for both within-subject and cross-subject emotion recognition.
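The channel shift at the core of TSM can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: it follows the shift layout of the original TSM paper (Lin, Gan and Han, ICCV 2019), in which 1/8 of the channels move one frame backward in time, another 1/8 move one frame forward, and vacated positions are zero-padded. The input layout (frames of all sequences stacked as (N·T, C, H, W)), the `fold_div=8` default, and the hypothetical helper `residual_shift_block`, which stands in for a ResNet residual branch using a single 1×1 convolution written as a channel-mixing matrix, are assumptions chosen for illustration.

```python
import numpy as np


def temporal_shift(x: np.ndarray, n_segments: int, fold_div: int = 8) -> np.ndarray:
    """TSM-style bidirectional temporal shift (sketch).

    x          -- frames of N sequences stacked as (N * T, C, H, W), the layout a 2D CNN sees
    n_segments -- T, the number of frames per sequence
    fold_div   -- 1/fold_div of the channels shift one way in time, another 1/fold_div the other way
    """
    nt, c, h, w = x.shape
    if nt % n_segments:
        raise ValueError("batch size must be a multiple of n_segments")
    x = x.reshape(nt // n_segments, n_segments, c, h, w)
    fold = c // fold_div
    out = np.zeros_like(x)  # boundary frames are zero-padded
    # channels [0, fold): frame t receives frame t+1 (shift backward in time)
    out[:, :-1, :fold] = x[:, 1:, :fold]
    # channels [fold, 2*fold): frame t receives frame t-1 (shift forward in time)
    out[:, 1:, fold:2 * fold] = x[:, :-1, fold:2 * fold]
    # remaining channels stay in place
    out[:, :, 2 * fold:] = x[:, :, 2 * fold:]
    return out.reshape(nt, c, h, w)


def residual_shift_block(x: np.ndarray, weight: np.ndarray, n_segments: int,
                         fold_div: int = 8) -> np.ndarray:
    """Hypothetical residual block: shift -> 1x1 conv -> add identity -> ReLU.

    weight -- (C, C) matrix standing in for a 1x1 convolution on the residual branch
    """
    shifted = temporal_shift(x, n_segments, fold_div)
    branch = np.einsum("oc,nchw->nohw", weight, shifted)
    # the identity shortcut carries the unshifted spatial-spectral features
    return np.maximum(x + branch, 0.0)


# Toy input: one sequence of 3 frames, 8 channels, 1x1 "images"; value = 8 * frame + channel
x = np.arange(3 * 8, dtype=float).reshape(3, 8, 1, 1)
y = temporal_shift(x, n_segments=3)
print(y[:, :2, 0, 0])  # channels 0 and 1 per frame: [[8, 0], [16, 1], [0, 9]]
```

Because the shift only moves data and has no weights, it adds no parameters or multiply-adds; temporal mixing is carried out by the convolutions the 2D backbone already has. Placing the shift inside the residual branch leaves the identity path untouched, so each frame keeps its own features while also seeing its neighbours.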

Data availability

Publicly available datasets were analyzed in this study. These data can be found at:

DEAP dataset: http://www.eecs.qmul.ac.uk/mmv/datasets/deap/index.html

References

Doma V, Pirouz M (2020) A comparative analysis of machine learning methods for emotion recognition using EEG and peripheral physiological signals. J Big Data 7(1):1–21. https://doi.org/10.1186/s40537-020-00289-7

Pan C, Shi C, Mu H, Li J, Gao X (2020) EEG-based emotion recognition using logistic regression with Gaussian kernel and Laplacian prior and investigation of critical frequency bands. Appl Sci 10(5):1619. https://doi.org/10.3390/app10051619

Egger M, Ley M, Hanke S (2019) Emotion recognition from physiological signal analysis: A review. Electronic Notes Theoret Comput Sci 343:35–55. https://doi.org/10.1016/j.entcs.2019.04.009

Zheng WL, Lu BL (2015) Investigating critical frequency bands and channels for EEG-based emotion recognition with deep neural networks. IEEE Trans Autonomous Mental Dev 7(3):162–175. https://doi.org/10.1109/TAMD.2015.2431497

Bhatti AM, Majid M, Anwar SM, Khan B (2016) Human emotion recognition and analysis in response to audio music using brain signals. Comput Human Behavior 65:267–275. https://doi.org/10.1016/j.chb.2016.08.029

Shahabi H, Moghimi S (2016) Toward automatic detection of brain responses to emotional music through analysis of EEG effective connectivity. Comput Human Behavior 58:231–239. https://doi.org/10.1016/j.chb.2016.01.005

Soroush MZ, Maghooli K, Setarehdan SK, Nasrabadi AM (2020) Emotion recognition using EEG phase space dynamics and Poincare intersections. Biomed Signal Process Control 59:101918. https://doi.org/10.1016/j.bspc.2020.101918

Algarni M, Saeed F, Al-Hadhrami T, Ghabban F, Al-Sarem M (2022) Deep learning-based approach for emotion recognition using electroencephalography (EEG) signals using Bi-directional long short-term memory (Bi-LSTM). Sensors 22(8):2976. https://doi.org/10.3390/s22082976

Zhang Y, Zhang S, Ji X (2018) EEG-based classification of emotions using empirical mode decomposition and autoregressive model. Multimed Tools Appl 77:26697–26710. https://doi.org/10.1007/s11042-018-5885-9

Yin Y, Zheng X, Hu B, Zhang Y, Cui X (2021) EEG emotion recognition using fusion model of graph convolutional neural networks and LSTM. Appl Soft Comput 100:106954. https://doi.org/10.1016/j.asoc.2020.106954

Chao H, Dong L, Liu Y, Lu B (2019) Emotion recognition from multiband EEG signals using CapsNet. Sensors 19(9):2212. https://doi.org/10.3390/s19092212

Roy Y, Banville H, Albuquerque I, Gramfort A, Falk TH, Faubert J (2019) Deep learning-based electroencephalography analysis: a systematic review. J Neural Eng 16(5):051001. https://doi.org/10.1088/1741-2552

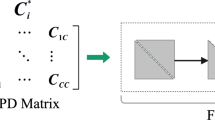

Wang Y, Qiu S, Ma X, He H (2021) A prototype-based SPD matrix network for domain adaptation EEG emotion recognition. Pattern Recog 110:107626. https://doi.org/10.1016/j.patcog.2020.107626

Lin J, Gan C, Han S (2019) Tsm: Temporal shift module for efficient video understanding. In Proceedings of the IEEE/CVF international conference on computer vision (pp. 7083-7093). https://doi.org/10.48550/arXiv.1811.08383

Özerdem MS, Polat H (2017) Emotion recognition based on EEG features in movie clips with channel selection. Brain informatics 4(4):241–252. https://doi.org/10.1007/s40708-017-0069-3

Cui H, Liu A, Zhang X, Chen X, Wang K, Chen X (2020) EEG-based emotion recognition using an end-to-end regional-asymmetric convolutional neural network. Knowledge-Based Syst 205:106243. https://doi.org/10.1016/j.knosys.2020.106243

Liu J, Su Y, Liu Y (2018) Multi-modal emotion recognition with temporal-band attention based on LSTM-RNN. In Advances in Multimedia Information Processing–PCM 2017: 18th Pacific-Rim Conference on Multimedia, Harbin, China, September 28-29, 2017, Revised Selected Papers, Part I 18 (pp. 194-204). Springer International Publishing. https://doi.org/10.1007/978-3-319-77380-3_19

Jia Z, Lin Y, Cai X, Chen H, Gou H, Wang J (2020) Sst-emotionnet: Spatial-spectral-temporal based attention 3d dense network for eeg emotion recognition. In Proceedings of the 28th ACM international conference on multimedia (pp. 2909-2917). https://doi.org/10.1145/3394171.3413724

Liu S, Wang X, Zhao L, Li B, Hu W, Yu J, Zhang YD (2021) 3DCANN: A spatio-temporal convolution attention neural network for EEG emotion recognition. IEEE J Biomed Health Inform 26(11):5321–5331. https://doi.org/10.1109/JBHI.2021.3083525

Huang D, Chen S, Liu C, Zheng L, Tian Z, Jiang D (2021) Differences first in asymmetric brain: A bi-hemisphere discrepancy convolutional neural network for EEG emotion recognition. Neurocomputing 448:140–151. https://doi.org/10.1016/j.neucom.2021.03.105

Pandey P, Seeja KR (2019) Subject independent emotion recognition from EEG using VMD and deep learning. J King Saud Univ -Comput Inform Sciences. https://doi.org/10.1016/j.jksuci.2019.11.003

Yang Y, Wu Q, Qiu M, Wang Y, Chen X (2018) Emotion Recognition from Multi-Channel EEG through Parallel Convolutional Recurrent Neural Network. Proc IEEE Int Conf Joint Conf Neural Networks 2018

Nath D, Anubhav, Singh M, Sethia D, Kalra D, & Indu S (2020) A comparative study of subject-dependent and subject-independent strategies for EEG-based emotion recognition using LSTM network. In Proceedings of the 2020 the 4th International Conference on Compute and Data Analysis (pp. 142-147)

Rajpoot AS, Panicker MR (2022) Subject independent emotion recognition using EEG signals employing attention driven neural networks. Biomed Signal Process Control 75:103547. https://doi.org/10.1016/j.bspc.2022.103547

Liu S, Wang Z, An Y, Zhao J, Zhao Y, Zhang YD (2023) EEG emotion recognition based on the attention mechanism and pre-trained convolution capsule network. Knowledge-Based Syst 265:110372. https://doi.org/10.1016/j.knosys.2023.110372

Hernandez-Pavon JC, Kugiumtzis D, Zrenner C, Kimiskidis VK, Metsomaa J (2022) Removing artifacts from TMS-evoked EEG: A methods review and a unifying theoretical framework. J Neurosci Methods 109591. https://doi.org/10.1016/j.jneumeth.2022.109591

Grobbelaar M, Phadikar S, Ghaderpour E, Struck AF, Sinha N, Ghosh R, Ahmed MZI (2022) A Survey on Denoising Techniques of Electroencephalogram Signals Using Wavelet Transform. Signals 3(3):577–586. https://doi.org/10.3390/signals3030035

Shi LC, Jiao YY, Lu BL (2013) Differential entropy feature for EEG-based vigilance estimation. In 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (pp. 6627-6630). IEEE. https://doi.org/10.1109/EMBC.2013.6611075

Chen DW, Miao R, Yang WQ, Liang Y, Chen HH, Huang L, … Han N (2019) A feature extraction method based on differential entropy and linear discriminant analysis for emotion recognition. Sensors 19(7):1631. https://doi.org/10.3390/s19071631

Zheng WL, Dong BN, Lu BL (2014) Multimodal emotion recognition using EEG and eye tracking data. In 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (pp. 5040-5043). IEEE. https://doi.org/10.1109/EMBC.2014.6944757

He K, Zhang X, Ren S, Sun J (2016). Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 770-778). https://doi.org/10.48550/arXiv.1512.03385

Koelstra S, Muhl C, Soleymani M, Lee JS, Yazdani A, Ebrahimi T, … Patras I (2011) Deap: A database for emotion analysis; using physiological signals. IEEE Trans Affect Comput 3(1):18 31. https://doi.org/10.1109/T-AFFC.2011.15

Zheng WL, Lu BL (2016) Personalizing EEG-based affective models with transfer learning. In Proceedings of the twenty-fifth international joint conference on artificial intelligence (pp. 2732-2738). https://doi.org/10.5555/3060832.3061003

Atkinson J, Campos D (2016) Improving BCI-based emotion recognition by combining EEG feature selection and kernel classifiers. Expert Syst Appl 47:35–41. https://doi.org/10.1016/j.eswa.2015.10.049

Sarma P, Barma S (2021) Emotion recognition by distinguishing appropriate EEG segments based on random matrix theory. Biomed Signal Process Control 70:102991. https://doi.org/10.1016/j.bspc.2021.102991

Kim BH, Jo S (2018) Deep physiological affect network for the recognition of human emotions. IEEE Trans Affect Comput 11(2):230–243. https://doi.org/10.1109/TAFFC.2018.2790939

Salama ES, El-Khoribi RA, Shoman ME, Shalaby MAW (2018) EEG-based emotion recognition using 3D convolutional neural networks. Int J Adv Comput Sci Appl 9(8). https://doi.org/10.14569/IJACSA.2018.090843

Yin Y, Zheng X, Hu B, Zhang Y, Cui X (2021) EEG emotion recognition using fusion model of graph convolutional neural networks and LSTM. Appl Soft Comput 100:106954. https://doi.org/10.1016/j.asoc.2020.106954

Yang Y, Wu Q, Qiu M, Wang Y, Chen X (2018) Emotion recognition from multi-channel EEG through parallel convolutional recurrent neural network. In 2018 international joint conference on neural networks (IJCNN) (pp. 1-7). IEEE. https://doi.org/10.1109/IJCNN.2018.8489331

Singh U, Shaw R, Patra BK (2023) A data augmentation and channel selection technique for grading human emotions on DEAP dataset. Biomed Signal Process Control 79:104060. https://doi.org/10.1016/j.bspc.2022.104060

Ma J, Tang H, Zheng WL, Lu BL (2019). Emotion recognition using multimodal residual LSTM network. In Proceedings of the 27th ACM international conference on multimedia (pp. 176-183). https://doi.org/10.1145/3343031.3350871

Kunjan S, Grummett TS, Pope KJ, Powers DM, Fitzgibbon SP, Bastiampillai T, ..., Lewis TW (2021) The necessity of leave one subject out (LOSO) cross validation for EEG disease diagnosis. In Brain Informatics: 14th International Conference, BI 2021, Virtual Event, September 17–19, 2021, Proceedings 14 (pp. 558-567). Springer International Publishing. https://doi.org/10.1007/978-3-030-86993-9_50

Chen JX, Jiang DM, Zhang YN (2019) A hierarchical bidirectional GRU model with attention for EEG-based emotion classification. IEEE Access 7:118530–118540. https://doi.org/10.1109/ACCESS.2019.2936817

Liang Z, Oba S, Ishii S (2019) An unsupervised EEG decoding system for human emotion recognition. Neural Networks 116:257–268. https://doi.org/10.1016/j.neunet.2019.04.003

Rayatdoost S, Soleymani M (2018) Cross-corpus EEG-based emotion recognition. In 2018 IEEE 28th international workshop on machine learning for signal processing (MLSP) (pp. 1-6). IEEE. https://doi.org/10.1109/MLSP.2018.8517037

Pandey P, Seeja KR (2022) Subject independent emotion recognition from EEG using VMD and deep learning. J King Saud Univ-Comput Inform Sci 34(5):1730–1738. https://doi.org/10.1016/j.jksuci.2019.11.003

Funding statement

This work was supported by the National Natural Science Foundation of China (61300098), the Natural Science Foundation of Heilongjiang Province (F201347), and the Fundamental Research Funds for the Central Universities (2572015DY07).

Financial interests

The authors declare they have no financial interests.

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by Yu Chen, Haopeng Zhang, Jun Long and Yining Xie. The first draft of the manuscript was written by Haopeng Zhang and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflicts of interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chen, Y., Zhang, H., Long, J. et al. Temporal shift residual network for EEG-based emotion recognition: A 3D feature image sequence approach. Multimed Tools Appl 83, 45739–45759 (2024). https://doi.org/10.1007/s11042-023-17142-7

DOI: https://doi.org/10.1007/s11042-023-17142-7