Abstract

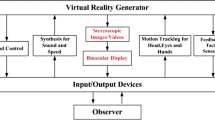

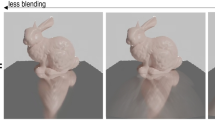

Previous 2D video coding standards compress traditional 2D color images efficiently. However, with the recent introduction of new services such as virtual reality (VR), augmented reality (AR), and mixed reality (MR), an immersive video coding standard that compresses view information captured at many viewpoints is being actively developed to provide a highly immersive experience. This standard generates patches, which represent the non-overlapping areas among different views. In general, the patches strongly affect the rendering of specular areas in virtual viewpoints, but it is very difficult to find them accurately. Therefore, this paper proposes an efficient immersive video coding method that uses specular detection to generate additional specular patches for high rendering quality. Experimental results demonstrate that the proposed method improves the rendering quality in terms of specularity with a negligible change in coding performance. In particular, subjective assessments clearly show the effectiveness of the proposed method.

Data availability

Data sharing is not applicable to this article, as no datasets were generated or analyzed during the current study.

Acknowledgements

This work was supported in part by the Institute of Information & communications Technology Planning & Evaluation (IITP) grant funded by the Korea government (MSIT) (IITP-2024-RS-2022-00156345, IITP-2017-0-00072) and in part by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (RS-2023-00219051).

Ethics declarations

Competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

This article has not been published elsewhere and has not been submitted simultaneously for publication elsewhere.

About this article

Cite this article

Choi, Y., Van Le, T., Bang, G. et al. Efficient immersive video coding using specular detection for high rendering quality. Multimed Tools Appl 83, 81091–81105 (2024). https://doi.org/10.1007/s11042-024-18815-7
