Abstract

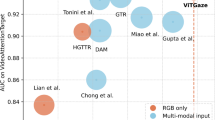

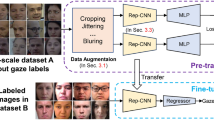

Existing gaze estimation methods with multi-branch structures significantly improve accuracy, but at the cost of extra training overhead and slow inference. In this paper, we propose a hybrid model that combines online re-parameterization structures with improved transformer encoders for precise and efficient gaze estimation, significantly reducing training requirements while accelerating inference. Our multi-branch model employs online re-parameterization structures to extract multi-scale gaze-related features, and these branches can be equivalently transformed into a single-branch model during both training and inference, yielding significant savings in training and inference cost. Moreover, we employ transformer encoders to enhance the global correlation of gaze-related features. Conventional position embeddings slow down encoder inference, but removing them degrades performance. To offset this degradation, we replace the conventional position embeddings with zero-padding position embeddings, which enable the encoders to learn absolute position information without introducing additional inference cost. Experimental results demonstrate that the proposed model achieves improved performance on multiple datasets while reducing training time by 57% and memory usage by 36%, and accelerating inference by 26%.
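The claim that a multi-branch block can be collapsed into a single branch rests on linearity: if each branch is a convolution (or the identity) followed only by linear scaling, the sum of the branch outputs equals one convolution whose kernel is the scaled sum of the branch kernels. The following is a minimal pure-Python sketch under stated assumptions, not the authors' block: single-channel feature maps, stride 1, zero "same" padding, and three hypothetical branches (3×3 conv, 1×1 conv, identity), each with a learnable scale standing in for the linear scaling layers that online re-parameterization uses in place of per-branch batch normalization. All function and parameter names are illustrative.

```python
from typing import List, Tuple

Matrix = List[List[float]]  # single-channel feature map or kernel (rows of floats)


def conv2d_same(x: Matrix, k: Matrix, bias: float = 0.0) -> Matrix:
    """Stride-1 2D cross-correlation (what CNN layers call convolution) with zero
    padding, so the output keeps the input's height and width. Kernel sides must be odd."""
    h, w = len(x), len(x[0])
    kh, kw = len(k), len(k[0])
    ph, pw = kh // 2, kw // 2
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            acc = bias
            for u in range(kh):
                for v in range(kw):
                    r, c = i + u - ph, j + v - pw
                    if 0 <= r < h and 0 <= c < w:  # positions outside x count as zeros
                        acc += k[u][v] * x[r][c]
            row.append(acc)
        out.append(row)
    return out


def center_in_3x3(value: float) -> Matrix:
    """Embed a 1x1 kernel at the centre of a 3x3 kernel (zeros elsewhere)."""
    k = [[0.0] * 3 for _ in range(3)]
    k[1][1] = value
    return k


def identity_3x3() -> Matrix:
    """A 3x3 kernel that reproduces its input: the identity branch as a convolution."""
    return center_in_3x3(1.0)


def add_scaled(acc: Matrix, k: Matrix, s: float) -> Matrix:
    """Return acc + s * k element-wise."""
    return [[a + s * b for a, b in zip(ra, rb)] for ra, rb in zip(acc, k)]


def multi_branch_forward(x, k3, b3, s3, k1, b1, s1, s_id) -> Matrix:
    """Hypothetical block: s3*(3x3 conv) + s1*(1x1 conv) + s_id*identity.
    The per-branch scales are assumed linear scaling layers (no batch norm)."""
    y3 = conv2d_same(x, k3, b3)
    y1 = conv2d_same(x, [[k1]], b1)
    return [[s3 * y3[i][j] + s1 * y1[i][j] + s_id * x[i][j]
             for j in range(len(x[0]))] for i in range(len(x))]


def merge_branches(k3, b3, s3, k1, b1, s1, s_id) -> Tuple[Matrix, float]:
    """Fold all branches into one 3x3 kernel and bias. Exact, because every branch
    is linear in x; the identity branch contributes no bias."""
    merged = [[0.0] * 3 for _ in range(3)]
    merged = add_scaled(merged, k3, s3)
    merged = add_scaled(merged, center_in_3x3(k1), s1)
    merged = add_scaled(merged, identity_3x3(), s_id)
    return merged, s3 * b3 + s1 * b1


# Usage: the multi-branch block and the single merged convolution agree.
x = [[1.0, 2.0, 0.5, -1.0], [0.0, 3.0, 1.5, 2.0], [-2.0, 1.0, 0.0, 4.0]]
params = dict(k3=[[0.1, -0.2, 0.3], [0.0, 0.5, -0.1], [0.2, 0.1, -0.3]],
              b3=0.2, s3=1.5, k1=0.7, b1=-0.1, s1=0.8, s_id=0.5)
y_multi = multi_branch_forward(x, **params)
merged_k, merged_b = merge_branches(**params)
y_single = conv2d_same(x, merged_k, merged_b)
max_err = max(abs(a - b) for ra, rb in zip(y_multi, y_single) for a, b in zip(ra, rb))
print(f"max |multi-branch - merged| = {max_err:.1e}")  # differences are rounding only
```

In an online scheme, the merge would run inside every training forward pass, so only one convolution is evaluated while gradients still reach the branch parameters through the linear merge; offline structural re-parameterization instead trains the full multi-branch block and merges it once before deployment.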


Data Availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Code Availability

The code is available upon reasonable request.


Acknowledgements

This work was supported by the Natural Science Foundation of Jiangsu Province of China (Grant Nos. BK20180594 and BK20231036).


Ethics declarations

Conflict of Interest

The authors declare that there is no conflict of interest related to this paper.


Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Gu, D., Lv, M., Liu, J. et al. Highly efficient gaze estimation method using online convolutional re-parameterization. Multimed Tools Appl 83, 83867–83887 (2024). https://doi.org/10.1007/s11042-024-18941-2
