Abstract

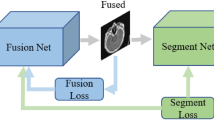

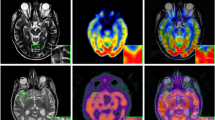

Multimodal image fusion enriches the visual content of medical images by merging the complementary information available in different imaging modalities, which supports faster medical diagnosis. However, fused multimodal images often suffer from issues such as texture and gradient distortion, particularly in the affected region. To address this, this work designs Dense-ResNet, a hybrid deep learning model for fusing medical images from different modalities. Images from three modalities are first collected, and each is pre-processed individually with a median filter. Each pre-processed image is then transformed from the spatial domain into the spectral domain using the Dual-Tree Complex Wavelet Transform (DTCWT), and the transformed image is segmented using the Edge-Attention Guidance Network (ET-Net). Finally, the segmented images are fused using the Dense-ResNet model. Experimental validation shows that Dense-ResNet outperforms existing multimodal medical image fusion approaches, achieving a Mean Square Error (MSE) of 0.402, a Root Mean Square Error (RMSE) of 0.634, and a Peak Signal to Noise Ratio (PSNR) of 47.136 dB.
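As a rough illustration of two conventional steps named in the abstract, the sketch below implements a median filter for pre-processing and the MSE, RMSE, and PSNR metrics used for evaluation. It is a minimal pure-Python sketch, not the authors' implementation: the 3×3 window, edge-replicated borders, and a peak value of 255 for 8-bit images are assumptions (the abstract states none of them), and all function names are hypothetical. Because RMSE is the square root of MSE, the reported values are mutually consistent (sqrt(0.402) ≈ 0.634); PSNR depends on the assumed peak value, so this sketch is not expected to reproduce the reported 47.136 dB.

```python
import math
from statistics import median


def median_filter_3x3(img):
    """Apply a 3x3 median filter to a 2-D grayscale image (list of lists).

    Assumption: border pixels are handled by edge replication; the window
    size and border handling are not specified in the abstract.
    """
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            window = []
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    # Clamp indices so out-of-range neighbours reuse edge pixels.
                    ii = min(max(i + di, 0), h - 1)
                    jj = min(max(j + dj, 0), w - 1)
                    window.append(img[ii][jj])
            out[i][j] = median(window)
    return out


def mse(ref, test):
    """Mean squared error between two equally sized 2-D images."""
    if len(ref) != len(test) or any(len(a) != len(b) for a, b in zip(ref, test)):
        raise ValueError("images must have the same shape")
    diffs = [(a - b) ** 2 for ra, rb in zip(ref, test) for a, b in zip(ra, rb)]
    return sum(diffs) / len(diffs)


def rmse(ref, test):
    """Root mean squared error: the square root of the MSE."""
    return math.sqrt(mse(ref, test))


def psnr(ref, test, peak=255.0):
    """Peak signal-to-noise ratio in dB.

    Assumption: `peak` is the maximum possible pixel value (255 for 8-bit data).
    """
    err = mse(ref, test)
    if err == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / err)


if __name__ == "__main__":
    # A single impulse-noise pixel in a flat 3x3 patch is removed by the filter.
    noisy = [[10, 10, 10], [10, 255, 10], [10, 10, 10]]
    filtered = median_filter_3x3(noisy)
    print(filtered)  # → [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
    print(mse(noisy, filtered), rmse(noisy, filtered), psnr(noisy, filtered))
```

A median filter is a common choice for removing impulse (salt-and-pepper) noise because, unlike a mean filter, a single outlier inside the window does not shift the output value.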

Data Availability

The dataset used in this study is the BRATS 2020 dataset, available at “https://www.kaggle.com/datasets/awsaf49/brats2020-trainingdata?select=BraTS20+Training+Metadata.csv”.

Acknowledgements

I would like to express my sincere appreciation to the co-authors of this manuscript for their valuable and constructive suggestions during the planning and development of this research work.

Funding

This research did not receive any specific funding.

Author information

Contributions

All authors made substantial contributions to the conception and design of the work, revised the manuscript, and approved the final version for publication. All authors also agree to be accountable for all aspects of the work, ensuring that questions related to the accuracy or integrity of any part of it are appropriately investigated and resolved.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Informed consent

Not applicable.

Ethical approval

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ghosh, T., Jayanthi, N. An efficient Dense-Resnet for multimodal image fusion using medical image. Multimed Tools Appl 83, 68181–68208 (2024). https://doi.org/10.1007/s11042-024-18974-7

DOI: https://doi.org/10.1007/s11042-024-18974-7