Abstract

The correlation-based framework has recently been proposed for sparse support recovery in the noiseless case. To solve this framework, the constrained least absolute shrinkage and selection operator (LASSO) was employed, and the regularization parameter in the constrained LASSO was found to be key to the recovery. This paper discusses sparse support recoverability via the framework, and the adjustment of the regularization parameter, in the noisy case. The main contribution is a set of noise-related conditions that guarantee sparse support recovery. We point out that candidates for the regularization parameter taken from a noise-related region achieve the desired recovery, and that the effect of the noise cannot be ignored. When the number of samples is finite, sparse support recoverability is further analyzed by estimating the recovery probability for a fixed regularization parameter in this region. Asymptotic consistency, in the probabilistic sense, is obtained as the number of samples tends to infinity. Simulations are given to demonstrate the validity of our results.
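The correlation-based data model behind the framework can be sketched numerically. This is a minimal illustration under our own assumptions, not the authors' simulation code: real-valued unit-norm atoms, uncorrelated zero-mean Gaussian sources, white Gaussian noise of variance σ_ε², and a matrix B whose i-th column is a_i ⊗ a_i (the Khatri-Rao product A ⊙ A). With r̂ the vector of sample source powers and H collecting the remaining cross terms and the noise-estimation error, vec(R̂) = B r̂ + vec(σ_ε² I_M + H), which is our reading of the term h used in Appendix 1. The helper names `khatri_rao_self` and `correlation_model` are hypothetical.

```python
import numpy as np


def khatri_rao_self(A: np.ndarray) -> np.ndarray:
    """Column-wise Kronecker product A ⊙ A: column i is kron(a_i, a_i)."""
    return np.column_stack([np.kron(A[:, i], A[:, i]) for i in range(A.shape[1])])


def correlation_model(A, powers, noise_var, L, rng):
    """Return y = vec(R_hat), B, the sample powers r_hat, and the residual term H."""
    M, N = A.shape
    # Uncorrelated zero-mean Gaussian sources and white Gaussian noise (assumptions).
    X = rng.standard_normal((N, L)) * np.sqrt(powers)[:, None]
    E = rng.standard_normal((M, L)) * np.sqrt(noise_var)
    Y = A @ X + E
    R_hat = Y @ Y.T / L                       # sample correlation matrix
    r_hat = np.mean(X**2, axis=1)             # sample source powers (zero off the support)
    # H gathers source cross terms, signal-noise terms and the noise estimation error.
    H = R_hat - A @ np.diag(r_hat) @ A.T - noise_var * np.eye(M)
    B = khatri_rao_self(A)
    y = R_hat.flatten(order="F")              # column-major vec(.)
    return y, B, r_hat, H


rng = np.random.default_rng(0)
M, N, noise_var = 10, 15, 0.1
A = rng.standard_normal((M, N))
A /= np.linalg.norm(A, axis=0)                # unit-norm atoms
powers = np.zeros(N)
powers[[2, 7, 11]] = [1.0, 1.5, 2.0]          # true support {2, 7, 11}

y, B, r_hat, H = correlation_model(A, powers, noise_var, L=500, rng=rng)
# Khatri-Rao identity: vec(A diag(r) A^T) = B r.
print(np.allclose((A @ np.diag(r_hat) @ A.T).flatten(order="F"), B @ r_hat))
for L in (100, 10_000):
    _, _, _, H_L = correlation_model(A, powers, noise_var, L, rng)
    print(L, np.linalg.norm(H_L))             # Frobenius norm equals ||vec(H)||_2
```

The printed norms of H should shrink roughly like 1/√L; this finite-sample term is what the recovery-probability analysis has to control.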

References

Ariananda, D., & Leus, G. (2012). Compressive wideband power spectrum estimation. IEEE Transactions on Signal Processing, 60, 4775–4789.

Baraniuk, R. (2007). Compressive sensing [lecture notes]. IEEE Signal Processing Magazine, 24, 118–121.

Ben-Haim, Z., Eldar, Y., & Elad, M. (2010). Coherence-based performance guarantees for estimating a sparse vector under random noise. IEEE Transactions on Signal Processing, 58, 5030–5043.

Boyd, S., & Vandenberghe, L. (2004). Convex optimization. Cambridge: Cambridge University Press.

Candes, E., & Wakin, M. (2008). An introduction to compressive sampling. IEEE Signal Processing Magazine, 25, 21–30.

Chen, J., & Huo, X. (2006). Theoretical results on sparse representations of multiple-measurement vectors. IEEE Transactions on Signal Processing, 54, 4634–4643.

Cotter, S., Rao, B., Engan, K., & Kreutz-Delgado, K. (2005). Sparse solutions to linear inverse problems with multiple measurement vectors. IEEE Transactions on Signal Processing, 53, 2477–2488.

Davies, M., & Eldar, Y. (2012). Rank awareness in joint sparse recovery. IEEE Transactions on Information Theory, 58, 1135–1146.

Donoho, D., & Tanner, J. (2005). Sparse nonnegative solution of underdetermined linear equations by linear programming. Proceedings of the National Academy of Sciences, 102, 9446–9451.

Donoho, D., Elad, M., & Temlyakov, V. (2006). Stable recovery of sparse overcomplete representations in the presence of noise. IEEE Transactions on Information Theory, 52, 6–18.

Eldar, Y., & Mishali, M. (2009). Robust recovery of signals from a structured union of subspaces. IEEE Transactions on Information Theory, 55, 5302–5316.

Fuchs, J. (2005). Recovery of exact sparse representations in the presence of bounded noise. IEEE Transactions on Information Theory, 51, 3601–3608.

Grant, M., & Boyd, S. (2014). CVX: Matlab software for disciplined convex programming, version 2.1. http://cvxr.com/cvx.

Haupt, J., Bajwa, W., Raz, G., & Nowak, R. (2010). Toeplitz compressed sensing matrices with applications to sparse channel estimation. IEEE Transactions on Information Theory, 56, 5862–5875.

Jin, Y., & Rao, B. (2013). Support recovery of sparse signals in the presence of multiple measurement vectors. IEEE Transactions on Information Theory, 59, 3139–3157.

Kim, J. M., Lee, O. K., & Ye, J. C. (2012). Compressive MUSIC: Revisiting the link between compressive sensing and array signal processing. IEEE Transactions on Information Theory, 58, 278–301.

Lustig, M., Donoho, D., Santos, J., & Pauly, J. (2008). Compressed sensing MRI. IEEE Signal Processing Magazine, 25, 72–82.

Pal, P., & Vaidyanathan, P. (2010). Nested arrays: A novel approach to array processing with enhanced degrees of freedom. IEEE Transactions on Signal Processing, 58, 4167–4181.

Pal, P., & Vaidyanathan, P. (2015). Pushing the limits of sparse support recovery using correlation information. IEEE Transactions on Signal Processing, 63, 711–726.

Slawski, M., & Hein, M. (2012). Non-negative least squares for high-dimensional linear models: Consistency and sparse recovery without regularization. arXiv:1205.0953.

Tan, Z., Eldar, Y., & Nehorai, A. (2014). Direction of arrival estimation using co-prime arrays: A super resolution viewpoint. IEEE Transactions on Signal Processing, 62, 5565–5576.

Tang, G., & Nehorai, A. (2010). Performance analysis for sparse support recovery. IEEE Transactions on Information Theory, 56, 1383–1399.

Tibshirani, R. (2011). Regression shrinkage and selection via the lasso: A retrospective. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 73, 273–282.

Tulino, A., Caire, G., Verdu, S., & Shamai, S. (2013). Support recovery with sparsely sampled free random matrices. IEEE Transactions on Information Theory, 59, 4243–4271.

Vaidyanathan, P., & Pal, P. (2011). Sparse sensing with co-prime samplers and arrays. IEEE Transactions on Signal Processing, 59, 573–586.

Wipf, D., & Rao, B. (2007). An empirical Bayesian strategy for solving the simultaneous sparse approximation problem. IEEE Transactions on Signal Processing, 55, 3704–3716.

Zhao, P., & Yu, B. (2006). On model selection consistency of Lasso. Journal of Machine Learning Research, 7, 2541–2563.

Zheng, J., & Kaveh, M. (2013). Sparse spatial spectral estimation: A covariance fitting algorithm, performance and regularization. IEEE Transactions on Signal Processing, 61, 2767–2777.

Acknowledgments

This work is supported by NSFC (Grant No. 61471174) and Guangzhou Science Research Project (Grant No. 2014J4100247).

Appendices

Appendix 1: Proof of Theorem 1

The following lemma will be used in the proof.

Lemma 1

(Pal and Vaidyanathan 2015, Lemma 4) The mutual coherence of matrix \(\mathbf {A}\) and that of its Khatri-Rao product matrix \(\mathbf {B}\) are related as follows:

Proof of Theorem 1

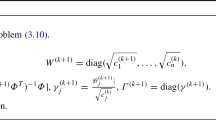

By convex optimization theory (Boyd and Vandenberghe 2004), the Lagrangian function of (8) is

where \(\mathbf {\lambda }(\tau )\in {\mathbb {R}^{N }}\) is the Lagrange multiplier vector associated with the parameter \(\tau \). The Karush-Kuhn-Tucker (KKT) conditions are given by

where \(i=1,\cdots ,N\). From the KKT conditions, it is easy to verify that the conditions \({\mathbf {r}_{{{{\varLambda }'} ^c}}}(\tau ) = \mathbf {0}\) and \({\mathbf {r}_{{\varLambda }'} }(\tau )\succ \mathbf {0}\) are sufficient for \(\mathrm {supp}[\mathbf {r}(\tau )] = {\varLambda }'\) to hold. If \({\varLambda }' \subseteq {{\varLambda }}\), partition the true support into \({{\varLambda }} = \{{\varLambda }' ,{{\varLambda }}\backslash {\varLambda }' \}\) and the nonzero part of the true sparse signal into \({\hat{\mathbf {r}}_{{{\varLambda }}}} = {[{{\hat{\mathbf {r}}^T_{{\varLambda }'} }},{{\hat{\mathbf {r}}^T_{{{\varLambda }}\backslash {{\varLambda }'} }}}]^T}\). Then (13) can be reformulated as

where \(\mathbf {h}={\mathbf {B}_{{{\varLambda }}\backslash {{\varLambda }'} }}{\hat{\mathbf {r}}_{{{\varLambda }}\backslash {{\varLambda }'} } } + \mathrm {vec}(\sigma _\varepsilon ^2{\mathbf {I}_M} + \mathbf {H} )\). From the KKT conditions, the optimal solution must satisfy

and

If \(| {{\varLambda }' }| < \frac{1}{2}\left(1+\frac{1}{\mu _{\mathbf {B}}}\right)\), the columns of \(\mathbf {B}_{{\varLambda }' }\) are linearly independent, so the pseudo-inverse \(\mathbf {B}_{{\varLambda }' } ^ + = {(\mathbf {B}_{{\varLambda }' }^T{\mathbf {B}_{{\varLambda }' }})^{-1}}\mathbf {B}_{{\varLambda }' }^T\) exists (Fuchs 2005). From (17), it follows that

Substituting (16) into (19), we have

Substituting (16) into (18), we obtain

Define

Then, (21) can be rewritten as

Now, from (20) and (22), the conditions

and

imply that \({\mathbf {r}_{{\varLambda }'}}(\tau ) \succ \mathbf {0}\) and \({\mathbf {r}_{{{{\varLambda }'} ^c}}}(\tau ) = \mathbf {0}\), respectively. Using techniques similar to those in the proof of Theorem 4 in Fuchs (2005), together with Lemma 1, we obtain the following conditions

and

which guarantee \(\mathrm {supp}[\mathbf {r}(\tau )] \subseteq {{\varLambda }}\). This completes the first part of the proof.
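The KKT reasoning in the first part of the proof can be checked on a small instance. For illustration only, we assume that (8) has the nonnegative penalized form min over r ⪰ 0 of ½‖y − B r‖₂² + τ 1ᵀr with y = vec(R̂); the exact statement of (8) is not reproduced here. The sketch solves this problem with projected gradient (a solver chosen for self-containment, not necessarily the one used in the paper), then checks that the multiplier λ = Bᵀ(B r − y) + τ 1 for the constraint r ⪰ 0 is nonnegative and vanishes on the estimated support Λ', and that on Λ' the solution equals B_{Λ'}⁺ y − τ (B_{Λ'}ᵀ B_{Λ'})⁻¹ 1, which is what stationarity gives once the pseudo-inverse exists. The helper `nonneg_lasso_pg` is hypothetical, and H is set to zero to keep the example deterministic.

```python
import numpy as np


def nonneg_lasso_pg(B, y, tau, iters=10_000):
    """Projected gradient for min_{r >= 0} 0.5*||y - B r||_2^2 + tau * sum(r)."""
    step = 1.0 / np.linalg.norm(B, 2) ** 2    # 1 / Lipschitz constant of the gradient
    r = np.zeros(B.shape[1])
    for _ in range(iters):
        grad = B.T @ (B @ r - y) + tau
        r = np.maximum(r - step * grad, 0.0)  # projection onto the nonnegative orthant
    return r


rng = np.random.default_rng(1)
M, N, noise_var, tau = 10, 15, 0.1, 0.05
A = rng.standard_normal((M, N))
A /= np.linalg.norm(A, axis=0)
B = np.column_stack([np.kron(a, a) for a in A.T])
r_true = np.zeros(N)
r_true[[2, 7, 11]] = [1.0, 1.5, 2.0]
y = B @ r_true + noise_var * np.eye(M).flatten(order="F")   # H = 0 for simplicity

r = nonneg_lasso_pg(B, y, tau)
S = np.flatnonzero(r > 1e-10)                 # estimated support Lambda'

# KKT multiplier for r >= 0: lam = B^T (B r - y) + tau * 1, lam >= 0, lam_i * r_i = 0.
lam = B.T @ (B @ r - y) + tau
B_S = B[:, S]
gram = B_S.T @ B_S
pinv_S = np.linalg.solve(gram, B_S.T)        # (B_S^T B_S)^{-1} B_S^T
# Stationarity on the support: r_S = B_S^+ y - tau * (B_S^T B_S)^{-1} 1.
r_S = pinv_S @ y - tau * np.linalg.solve(gram, np.ones(S.size))

print("estimated support:", S)
print("min lambda:", lam.min(), " max |lambda on S|:", np.abs(lam[S]).max())
print("closed form matches solver:", np.allclose(r_S, r[S]))
```

Because y contains the σ_ε² vec(I_M) term, the estimated support need not coincide with {2, 7, 11}; whether it does depends on τ, which is the dependence the theorem is concerned with.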

Next, to prove the second part of the theorem, it suffices to verify that (15) guarantees \(\mathrm {supp}[\mathbf {r}(\tau )] = {\varLambda }\) when the regularization parameter

is used. From (20) and (22), one directly has

and \(\mathbf {h} = \mathrm {vec}(\sigma _\varepsilon ^2{\mathbf {I}_M} + \mathbf {H})\). Next, we observe that if atom \(\mathbf {a}\) is normalized, i.e.

then atom \(\mathbf {b}\) is also normalized, since

Using this relationship, we have

Substituting (26) into (24) and (25), one has

and

Substituting (23) into the right-hand sides of (27) and (28), we obtain the following equalities

and

Therefore, the conditions

and

imply that \(\mathrm {supp}[\mathbf {r}(\tau )] = {{\varLambda }}\). This completes the second part of the proof. \(\square \)
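The normalization step and the coherence relation behind Lemma 1 can be checked numerically. The sketch assumes a real-valued A and forms B column by column as b_i = a_i ⊗ a_i; for this construction the mixed-product property gives (a_i ⊗ a_i)ᵀ(a_j ⊗ a_j) = (a_iᵀ a_j)², so ‖b_i‖₂ = ‖a_i‖₂² and the mutual coherence of B is the square of that of A. This illustrates the construction only; it does not reproduce the exact statement of Lemma 1. The helper `mutual_coherence` is hypothetical.

```python
import numpy as np


def mutual_coherence(Phi: np.ndarray) -> float:
    """max over i != j of |<phi_i, phi_j>| / (||phi_i|| ||phi_j||) for the columns of Phi."""
    U = Phi / np.linalg.norm(Phi, axis=0)
    G = np.abs(U.T @ U)
    np.fill_diagonal(G, 0.0)
    return float(G.max())


rng = np.random.default_rng(2)
A = rng.standard_normal((6, 12))
A /= np.linalg.norm(A, axis=0)                      # normalized atoms a_i
B = np.column_stack([np.kron(a, a) for a in A.T])   # b_i = a_i (x) a_i

# ||a (x) a||_2 = ||a||_2^2, so every column of B has unit norm as well.
print(np.linalg.norm(B, axis=0))
# (a_i (x) a_i)^T (a_j (x) a_j) = (a_i^T a_j)^2, hence mu_B = mu_A^2 for this B.
mu_A, mu_B = mutual_coherence(A), mutual_coherence(B)
print(mu_A, mu_B, mu_A**2)
```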

Appendix 2: Proof of Theorem 2

Before proving Theorem 2, we state the following proposition.

Proposition 1

If \(\Vert \mathrm {vec}({\mathbf {H}})\Vert _2 <{\xi }\upsilon /{\eta } \), then the probability of exact sparse support recovery by the constrained LASSO can be estimated as

Proof

Treating (15) as a random event, we directly have

This holds because this event is a sufficient condition for exact support recovery. If \(\Vert \mathrm {vec}(\mathbf {H})\Vert _2 <{\xi }\upsilon /{\eta } \), then

Using techniques similar to those in the proof of Lemma 6 in Pal and Vaidyanathan (2015), it follows that

This completes the proof of Proposition 1. \(\square \)

Proof of Theorem 2

Since the distributions of the signal and the additive noise are unknown, we use Chebyshev's inequality to estimate the probabilities \(\Pr \left[ \hat{r} _{i} >{(\xi +\eta )}\upsilon /{\eta ^2}\right] \) and \(\Pr \left(\left| {{{H}_{mn}}} \right| \ge \xi \upsilon /(M\eta )\right)\).
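The entrywise threshold \(\xi\upsilon/(M\eta)\) connects to the norm condition of Proposition 1 through a union bound. Assuming \(\mathbf{H}\) is \(M\times M\), as the indices \(H_{mn}\) and the factor \(M\) in the threshold suggest, one way to write this step is:

```latex
\[
\|\mathrm{vec}(\mathbf{H})\|_2^2=\sum_{m=1}^{M}\sum_{n=1}^{M}H_{mn}^2\le M^2\max_{m,n}H_{mn}^2
\quad\Longrightarrow\quad
\Bigl(\|\mathrm{vec}(\mathbf{H})\|_2\ge\frac{\xi\upsilon}{\eta}
\;\Rightarrow\;\max_{m,n}|H_{mn}|\ge\frac{\xi\upsilon}{M\eta}\Bigr),
\]
\[
\Pr\!\left(\|\mathrm{vec}(\mathbf{H})\|_2\ge\frac{\xi\upsilon}{\eta}\right)
\le\sum_{m=1}^{M}\sum_{n=1}^{M}\Pr\!\left(|H_{mn}|\ge\frac{\xi\upsilon}{M\eta}\right).
\]
```

Bounding each term on the right with Chebyshev's inequality therefore bounds the probability that the condition of Proposition 1 fails.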

The variance of \({H}_{mn}\) is

Hence, one has

By Chebyshev's inequality \(\Pr [\left| {x - \mathbb {E}(x)} \right| \ge t] \le {\mathbb {D}(x)}/{t^2}\), where \(\mathbb {D}(x)\) denotes the variance of \(x\), we obtain

and

When \(\sigma _i^2 \le (\xi +\eta )\upsilon /{\eta ^2}\), the inequality in (31) is meaningless; hence \(\upsilon < {\eta ^2}\sigma _{\min }^2/(\xi +\eta )\) is necessary. From (30), (31) and Proposition 1, we obtain

which completes the proof of the theorem. \(\square \)
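The Chebyshev step for \(\hat r_i\) can be illustrated by Monte Carlo. Since the paper does not fix the source distribution, we assume for illustration a zero-mean Gaussian source, so that \(\hat r_i = \frac{1}{L}\sum_l x_i(l)^2\) has mean \(\sigma_i^2\) and variance \(2\sigma_i^4/L\). The constants ξ, η and υ below are hypothetical values chosen to satisfy \(\upsilon < \eta^2\sigma_i^2/(\xi+\eta)\), which is exactly what makes the deviation \(t = \sigma_i^2 - (\xi+\eta)\upsilon/\eta^2\) positive.

```python
import numpy as np

rng = np.random.default_rng(3)
sigma2, L, trials = 1.0, 200, 20_000
xi, eta, upsilon = 0.5, 1.0, 0.4              # hypothetical constants
c = (xi + eta) * upsilon / eta**2             # threshold (xi + eta) * upsilon / eta^2
# Chebyshev needs t = sigma2 - c > 0, i.e. upsilon < eta^2 * sigma2 / (xi + eta).
assert upsilon < eta**2 * sigma2 / (xi + eta)

# Sample power r_hat = (1/L) * sum_l x(l)^2 of a zero-mean Gaussian source (assumption).
x = rng.standard_normal((trials, L)) * np.sqrt(sigma2)
r_hat = np.mean(x**2, axis=1)

var_r_hat = 2 * sigma2**2 / L                 # variance of r_hat when x is Gaussian
cheb_bound = var_r_hat / (sigma2 - c) ** 2    # Pr[r_hat <= c] <= Pr[|r_hat - sigma2| >= sigma2 - c]
empirical = np.mean(r_hat <= c)
print(f"empirical {empirical:.5f} <= Chebyshev bound {cheb_bound:.5f}")
```

The empirical failure rate is typically far below the bound; Chebyshev's inequality is loose but needs only the variance, which is why it suits the distribution-free setting of Theorem 2.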

Appendix 3: Proof of Theorem 3

Before proving this theorem, we state three auxiliary lemmas.

Lemma 2

(Haupt et al. 2010, Lemma 6) Let \({x_i}\) and \({y_i}\), \(i = 1, \cdots ,K\), be sequences of i.i.d. zero-mean Gaussian random variables with variances \(\sigma _x^2\) and \(\sigma _y^2\), respectively. Then

Lemma 3

(Tan et al. 2014, Lemma A.2) Let \(x_i, i=1,\cdots ,K\), be a sequence of i.i.d. zero-mean Gaussian random variables with variance \(\sigma ^2\). Then

for \(0\le t \le 4\sigma ^2K\).

Lemma 4

(Pal and Vaidyanathan 2015, Lemma 9) Let \(x_i\), \(i = 1, \cdots ,K\), be a sequence of i.i.d. zero-mean Gaussian random variables with variance \(\sigma _x^2\). Assume \(0< C < \sigma _x^2\). Then there exists a constant \(0<s_0<1/2\) such that \(\Pr \left( \sum \nolimits _{i = 1}^K x_i^2/K > C \right) \ge 1 - {\beta ^{ - K}}\), where \(\beta =(1+2s_0)^{1/2} \exp \left( -Cs_0/\sigma _{x}^2\right) > 1\).
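Lemma 4 is a Chernoff-type bound. One way to see where \(\beta^{-K}\) comes from: for \(s = s_0/\sigma_x^2\), Markov's inequality gives \(\Pr(\sum_i x_i^2 \le KC) \le e^{sKC}\,(\mathbb{E}\,e^{-s x_1^2})^K\), and for Gaussian \(x_1\) we have \(\mathbb{E}\,e^{-s x_1^2} = (1+2s_0)^{-1/2}\), so the right-hand side equals \(\beta^{-K}\). The sketch below picks \(s_0\) on a grid in \((0, 1/2)\), evaluates the bound, and compares it with a Monte Carlo estimate; σ_x², C and K are arbitrary illustrative values.

```python
import numpy as np

sigma2, C, K, trials = 1.0, 0.5, 50, 20_000   # the lemma requires 0 < C < sigma2

# beta(s0) = (1 + 2 s0)^{1/2} * exp(-C s0 / sigma2); choose the best s0 on a grid in (0, 1/2).
s_grid = np.linspace(1e-3, 0.5 - 1e-3, 999)
betas = np.sqrt(1 + 2 * s_grid) * np.exp(-C * s_grid / sigma2)
s0, beta = s_grid[betas.argmax()], betas.max()
lower_bound = 1 - beta ** (-K)               # Pr(sum x_i^2 / K > C) >= 1 - beta^{-K}

rng = np.random.default_rng(4)
x = rng.standard_normal((trials, K)) * np.sqrt(sigma2)
empirical = np.mean(np.mean(x**2, axis=1) > C)
print(f"s0={s0:.3f}, beta={beta:.4f}, bound={lower_bound:.4f}, empirical={empirical:.4f}")
```

Since \(\beta > 1\), the bound tends to 1 exponentially fast in K, which is the mechanism behind the asymptotic consistency stated in the abstract.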

Proof of Theorem 3

The proof is similar to that of Theorem 2. First, we have

For the probability \(\Pr \left( |T_{1}|\ge \xi \upsilon /(4M\eta )\right) \), we note that

where \(x_{\max }^{(1)}(l)\) and \(x_{\max }^{(2)}(l)\) are the largest and second-largest elements of the set \(\{x_i(l)\}_{i\in {\varLambda }}\) for each \(l\). This implies

By Lemma 2, the probability \(\Pr \left( |T_{1}|\ge \xi \upsilon /(4M\eta )\right) \) can be bounded from above as

where

and \(\delta _1=8M\eta \Vert \mathrm {vec}(\mathbf {A})\Vert _{\infty }^{2} |{\varLambda }|(|{\varLambda }| - 1)\sigma _{\max }^{(1)}\sigma _{\max }^{(2)}\). Similarly, we obtain the following results

where \( \delta _2=8 M\eta \Vert \mathrm {vec}(\mathbf {A})\Vert _{\infty }|{\varLambda }|\sigma _{\max }^{(1)}{\sigma _\varepsilon }\) and \(\delta _3 = 8M\eta \sigma _\varepsilon ^2\). From Lemma 3, it follows that

where

Applying Proposition 1 and Lemma 4, we obtain the desired result

which completes the proof of the theorem. \(\square \)

About this article

Cite this article

Fu, Y., Hu, R., Xiang, Y. et al. Sparse support recovery using correlation information in the presence of additive noise. Multidim Syst Sign Process 28, 1443–1461 (2017). https://doi.org/10.1007/s11045-016-0420-5

DOI: https://doi.org/10.1007/s11045-016-0420-5