Abstract

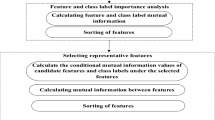

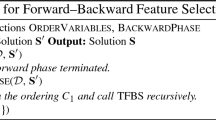

A new filter method is proposed for feature selection and ranking. It uses a novel mutual information with Gaussian gain to evaluate the relationships between the features and the class, and among the features themselves. The new mutual information is derived, following the axioms of classical information theory, from the recently introduced non-extensive entropy with Gaussian gain. A characteristic of this entropy is its non-linearity when representing correlated information in natural texture images, which are represented by sparse probability distributions. In this work, we trace this property in the new mutual information in the context of correlated random variables associated with real-world datasets. The non-linearity of the Gaussian function embedded in the mutual information formula is used to identify the most important features in the correct order of rank at the very outset of the incremental feature selection algorithm. This leads to the formation of smaller ranked feature subsets that give the highest classification accuracies. Extensive experimentation on twenty benchmark datasets from the UCI repository, along with comparison to the state of the art, confirms the efficacy of our approach. An automated selection of the optimum feature subset is also proposed, based on a simple statistical test on the new measure.
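To make the idea of an incremental, mutual-information-based filter concrete, the following is a minimal sketch only. It is not the method of the paper: the abstract does not state the Gaussian-gain formula, so classical Shannon mutual information is used here as a stand-in. The relevance-minus-mean-redundancy score is likewise an assumption chosen for illustration, and the function names and the toy data are hypothetical.

```python
from collections import Counter
from math import log2


def mutual_information(xs, ys):
    """Classical Shannon mutual information I(X;Y), in bits, for two
    discrete sequences of equal length (stand-in for the paper's measure)."""
    n = len(xs)
    px = Counter(xs)
    py = Counter(ys)
    pxy = Counter(zip(xs, ys))
    return sum(
        (c / n) * log2((c / n) / ((px[x] / n) * (py[y] / n)))
        for (x, y), c in pxy.items()
    )


def rank_features(columns, labels, k):
    """Greedy incremental selection (assumed criterion): at each step pick
    the feature with the highest relevance to the class minus its mean
    redundancy with the features already selected."""
    relevance = [mutual_information(col, labels) for col in columns]
    selected = []
    remaining = list(range(len(columns)))
    while remaining and len(selected) < k:

        def score(j):
            if not selected:
                return relevance[j]
            redundancy = sum(
                mutual_information(columns[j], columns[s]) for s in selected
            ) / len(selected)
            return relevance[j] - redundancy

        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


# Hypothetical toy data: a four-class problem with three binary features.
labels = [0, 1, 2, 3, 0, 1, 2, 3]
columns = [
    [0, 0, 1, 1, 0, 0, 1, 1],  # feature 0: upper bit of the class
    [0, 0, 1, 1, 0, 0, 1, 1],  # feature 1: exact duplicate of feature 0
    [0, 1, 0, 1, 0, 1, 0, 1],  # feature 2: lower bit, independent of feature 0
]
ranking = rank_features(columns, labels, k=2)
print(ranking)
```

In this toy case all three features are equally relevant to the class on their own, but the duplicate is penalised for redundancy once feature 0 has been chosen, so the complementary feature 2 is ranked second.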

Cite this article

Susan, S., Hanmandlu, M. Smaller feature subset selection for real-world datasets using a new mutual information with Gaussian gain. Multidim Syst Sign Process 30, 1469–1488 (2019). https://doi.org/10.1007/s11045-018-0612-2

DOI: https://doi.org/10.1007/s11045-018-0612-2