Abstract

Clustering algorithms have been applied to different problems in many real-world applications. Nevertheless, each algorithm has its own advantages and drawbacks, which can result in different solutions for the same problem. Therefore, the combination of different clustering algorithms (cluster ensembles) has emerged as an attempt to overcome the limitations of each clustering technique. The use of cluster ensembles aims to combine multiple partitions generated by different clustering algorithms into a single clustering solution (consensus partition). Recently, several approaches have been proposed in the literature to optimize or continuously improve the solutions found by cluster ensembles. As a contribution to this important subject, this paper presents an investigation of five bio-inspired techniques in the optimization of cluster ensembles (Genetic Algorithms, Particle Swarm Optimization, Ant Colony Optimization, Coral Reefs Optimization and Bee Colony Optimization). In this investigation, unlike most of the existing work, an evaluation methodology is presented that assesses three important aspects of cluster ensembles: robustness, novelty and stability of the consensus partition delivered by different optimization algorithms. In order to evaluate the feasibility of the analyzed techniques, an empirical analysis is conducted using 20 different problems and applying two different indexes to examine their efficiency and feasibility. Our findings indicated that the best population-based optimization method was PSO, followed by CRO, GA, BCO and ACO, for providing robust and stable consensus partitions.


1 Introduction

Data clustering employs the idea of gathering similar objects in a specific group, taking into consideration one or more common features shared by them (Aggarwal and Reddy 2013). There is a huge variety of clustering algorithms proposed over the years which have been successfully applied in different application domains. However, data clustering techniques have some drawbacks. The idea of combining different clustering algorithms has emerged as an attempt to overcome the limitations of the individual techniques, since these techniques tend to provide complementary information about the problem to be solved. Systems that combine several different clustering methods are called Cluster Ensembles (Fred and Lourenço 2008).

In cluster ensembles, the goal is to find a consensus partition, taking as a basis the partitions provided by clustering algorithms (individual clustering) (Fred and Lourenço 2008). Although cluster ensembles are more accurate than individual clustering algorithms, these systems do not always perform well. This is because the application domain and the clustering algorithms composing an ensemble can influence the final output of a cluster ensemble. One way to improve the efficiency of cluster ensembles is through the use of optimization techniques. The optimization of cluster ensembles aims to improve the stability and robustness of the final partition of an ensemble (Chatterjee and Mukhopadhyay 2013).

In this context, optimization techniques can follow two different approaches. The first approach is the generation of the initial partitions of an ensemble. In this case, the optimization techniques provide the initial partitions and a traditional combination method is used to combine these initial partitions to compose a consensus partition (Everitt et al. 2011). The second approach works in the opposite direction, focusing on the consensus partition, which is the combination of the initial partitions (Nisha et al. 2013; Sulaiman et al. 2009; Zhong et al. 2015). In other words, the optimization techniques are responsible for generating a consensus partition, based on a set of initial partitions provided by clustering algorithms. This paper will focus on the second approach, more specifically, applying five bio-inspired optimization techniques to provide the consensus partition for cluster ensembles.

In this paper, we evaluate the use of five population-based bio-inspired optimization techniques in the optimization of cluster ensemble consensus partitions: Genetic Algorithms, Particle Swarm Optimization, Ant Colony Optimization, Coral Reefs Optimization and Bee Colony Optimization. The main aim of this investigation is to assess these optimization algorithms not only in terms of performance, but also in terms of three important aspects of cluster ensembles: robustness (obtaining a more robust consensus partition than the initial partitions), novelty (achieving an original consensus partition, which cannot be individually obtained from any algorithm) and stability (finding solutions of clusters with less sensitivity to noise, outliers, sampling variations or algorithm variance). An additional objective of this paper is to investigate the use of a new population-based bio-inspired technique, the Coral Reefs Optimization (CRO) algorithm, in the context of cluster ensembles. This optimization algorithm was recently proposed by Salcedo-Sanz et al. (2014) and applied to problems in the mobile network field (Salcedo-Sanz et al. 2014) and sustainable energy (Salcedo-Sanz et al. 2014a, 2015), achieving good performance. The CRO algorithm was also applied to data clustering problems as an individual algorithm in Medeiros et al. (2015). As a consequence of the promising results obtained in Medeiros et al. (2015), we used the original CRO algorithm for cluster ensembles in Silva et al. (2016), along with three different modifications of the CRO algorithm proposed by the authors. In this paper, we extend the work of Silva et al. (2016), investigating more extensively the performance of different bio-inspired techniques, not only CRO, in optimizing the consensus partitions. Therefore, there are two main contributions of this paper, which are:

- To evaluate the use of population-based bio-inspired techniques in the optimization of consensus partitions of cluster ensembles, in terms of performance, robustness, novelty and stability; and
- To perform a robust comparison of CRO in this context, analysing its performance with several population-based bio-inspired techniques.

This paper is divided into eight sections and organized as follows. Section 2 describes the literature related to this issue, focusing on bio-inspired algorithms applied to optimizing the consensus function of a cluster ensemble. Section 3 briefly addresses cluster ensembles, the main subject of this paper. Section 4 introduces the optimization algorithms used in the proposed approach and comparative analysis. Section 5 describes the evaluation approach of this paper, while the methods and materials are presented in Sect. 6. Section 7 describes the results achieved and presents a comparative analysis. Finally, Sect. 8 summarizes the final thoughts of this article.

2 Related work

As mentioned previously, cluster ensembles are applied to improve the overall accuracy of individual clustering algorithms (Ghaemi et al. 2011). In order to achieve this goal, these systems combine multiple partitions generated by different clustering algorithms into a single consensus partition. Basically, a cluster ensemble consists of two stages:

- Initial partition: this stage represents the generation of the initial partitions by using different clustering algorithms;
- Ensemble consensus partition: this stage represents the combination of the initial partitions to obtain a consensus partition.

There is a wide variety of studies addressing both the initial partition and the ensemble consensus partition stages of building a cluster ensemble, such as in Esmin and Coelho (2013), Hu et al. (2016), Nisha et al. (2013), Sulaiman et al. (2009), and Zhong et al. (2015). More specifically, different approaches apply optimization techniques in one or both stages, such as in Azimi et al. (2009), Chatterjee and Mukhopadhyay (2013), Esmin and Coelho (2013), Ghaemi et al. (2011), and Yang et al. (2009). This paper is focused on optimizing the ensemble consensus partition. In this sense, the studies described hereafter in this section are related to the optimization of this stage.

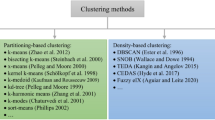

Several techniques have been used in the literature in an attempt to optimize the ensemble consensus partition, and the use of bio-inspired methods to achieve this goal has proved promising. The most common optimization algorithms are PSO (Particle Swarm Optimization), ACO (Ant Colony Optimization) and GA (Genetic Algorithm).

Genetic algorithm techniques have emerged as promising in the optimization of data clustering (José-García and Gómez-Flores 2016), as well as in the optimization of cluster ensembles. In Ghaemi et al. (2011), for instance, an approach was proposed which uses genetic algorithms to generate the consensus partition of a cluster ensemble. According to the authors, the proposed algorithm offered fast convergence, simplicity and robustness. Experimental results showed the method's efficiency on popular data sets. Another study using the genetic algorithm can be found in Chatterjee and Mukhopadhyay (2013), in which the authors use a multi-objective genetic algorithm approach for cluster ensembles.

The PSO algorithm has also been applied in the context of cluster ensembles. In Esmin and Coelho (2013), for instance, the authors proposed a PSO-based algorithm to generate the consensus partition in cluster ensembles. In the cited study, five different methods were used for the consensus partition, four of which are clustering algorithms (k-Means using Euclidean Distance, k-Means with Manhattan Distance, Maximum Expectancy and PSO), while the fifth was the voting method. Results showed that by using PSO in the consensus partition, it is possible to achieve a good performance (often better than the other analyzed methods). Another study using the PSO algorithm can be found in Yang et al. (2009), in which a PSO algorithm was used to optimize the parameters of a cluster ensemble. Adopting error rate as the fitness function, weight vectors were treated as particles in the search space.

The Ant Colony Optimization (ACO) algorithm has also been used to optimize the ensemble consensus partition. In Azimi et al. (2009), for instance, the authors proposed a new consensus function based on the ACO algorithm, which can automatically determine the number of clusters and generate the final partition for a cluster ensemble.

The majority of papers on the optimization of cluster ensembles evaluate the obtained consensus partition only in terms of performance, without assessing important aspects of a cluster ensemble, such as robustness, novelty and stability. Unlike most of the existing papers, this paper evaluates the optimization algorithms based on these aspects. Additionally, unlike the above-mentioned studies, which for the most part use one or two well-known bio-inspired techniques, this paper investigates the use of five bio-inspired techniques, aiming to perform a comprehensive investigation of bio-inspired techniques in the cluster ensemble context. Finally, this paper investigates the use of a recent optimization technique, namely the Coral Reefs Optimization (CRO), in the optimization of the cluster ensemble consensus partition. This algorithm yielded good results in the context of data clustering, and, precisely for this reason, it is important to analyze its efficiency as far as cluster ensembles are concerned.

3 Cluster ensembles

The combination of different clustering algorithms, also called cluster ensembles, consists of finding a final solution, a consensus partition, based on the combination of multiple partitions of a data set, resulting from multiple applications of one or more clustering algorithms. This consensus partition should be better than the initial partitions (Topchy et al. 2003). When using cluster ensembles, the goals of a consensus partition can usually be described by three parameters (Faceli et al. 2011), which are described as follows.

- Robustness: in this case, the consensus partition should obtain a more robust partition than the initial partitions;
- Novelty: in this case, the consensus partition should achieve an original partition, which cannot be individually obtained from any initial algorithm; and
- Stability: in this case, the consensus partition should find solutions of clusters with less sensitivity to noise, outliers, sampling variations or algorithm variance.

In the context of cluster ensembles, it is necessary to consider two important aspects: the generation of the initial partitions and the creation of a consensus function to combine the initial partitions. The following subsections briefly present the approaches commonly found in the literature to generate diversity in the initial partitions and obtain the consensus function, highlighting the most relevant approaches for this work.

3.1 The initial partitions

In order to have a final partition with good quality, it is important to have diversity in the generation of initial partitions, which means that initial partitions must be different from each other, in a way that each partition may add relevant information to the final partition. The diversity in the initial partitions can be obtained in the following way (Kuncheva 2004):

- Making use of different clustering algorithms;
- Performing the same algorithm several times with different initialization parameters (non-deterministic algorithms);
- Using different samples from the used data set.

In general, cluster ensembles can be homogeneous or heterogeneous. When the initial partitions are generated by the same clustering algorithm, the ensemble is said to be homogeneous. In this case, depending on the characteristics of the algorithm, diversity is obtained from the variation of the parameters given to the algorithm. In contrast, in cases where the initial partitions are generated by different clustering algorithms, the ensemble is said to be heterogeneous. In such cases, algorithms with different characteristics should be considered, so that the partitions generated can promote diversity.

3.2 The consensus function

The consensus function is defined as the combination of the generated initial partitions into a single final partition, also called consensus partition. As mentioned previously, the consensus partition must be better than the initial partitions and, due to this fact, the choice of a consensus function should be made carefully. The combination of initial partitions is a complex task, taking into account the absence of labels on objects to be clustered. This results in partitions not explicitly matching the initial partitions (Topchy et al. 2003). In fact, this problem is even worse when the partitions have different numbers of clusters, resulting in a computationally unmanageable problem (Topchy et al. 2003).

In order to apply population-based optimization techniques in the definition of the consensus partition, three main aspects must be taken into consideration: the representation of each possible solution, the creation of the initial population and the objective function. In relation to the first aspect, in most of the studies presented in the literature, such as in Chatterjee and Mukhopadhyay (2013), Esmin and Coelho (2013), and Yang et al. (2009), each solution is represented by a vector of integers. Suppose that \(X = \lbrace x_{1}, \ldots , x_{n}\rbrace\) is the data set in use, where n is the number of instances. A possible solution S is then represented by \(S = \{s_1, \ldots , s_n\}\). Each element \(s_i\) takes a value from 1 to K, where K is the number of groups of the partition, and represents the cluster assigned to instance \(x_i\). In this paper, we will use this representation for each solution of the analyzed optimization techniques.
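As an illustration of this integer-vector representation, the following minimal sketch encodes two solutions for a toy data set with n = 6 instances and K = 3 groups. The helper name `canonical_form` and the toy values are hypothetical and are not part of the original study; the helper only shows that two vectors with different label names can encode the same grouping.

```python
def canonical_form(solution):
    """Relabel clusters as 1..K in order of first appearance (hypothetical helper)."""
    mapping = {}
    relabeled = []
    for label in solution:
        if label not in mapping:
            mapping[label] = len(mapping) + 1
        relabeled.append(mapping[label])
    return relabeled


# Element i of each vector is the cluster assigned to instance x_i.
s_a = [1, 1, 2, 3, 3, 2]
s_b = [3, 3, 1, 2, 2, 1]  # same grouping as s_a, different label names

# Both vectors reduce to the same canonical form.
same_partition = canonical_form(s_a) == canonical_form(s_b)
```

This label ambiguity is one reason why partitions are usually compared with indexes that do not depend on label names.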

In relation to the initial population, there is no consensus about the best way to create this population. One possible approach, used in Chatterjee and Mukhopadhyay (2013), is to generate the initial population taking into consideration the whole set of initial partitions, along with a random aspect. However, in Chatterjee and Mukhopadhyay (2013), the authors did not explain how this random aspect is included in the initial population generation. In another approach, as used in Esmin and Coelho (2013), the initial population is generated using only the original data set and the initial partitions are used only in the objective function. In this paper, the generation of the initial population, described in Sect. 6.2, uses the initial partitions and a random aspect as basis.
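One possible way of combining the initial partitions with a random aspect can be sketched as follows. This is only an assumption made for illustration, since the actual procedure of Sect. 6.2 is not reproduced here: each individual starts as a copy of one initial partition and a small fraction of its labels is reassigned at random. The function name `init_population` and the parameter `noise_rate` are hypothetical.

```python
import random


def init_population(initial_partitions, pop_size, k, noise_rate=0.1, seed=0):
    """Seed a population from the initial partitions plus random perturbation.

    Assumption: labels are integers in 1..k and all partitions have the same length.
    """
    rng = random.Random(seed)
    population = []
    for i in range(pop_size):
        # Cycle through the initial partitions so that each one is represented.
        base = initial_partitions[i % len(initial_partitions)]
        # The "random aspect": each label is replaced with probability noise_rate.
        individual = [
            rng.randint(1, k) if rng.random() < noise_rate else label
            for label in base
        ]
        population.append(individual)
    return population


partitions = [[1, 1, 2, 2], [1, 2, 2, 1]]
population = init_population(partitions, pop_size=5, k=2)
```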

In relation to the objective function, there is a wide variety of functions that have been used in the literature, either in the mono-objective or in the multi-objective context. In the mono-objective context, the work of Esmin and Coelho (2013) uses an external index based on the functioning of PSO (Particle Swarm Optimization) as objective function. On the other hand, in the multi-objective context, the approach proposed in Chatterjee and Mukhopadhyay (2013) uses two objective functions. The first one maximizes the similarity of the consensus partition to all initial partitions, where the similarity between two partitions is calculated using the adjusted Rand index. The second objective minimizes the standard deviation among the similarity scores, in order to prevent the consensus partition from being very similar to one initial partition and very different from the others. In this work, two objective functions will be used, one external and one internal index (described in more detail in Sect. 6.3).
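The two objectives described for the multi-objective context can be sketched with a plain implementation of the adjusted Rand index. This is a minimal sketch, not the code of the cited study: the function names are hypothetical, the average is assumed as the way of aggregating the similarity scores, and the population standard deviation is assumed for the second objective.

```python
from collections import Counter
from math import comb
from statistics import mean, pstdev


def adjusted_rand_index(a, b):
    """Adjusted Rand index between two label vectors (assumes len(a) == len(b) >= 2)."""
    sum_ij = sum(comb(c, 2) for c in Counter(zip(a, b)).values())
    sum_a = sum(comb(c, 2) for c in Counter(a).values())
    sum_b = sum(comb(c, 2) for c in Counter(b).values())
    expected = sum_a * sum_b / comb(len(a), 2)
    max_index = (sum_a + sum_b) / 2
    if max_index == expected:  # degenerate case, e.g. both partitions have one cluster
        return 1.0
    return (sum_ij - expected) / (max_index - expected)


def objectives(candidate, initial_partitions):
    """Return (mean similarity, spread of similarity) to the initial partitions."""
    scores = [adjusted_rand_index(candidate, p) for p in initial_partitions]
    return mean(scores), pstdev(scores)  # first is maximized, second is minimized


initial = [[1, 1, 2, 2], [2, 2, 1, 1]]
similarity, spread = objectives([1, 1, 2, 2], initial)
```

Because the index ignores label names, the candidate above is scored as identical to both initial partitions.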

4 Population-based bio-inspired techniques

Optimization consists of finding an optimal solution for a given function, usually by maximizing or minimizing this function. The optimal solution is inherent to the specific problem to be optimized. In general, optimization techniques are used to solve complex problems, i.e., problems for which there is no simple, directly calculable solution (Simon 2013).

There are several optimization techniques proposed in the literature and the bio-inspired techniques are among the most promising ones (Simon 2013). There are two main classes of techniques that can be used in the optimization process: trajectory-based and population-based techniques. The first approach starts with one solution s and employs a local search to iteratively move from this solution s to an improved solution \(s^*\) in the neighborhood of s. The second approach starts with a population of solutions, instead of just one. In this paper, we will focus on the second approach and briefly describe the five well-known population-based techniques that will be used.

4.1 Coral reefs optimization—CRO

Proposed by Salcedo-Sanz et al. (2014a, 2014b), the CRO algorithm is a bio-inspired metaheuristic for optimization problems. By artificially simulating the processes related to coral and reef formation (reproduction of the corals and reef longevity), the CRO algorithm provides an efficient process for solving optimization problems. The CRO algorithm is described in the following steps.

1. Create an N × M square grid;
2. Randomly assign some squares to be occupied;
3. Start the reef formation:
   - (a) Apply external sexual reproduction (broadcast spawning);
   - (b) Apply internal sexual reproduction (brooding);
4. Start the larvae setting phase (populate the grid):
   - (a) Randomly set a coral to a square;
   - (b) If the square is empty then the coral sets and grows;
   - (c) Otherwise, this coral competes with the existing one by using its health function value;
   - (d) After a number of unsuccessful tries, the coral dies;
5. Start the budding phase (asexual reproduction):
   - (a) Sort the corals in the reef by using their level of healthiness;
   - (b) Duplicate a fraction of sorted corals;
   - (c) Try to settle in a different part by using step 4;
6. Start the degradation phase:
   - (a) Apply the degradation operator to a fraction of the worst health corals in the grid;
7. Stop or return to step 3.

Basically, the CRO algorithm creates an \(N \times M\) sized square grid, where each square (i, j) is able to allocate a coral (or colony of corals), representing one possible solution to a certain problem (step 1 of algorithm 1). Initially, the algorithm randomly assigns some squares to be occupied by corals and some other squares to be empty. In this way, empty cells are left for new corals to settle and grow (step 2 of algorithm 1). The occupation rate at the beginning of the algorithm is an important parameter of the CRO algorithm, which is defined as \(0< \rho < 1\).

After the reef initialization phase, a second phase of reef formation is carried out (step 3 of algorithm 1). In the CRO algorithm, corals reproduce at each iteration step, producing new individuals. Sexual reproduction has two phases: broadcast spawning (external sexual reproduction) and brooding (internal sexual reproduction). The first one (step 3(a) of algorithm 1) is similar to crossover, whereas the second one (step 3(b) of algorithm 1) is similar to mutation in an evolutionary context. Once all the larvae are formed, either by broadcast spawning or by brooding, they will try to set and grow in the reef; this phase is called larvae setting (step 4 of algorithm 1). First, the health function of each coral larva must be computed. Each larva is then randomly assigned to a square (cell) of the reef. If the cell is free, the coral sets and grows. Otherwise, it has to compete with the existing coral, using its health function value as the dispute parameter. A maximum number of attempts for a coral to set in the reef can be defined. After this number of unsuccessful tries, the larva is discarded.

After these three phases (broadcast spawning, brooding and larvae setting), a new phase called budding or fragmentation (asexual reproduction) begins (step 5 of algorithm 1). In this phase, the overall set of existing corals in the reef is sorted according to their level of healthiness. A fraction of the sorted corals is then duplicated and tries to settle in a different part of the reef by following the larvae setting process described above. In the last phase, called degradation (step 6 of algorithm 1), a small number of corals in the reef can be removed, freeing space in the reef for the next coral generation. The degradation operator is applied with a very small probability, and exclusively to a fraction of the worst health corals in the grid.

Then, a stopping criterion is evaluated and the algorithm either stops or returns to the reproduction of new individuals.
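The steps above can be sketched in a compact form. This is a simplified illustration and not the implementation used in this paper: the grid is flattened to a list of cells, solutions are lists of length at least 2, the health function is maximized, and the parameter names (`rho`, `fb`, `fa`, `fd`, `pd`, `attempts`) and their default values are assumptions chosen for the example, as are the one-point crossover and single-position mutation operators.

```python
import random


def cro(health, new_coral, n_cells=20, rho=0.6, fb=0.8, fa=0.1, fd=0.1,
        pd=0.05, attempts=3, iterations=50, seed=0):
    """Maximize `health` with a simplified Coral Reefs Optimization loop."""
    rng = random.Random(seed)
    # Steps 1-2: flattened grid, each cell occupied with probability rho.
    reef = [new_coral(rng) if rng.random() < rho else None for _ in range(n_cells)]
    if all(c is None for c in reef):
        reef[0] = new_coral(rng)

    def settle(larva):
        # Step 4: try a few random cells; take an empty one or beat its occupant.
        for _ in range(attempts):
            i = rng.randrange(n_cells)
            if reef[i] is None or health(larva) > health(reef[i]):
                reef[i] = larva
                return

    for _ in range(iterations):
        corals = [c for c in reef if c is not None]
        rng.shuffle(corals)
        n_spawn = int(fb * len(corals))
        spawners, brooders = corals[:n_spawn], corals[n_spawn:]
        larvae = []
        # Step 3(a): broadcast spawning, here a one-point crossover between pairs.
        for p1, p2 in zip(spawners[::2], spawners[1::2]):
            cut = rng.randrange(1, len(p1))
            larvae.append(p1[:cut] + p2[cut:])
        # Step 3(b): brooding, here a mutation of one randomly chosen position.
        for p in brooders:
            child = list(p)
            pos = rng.randrange(len(child))
            child[pos] = new_coral(rng)[pos]
            larvae.append(child)
        for larva in larvae:
            settle(larva)
        # Step 5: budding, the healthiest fraction is duplicated and resettled.
        ranked = sorted((c for c in reef if c is not None), key=health, reverse=True)
        for c in ranked[:max(1, int(fa * len(ranked)))]:
            settle(list(c))
        # Step 6: degradation, applied with probability pd to the worst corals.
        if rng.random() < pd:
            occupied = sorted((i for i in range(n_cells) if reef[i] is not None),
                              key=lambda i: health(reef[i]))
            for i in occupied[:int(fd * len(occupied))]:
                reef[i] = None
    return max((c for c in reef if c is not None), key=health)


# Toy usage: the health of a label vector is its agreement with a target vector.
target = [1, 1, 2, 2, 3, 3]


def health(solution):
    return sum(a == b for a, b in zip(solution, target))


def new_coral(rng):
    return [rng.randint(1, 3) for _ in target]


best = cro(health, new_coral)
```

In this sketch a coral is only displaced by a healthier larva and degradation only removes the least healthy cells, so the best health value in the reef never decreases from one iteration to the next.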

4.1.1 The extensions of the CRO algorithm

Initially proposed for optimization problems, the CRO algorithm was also applied to clustering problems, such as in Medeiros et al. (2015). In this study, the authors analyzed the effectiveness of the CRO algorithm in clustering problems, and also proposed three new modifications of the algorithm in order to improve its performance.

The first extension of the CRO algorithm, called CRO1, modifies step 2 of the original algorithm. Instead of placing the possible solutions in a random order in the grid, it uses a criterion to rank and organize these solutions in the grid. The idea is to introduce a notion of neighbourhood in the grid, so that similar solutions are placed closer to each other than dissimilar ones. The solutions are ranked in ascending order (from the worst to the best solution). In addition, in order to rank the possible solutions of the grid in each iteration, step 2 has to be included in the main loop of the algorithm. Therefore, step 7 of algorithm 1 also needs to be changed to include step 2 in the main loop.

The second extension of the CRO algorithm, called CRO2, simplifies the computation of CRO and gives all possible solutions of the problem a greater chance of survival. In the original CRO, the degradation phase (step 6) occurs in every iteration of the algorithm. We believe that this step eliminates the chances of survival of the least healthy corals in the grid. In evolution, a bad solution may become a good one when it is combined with good solutions. In this extension, we therefore apply the degradation phase less frequently than in the original CRO, but with a higher probability. The degradation phase is executed every N iterations (we will use \(N = 5\)), and the degradation probability is 10% in CRO2, compared with 5% in the original CRO.

The third and last extension of the CRO algorithm, called CRO3, combines the modifications proposed in the previous two extensions into a single algorithm. Therefore, CRO3 uses the ranked grid of CRO1 and applies the less frequent degradation phase and the higher degradation probability of CRO2.
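The difference between the degradation schedules of the original CRO and of CRO2 can be illustrated as follows. This is a sketch under one reading of the description above, namely that the degradation probability controls whether the phase is applied in an eligible iteration; the function name `degradation_applies` and the variant labels are hypothetical.

```python
import random


def degradation_applies(iteration, variant, rng):
    """Decide whether the degradation phase runs in this iteration."""
    if variant == "CRO2":
        every, probability = 5, 0.10  # every N = 5 iterations, 10%
    else:
        every, probability = 1, 0.05  # original CRO: every iteration, 5%
    return iteration % every == 0 and rng.random() < probability


rng = random.Random(0)
schedule = [degradation_applies(t, "CRO2", rng) for t in range(1, 21)]
```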

4.2 Genetic algorithm

Genetic Algorithms (GA) are evolutionary methods that can be defined as a search technique based on a metaphor of the biological process of natural evolution, and that can be used in search and optimization problems (Holland 1992).

A genetic algorithm is a population-based method in which a chromosome is used to describe each solution of a problem. In each step of a genetic algorithm, a population of chromosomes (individuals) is considered.

A genetic algorithm works as follows. The population of the first iteration (initial population) is usually chosen randomly. Then, the chromosomes of this population are evaluated using an objective (fitness) function that defines the goodness of an individual (chromosome) in the optimization problem. After this evaluation, some individuals are selected and genetic operators are applied to them, creating a new set of individuals (population). This loop, which involves evaluation, selection and genetic operators, is repeated until a stopping condition is reached. The main goal is to evolve these individuals, obtaining better chromosomes, ideally until the global optimum is reached (Holland 1992).
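The evaluation, selection and genetic operator loop can be sketched as follows. This is a minimal illustration and not the configuration used in the experiments: tournament selection, one-point crossover, single-position mutation and the keeping of the two best individuals are assumptions made for the example, as are all names and default values. Individuals are lists of length at least 2.

```python
import random


def genetic_algorithm(fitness, random_individual, pop_size=20, generations=50,
                      mutation_rate=0.1, seed=0):
    """Maximize `fitness` with a basic generational genetic algorithm."""
    rng = random.Random(seed)
    # Initial population chosen randomly.
    population = [random_individual(rng) for _ in range(pop_size)]
    for _ in range(generations):
        # Evaluation: rank individuals and keep the two best unchanged.
        ranked = sorted(population, key=fitness, reverse=True)
        next_population = ranked[:2]
        while len(next_population) < pop_size:
            # Selection: binary tournament for each parent.
            p1 = max(rng.sample(population, 2), key=fitness)
            p2 = max(rng.sample(population, 2), key=fitness)
            # Genetic operators: one-point crossover, then optional mutation.
            cut = rng.randrange(1, len(p1))
            child = p1[:cut] + p2[cut:]
            if rng.random() < mutation_rate:
                pos = rng.randrange(len(child))
                child[pos] = random_individual(rng)[pos]
            next_population.append(child)
        population = next_population
    return max(population, key=fitness)


# Toy usage: fitness is the agreement of a label vector with a target vector.
target = [1, 1, 2, 2, 3, 3]


def fitness(solution):
    return sum(a == b for a, b in zip(solution, target))


def random_individual(rng):
    return [rng.randint(1, 3) for _ in target]


best = genetic_algorithm(fitness, random_individual)
```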

Genetic algorithms are commonly used in data clustering problems and cluster ensembles. In this article, the performance of genetic algorithms will be analyzed to create the optimal consensus partition in cluster ensembles.

4.3 Ant colony optimization

Like genetic algorithms, Ant colony optimization (ACO) can be seen as a population-based method, which is applied to computational problems that can be reduced to finding good paths in graph structures. In other words, ACO was proposed as a nature-inspired meta-heuristic which aims to find solutions for hard combinatorial optimization (CO) problems (Dorigo 1992). ACO is modelled on the behavior of real ants while they search for food.

Basically, ACO applies a parallel search over several constructive computational threads. This search takes into consideration local problem data and simulates pheromone through a dynamic memory that contains information about the quality of previously obtained results. The main idea of ACO is that intelligent collective behavior can be achieved through the interaction of different search threads. The functioning of an ACO algorithm can be broadly expressed by repeating the following steps: (1) candidate solutions are built using a pheromone model, which is a parametric probability distribution over the solution space; (2) the pheromone values are updated using the candidate solutions, in order to bias the search towards high quality solutions.
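The two repeated steps can be sketched for label vectors as follows. This is a simplified illustration rather than the ACO variant evaluated in this paper: the pheromone model is assumed to be a table with one value per (instance, label) pair, and only the best solution found so far deposits pheromone, which is a simplification of the update in step (2). All names and default values are hypothetical.

```python
import random


def aco_labels(quality, n, k, n_ants=10, iterations=30, evaporation=0.1, seed=0):
    """Maximize `quality` over label vectors of length n with labels 1..k."""
    rng = random.Random(seed)
    # pheromone[i][c] is the desirability of assigning label c + 1 to instance i.
    pheromone = [[1.0] * k for _ in range(n)]
    best, best_quality = None, float("-inf")
    for _ in range(iterations):
        # Step (1): each ant builds a candidate by sampling from the pheromone model.
        ants = [
            [rng.choices(range(1, k + 1), weights=pheromone[i])[0] for i in range(n)]
            for _ in range(n_ants)
        ]
        for solution in ants:
            q = quality(solution)
            if q > best_quality:
                best, best_quality = solution, q
        # Step (2): evaporate all values, then reinforce the best solution so far.
        for i in range(n):
            for c in range(k):
                pheromone[i][c] *= 1.0 - evaporation
            pheromone[i][best[i] - 1] += 1.0
    return best


# Toy usage: quality is the agreement of a label vector with a target vector.
target = [1, 1, 2, 2, 3, 3]


def quality(solution):
    return sum(a == b for a, b in zip(solution, target))


best = aco_labels(quality, n=len(target), k=3)
```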

4.4 Bee colony optimization

Bee colony optimization (BCO) is a swarm intelligence method originally proposed in Karaboga (2005). The BCO algorithm simulates the behavior of real bees in finding food sources and sharing information with other bees (Karaboga 2005). Usually, there are a few agents (bees) which search the solution space at the same time. These artificial bees have incomplete information when solving the problem. In addition, there is no global control and the artificial bees are based on the concept of cooperation. Cooperation enables bees to be more efficient, and sometimes even to achieve goals they are not able to achieve individually. These algorithms are general algorithmic frameworks that can be applied to several optimization problems (Karaboga and Basturk 2008).

In the BCO algorithm, there are three types of bees: employed bees, onlooker bees, and scout bees. The employed bees are responsible for searching for food around the food source in their memory, and they share the food source information with the onlooker bees. The onlooker bees tend to select good food sources from those found by the employed bees. In this sense, the food sources that have higher quality (based on the objective function) will have a higher probability of being selected by the onlooker bees. Finally, the employed bees that abandon their food sources are turned into scout bees, which start a new search for food sources.
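The roles of the three types of bees can be sketched as follows. This is a simplified illustration and not the implementation evaluated in this paper: the neighbourhood move (copying one position from another food source), the abandonment threshold `limit` and all names and default values are assumptions, and the quality function is assumed to return non-negative values.

```python
import random


def bee_colony(quality, random_source, n_sources=10, limit=5, cycles=30, seed=0):
    """Maximize a non-negative `quality` with a simplified bee colony loop."""
    rng = random.Random(seed)
    sources = [random_source(rng) for _ in range(n_sources)]
    trials = [0] * n_sources  # consecutive failed improvements per food source
    best = max(sources, key=quality)

    def explore(i):
        # Search around source i by copying one position from another source.
        neighbour = list(sources[i])
        other = sources[rng.randrange(n_sources)]
        pos = rng.randrange(len(neighbour))
        neighbour[pos] = other[pos]
        if quality(neighbour) > quality(sources[i]):
            sources[i], trials[i] = neighbour, 0
        else:
            trials[i] += 1

    for _ in range(cycles):
        # Employed bees: one local search per food source.
        for i in range(n_sources):
            explore(i)
        # Onlooker bees: choose sources with probability proportional to quality.
        weights = [quality(s) + 1e-9 for s in sources]
        for i in rng.choices(range(n_sources), weights=weights, k=n_sources):
            explore(i)
        best = max(sources + [best], key=quality)
        # Scout bees: abandoned sources are replaced by new random ones.
        for i in range(n_sources):
            if trials[i] > limit:
                sources[i], trials[i] = random_source(rng), 0
    return best


# Toy usage: quality is the agreement of a label vector with a target vector.
target = [1, 1, 2, 2, 3, 3]


def quality(solution):
    return sum(a == b for a, b in zip(solution, target))


def random_source(rng):
    return [rng.randint(1, 3) for _ in target]


best = bee_colony(quality, random_source)
```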

4.5 Particle swarm optimization

This technique was originally proposed in Kennedy and Eberhart (1995) and can be considered a stochastic method inspired by the behavior of a flock of birds or the sociological behavior of a group of people. The captured behavior of a particle swarm is defined as follows: birds are randomly arranged in a flock and they look for food and for places in which a nest can be built. In the beginning, as there is no information about food and nest, birds fly with no guidance.

It is important to emphasize that they fly in flocks until food or a nest is found. When food or a nest is found by a bird, the ones that are close to it are attracted by this bird. Then, all birds can find food or a nest. According to Kennedy and Eberhart (1995), the intelligence in the behavior of birds is basically social. Therefore, it is possible for a bird to learn from the knowledge of a different one, since the chance of other birds finding food or a nest increases drastically when one bird finds them.

By analogy, the search space of a problem may be defined as the area flown over by the birds (particles), and an optimum solution is a position in which a bird finds food or a nest. As a result, PSO is an algorithm formed by a set of particles, each of which defines a possible solution to a problem. During its functioning, each particle searches for an improved position in the search space. This is done by updating a velocity parameter, based on rules inspired by behavioral models of flocks of birds.
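The velocity update can be illustrated with a widely used continuous formulation of PSO with an inertia weight. This is a generic sketch and not the discrete variant required for integer label vectors: the parameter names (`w`, `c1`, `c2`), their default values and the box constraint are assumptions made for the example.

```python
import random


def pso(objective, dim, n_particles=15, iterations=50, w=0.7, c1=1.5, c2=1.5,
        lo=-5.0, hi=5.0, seed=0):
    """Minimize `objective` over the box [lo, hi]^dim."""
    rng = random.Random(seed)
    xs = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vs = [[0.0] * dim for _ in range(n_particles)]
    pbest = [list(x) for x in xs]            # best position seen by each particle
    gbest = list(min(pbest, key=objective))  # best position seen by the swarm
    for _ in range(iterations):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia + attraction to own best + attraction to swarm best.
                vs[i][d] = (w * vs[i][d]
                            + c1 * r1 * (pbest[i][d] - xs[i][d])
                            + c2 * r2 * (gbest[d] - xs[i][d]))
                # Move the particle and keep it inside the box.
                xs[i][d] = min(hi, max(lo, xs[i][d] + vs[i][d]))
            if objective(xs[i]) < objective(pbest[i]):
                pbest[i] = list(xs[i])
                if objective(pbest[i]) < objective(gbest):
                    gbest = list(pbest[i])
    return gbest


def sphere(x):
    return sum(v * v for v in x)


best = pso(sphere, dim=3)
```

For label vectors, the continuous positions would additionally have to be mapped to integers in 1..K, for example by rounding; that mapping is not shown here.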

5 The evaluation approach

As mentioned previously, in this paper, we aim to present an evaluation approach that assesses the use of optimization techniques to provide cluster ensemble consensus partitions. Figure 1 summarizes the approach proposed in this work.

As it can be seen in Fig. 1, the evaluation approach can be described in the following steps.

1. The original data set X is divided into N subsets, \(\{X_1,\ldots ,X_N\}\), which can have the same size as the original data set (samples with replacement, as in Bagging or Boosting) or not (feature and/or instance selection methods for ensembles);
2. Once the subsets are created, they are presented to M clustering algorithms to provide the initial partitions \(P_1,\ldots ,P_m\). In this paper, we will use three well-known clustering algorithms, which are: k-Means, Expectation–Maximization (EM) and Hierarchical (Aggarwal and Reddy 2013);
3. Then, these partitions are combined in order to obtain a consensus partition. In other words, a consensus partition, CP, (also called final partition) will be generated, which results from the combination of the initial partitions created in step 2. It is given by Eq. 1.

   $$\begin{aligned} CP=Comb\{P_1,\ldots ,P_m\} \end{aligned} \tag{1}$$

   The generation of this Comb function is performed by an optimization technique, based on an objective function f(OF). In this paper, five population-based bio-inspired techniques will be used;
4. Finally, the final partition will be assessed in terms of performance (CR and DB indexes), as well as in terms of robustness, novelty and stability (described in Sect. 6.4).

We would like to emphasize the functioning of the evaluation approach, in which the consensus function is generated by an optimization technique. Figure 2 illustrates the functioning of this approach in terms of product (the result of each phase).

As we can observe, an optimization technique (OT) receives as input the initial partitions generated by the clustering algorithms, and then generates an initial population. The initial and consensus partitions are represented by an integer vector, containing the group assigned to each instance. Once the initial population is generated, the optimization process (based on the chosen optimization technique) is executed until a stopping criterion is reached, always keeping the best solutions in every iteration (an elitist procedure). After this process, the OT result is represented by the final population, from which one single individual is selected as the most relevant one; this individual is the final partition.
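The elitist loop over integer-vector partitions can be sketched generically as follows. This is a hedged illustration, not any of the five techniques evaluated in this paper: the function name `optimize_partition`, the simple random-reassignment perturbation and the parameters `n_iter` and `mutation_rate` are assumptions made for the example.

```python
import random


def optimize_partition(population, objective, n_groups,
                       n_iter=50, mutation_rate=0.05, maximize=True, seed=0):
    """Generic elitist search over integer-vector partitions (illustrative only)."""
    rng = random.Random(seed)
    sign = 1 if maximize else -1
    pop = [list(ind) for ind in population]
    size = len(pop)
    for _ in range(n_iter):
        # Perturb each individual by reassigning a few instances to random groups.
        children = [
            [rng.randint(1, n_groups) if rng.random() < mutation_rate else g
             for g in ind]
            for ind in pop
        ]
        # Elitism: keep the best `size` individuals among parents and children,
        # so the best objective value never gets worse between iterations.
        pop = sorted(pop + children,
                     key=lambda ind: sign * objective(ind),
                     reverse=True)[:size]
    # The first individual of the final population is the selected partition.
    return pop[0]
```

Because parents compete with their perturbed copies, the best solution found so far is always preserved, which is the property the elitist procedure is meant to guarantee.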

6 Experimental setting up

In order to analyze the efficiency of the population-based techniques when optimizing cluster ensembles, an experimental analysis will be conducted. The next subsections will describe important aspects of this investigation.

6.1 Data sets

In this analysis, twenty (20) data sets will be used, all obtained from the UCI Machine Learning Repository (Asuncion and Newman 2012). The main features of these data sets are succinctly described in Table 1. All data sets are pre-processed in order to correct issues such as attribute values in different scales and missing attribute values. Missing values of numerical attributes are replaced with the average value of the attribute, and missing values of categorical attributes are replaced with the most frequent category.

6.2 Optimization techniques

In all five optimization techniques, as mentioned previously, each possible solution is represented by an integer vector of size n (number of instances), in which each element is an integer ranging from 1 to K (number of groups), representing the group assigned to the corresponding instance.

In this paper, the initial population is generated from the initial partitions combined with a random component. Suppose that we have an ensemble with 3 clustering algorithms. In this case, we will have 3 integer vectors representing the initial partitions, \(A = \{a_1, \ldots , a_n\}, B = \{b_1, \ldots , b_n\}, C = \{c_1, \ldots , c_n\}\) and a random vector \(V_{random} = \{v_1, \ldots , v_n\}\). A solution of the initial population, \(S_i=\{s_1, \ldots , s_n\}\), is then created by randomly choosing, for each position, the corresponding element of A, B, C or \(V_{random}\). For instance, the \(s_1\) value is defined by randomly choosing among \(a_1, b_1, c_1\) and \(v_1\). When \(v_1\) is selected, the element comes from the random vector, that is, it is an integer drawn at random from 1 to K.
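The construction of the initial population can be sketched as below. The function name `initial_population` and its parameters are hypothetical, and the sketch assumes that every source (each initial partition and the random vector) is chosen with equal probability, which the text does not state explicitly.

```python
import random


def initial_population(partitions, n_groups, pop_size, seed=0):
    """Build solutions by mixing initial partitions with random assignments.

    partitions: list of integer vectors (e.g. A, B, C), all of length n.
    n_groups:   K, the number of groups; labels range from 1 to K.
    """
    rng = random.Random(seed)
    n = len(partitions[0])
    n_sources = len(partitions) + 1  # the extra source plays the role of V_random
    population = []
    for _ in range(pop_size):
        solution = []
        for j in range(n):
            source = rng.randrange(n_sources)
            if source == len(partitions):
                # Random source selected: draw a label uniformly from 1..K.
                solution.append(rng.randint(1, n_groups))
            else:
                # Copy the label that the chosen partition gives to instance j.
                solution.append(partitions[source][j])
        population.append(solution)
    return population
```

With three initial partitions, each element of a solution is therefore taken from A, B, C or the random source, position by position, as in the \(s_1\) example above.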

The parameter setting was done using ParamILS (Hutter et al. 2009), an automatic algorithm configuration framework. For simplicity, ParamILS was applied to select only two parameters for each data set and optimization technique: the number of individuals, in [10, 150], and the number of iterations, in [10, 200]. For the remaining parameters, we performed an initial analysis with a separate validation set for each data set, and we used different settings for all 20 data sets. The selected values can be described as follows.

- ACO: The main ACO parameters are \(\alpha \in [0.1,0.7]\), \(\beta \in [0.5,1.2]\) and \(\rho \in [0.1,0.4]\);

- BCO: The maximum number of iterations for which a solution can stagnate was set to a value between 10 and 20; the movement operator selects the components of a solution to be randomly changed; and we applied a simple local search whenever this algorithm needs to apply a search technique;

- CRO: The dimension of the grid varies between 80 and 200; the initial occupation rate was set to values higher than 0.85; the coral replication rate was set to a value in the interval [0.4, 0.6]; Fb-broadcast was set to 0.8 for all data sets, and Fd-depredation was set differently for each CRO extension (CRO1 = 0.05, CRO2 = 0.1 and CRO3 = 0.1); Steps Until Depredation was also set differently for each CRO extension (CRO1 = 1, CRO2 = 5 and CRO3 = 5); finally, we used a two-point crossover (crossover probability in [70%, 85%]) and a mutation operator (probability in [1%, 10%]);

- GA: a standard two-point crossover (crossover probability in [65%, 87%]) and bit-flipping mutation; a random and uniform choice of the parent solutions; and a population of 30–50 chromosomes. The mutation operator was applied with a probability in [2%, 13%], in order to avoid premature convergence;

- PSO: The initial probability that a particle follows its own way was set to values higher than 0.88; the initial probability that a particle moves to its best local position was set to a value in [0.04, 0.09]; and the initial probability that a particle moves to the best global position was set to a value in [0.03, 0.08]. We use a simple local search as the search technique and path re-linking as the velocity operator.

6.3 The objective functions

For mono-objective optimization problems, only one objective function f(OF) is usually applied. This function can be maximized, \(f_{c}(OF) = max \{{OF}\}\) or minimized, \(f_{c}(OF) = min \ \{OF\}\). In this paper, two different objective functions will be used, in all optimization techniques, which are:

- Adjusted Rand Index (ARI): it is an external index that determines how similar two partitions are, where one of the partitions must be a previously known data structure and the other is the partition being assessed (Aggarwal and Reddy 2013). Suppose that \(X = \lbrace x_{1}, \ldots , x_{n}\rbrace\) is the data set in use. Given two partitions \(P_A = \lbrace C_{1}, \ldots , C_{K}\rbrace\), composed of K groups, and \(P_B = \lbrace C_{1}, \ldots , C_{Q}\rbrace\), composed of Q groups, the ARI is calculated as follows.

$$\begin{aligned} ARI=\frac{\binom{n}{2}(a+d)-\left[ (a+b)(a+c)+(c+d)(b+d) \right] }{\binom{n}{2}^{2}-\left[ (a+b)(a+c)+(c+d)(b+d) \right] } \end{aligned}$$ (2)

where a represents the number of pairs of instances in the same cluster in both \(P_A\) and \(P_B\), b represents the number of pairs of instances in the same cluster in \(P_A\) but in different clusters in \(P_B\), c represents the number of pairs of instances in different clusters in \(P_A\) but in the same cluster in \(P_B\), and d represents the number of pairs of instances in different clusters in both \(P_A\) and \(P_B\).

- Davies–Bouldin (DB): it is an internal index that calculates the ratio between the dispersion inside the groups (compactness) and the dispersion among the groups (separability) (Aggarwal and Reddy 2013). Suppose that \(X = \lbrace x_{1}, \ldots , x_{n}\rbrace\) is the data set in use and \(P = \lbrace C_{1}, \ldots , C_{K}\rbrace\) is a partition composed of K groups. The DB is calculated as follows.

$$\begin{aligned} DB=\frac{1}{K} \sum _{r=1}^{K} \max _{s=1,\ldots ,K,\, s\ne r}(R_{rs}) \end{aligned}$$ (3)

where

$$\begin{aligned} R_{rs}=\frac{S_r + S_s}{ED(c_r,c_s)} \end{aligned}$$ (4)

and

$$\begin{aligned} S_r=\frac{1}{|C_{r}|}\sum _{x_i \in C_r}ED(x_i,c_r) \end{aligned}$$ (5)

\(S_r\) represents the average distance within group r and \(ED(c_r,c_s)\) is the Euclidean distance between the centroid of group r, \(c_r\), and the centroid of group s, \(c_s\). Finally, \(|C_{r}|\) is the cardinality of cluster r.
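Both objective functions can be computed directly from their definitions, as in the minimal sketch below. It follows Eqs. (2) to (5) literally using only the Python standard library; the function names are hypothetical, the ARI is computed by brute force over all pairs (quadratic in n), returning 1.0 when the ARI denominator is zero is an assumed convention, and the DB function assumes at least two groups with distinct centroids.

```python
from itertools import combinations
from math import comb, dist


def pair_counts(pa, pb):
    """Count the pairs a, b, c, d used in Eq. (2)."""
    a = b = c = d = 0
    for i, j in combinations(range(len(pa)), 2):
        same_a = pa[i] == pa[j]
        same_b = pb[i] == pb[j]
        if same_a and same_b:
            a += 1
        elif same_a:
            b += 1
        elif same_b:
            c += 1
        else:
            d += 1
    return a, b, c, d


def adjusted_rand_index(pa, pb):
    a, b, c, d = pair_counts(pa, pb)
    total = comb(len(pa), 2)
    expected = (a + b) * (a + c) + (c + d) * (b + d)
    denom = total ** 2 - expected
    if denom == 0:
        return 1.0  # assumed convention for the degenerate case
    return (total * (a + d) - expected) / denom


def davies_bouldin(points, labels):
    """Eqs. (3)-(5): points are coordinate tuples, labels are group ids."""
    clusters = {}
    for x, g in zip(points, labels):
        clusters.setdefault(g, []).append(x)
    centroids = {g: [sum(col) / len(xs) for col in zip(*xs)]
                 for g, xs in clusters.items()}
    # S_r: average Euclidean distance of the members of group r to its centroid.
    scatter = {g: sum(dist(x, centroids[g]) for x in xs) / len(xs)
               for g, xs in clusters.items()}
    groups = list(clusters)
    worst = [max((scatter[r] + scatter[s]) / dist(centroids[r], centroids[s])
                 for s in groups if s != r)
             for r in groups]
    return sum(worst) / len(groups)
```

Note that the ARI is maximized (higher values mean more similar partitions), whereas DB is minimized (lower values mean more compact and better separated groups).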

Therefore, for the generation of the ensemble consensus partition, two different configurations will be analyzed for each optimization technique, varying the objective function.

6.4 Evaluation parameters

As mentioned in Sect. 3, a consensus partition should be more robust, stable and different from the initial partitions. In this sense, we will assess the consensus partition using performance and these three parameters (robustness, novelty and stability).

In the performance analysis, two evaluation indexes will be used, Adjusted Rand Index (ARI) and Davies–Bouldin (DB). These are well known indexes and one is an external index (ARI) and the other one is an internal one (DB).

For robustness, we will use Eq. (6) and it represents the average gain in performance of the consensus partition, in relation to the initial partitions.

where \(Per_C\) is the performance of the consensus partition and \(Per_i\) is the performance of the i-th partition, and M is the number of initial partitions.
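As an illustration of this measure, the sketch below gives one plausible reading of the "average gain", assuming that the gain over each initial partition is the plain difference \(Per_C - Per_i\) averaged over the M initial partitions. The function name and the `higher_is_better` flag (used to flip the sign for indexes such as DB, where lower values are better) are assumptions of this example.

```python
def robustness(per_consensus, per_initial, higher_is_better=True):
    """Average gain of the consensus partition over the M initial partitions.

    Assumes the gain is a plain difference of performance values.
    """
    sign = 1.0 if higher_is_better else -1.0
    gains = [sign * (per_consensus - p) for p in per_initial]
    return sum(gains) / len(gains)
```

Under this reading, a positive value means that the consensus partition performs better, on average, than the initial partitions.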

For novelty, we will use Eq. (7), which represents the average dissimilarity between the consensus partition and the initial partitions.

where \(DIS(P_i,P_j)\) is a dissimilarity function between two vectors that sets 0 to the i-th element when the i-th element of both vectors are the same and 1 when the elements are different, M is the number of initial partitions and n is the number of instances in the data set.
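A minimal sketch of this measure is given below. It assumes that the element-wise dissimilarity is summed over the n instances, divided by n, and then averaged over the M initial partitions; this normalization and the function names are assumptions of the example. As in the description above, labels are compared position by position, without any relabeling of the groups.

```python
def dissimilarity(p, q):
    """Number of positions at which two label vectors differ."""
    return sum(1 for a, b in zip(p, q) if a != b)


def novelty(consensus, initial_partitions):
    """Average fraction of instances labeled differently from each initial partition."""
    n = len(consensus)
    m = len(initial_partitions)
    return sum(dissimilarity(consensus, p) / n for p in initial_partitions) / m
```

With this normalization the value lies in [0, 1], where 0 means that the consensus partition is identical to all initial partitions.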

Finally, stability will be assessed using Eq. (8), which represents the variation in performance of a consensus partition when varying the data set.

where NV is the number of variations of the data set and \(Per_{ave}\) is the average performance over all NV variations. The idea behind this equation is to vary the analysed data set and to assess the variation in performance of the consensus partition. In this paper, the variation was done using a resampling technique with different proportions for each class. For instance, for a balanced 4-class problem (25% of the instances for each class), with an initial proportion of [0.25, 0.25, 0.25, 0.25], we would have variations such as [0, 0.25, 0.25, 0.5] or [0.10, 0.3, 0.3, 0.3]. As there are too many possible variations, we restricted the analysis to 30 randomly selected variations. Therefore, in this paper, \(NV=30\).
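The resampling procedure and the resulting stability value can be sketched as follows. This is a hedged illustration: the stability function assumes that the "variation in performance" is measured as the population standard deviation around \(Per_{ave}\), and the resampling function assumes sampling with replacement within each class. Both function names and these choices are assumptions of the example.

```python
import random
from math import sqrt


def resample_by_class(labels, proportions, size, seed=0):
    """Return instance indices drawn so that each class has the given proportion.

    labels:      class label of each instance.
    proportions: mapping class -> fraction of the resampled data set.
    """
    rng = random.Random(seed)
    by_class = {}
    for idx, y in enumerate(labels):
        by_class.setdefault(y, []).append(idx)
    sample = []
    for y, p in proportions.items():
        k = round(p * size)
        # Sampling with replacement inside the class (an assumption).
        sample.extend(rng.choices(by_class[y], k=k))
    return sample


def stability(performances):
    """Spread of the NV performance values around their average (lower is more stable)."""
    nv = len(performances)
    ave = sum(performances) / nv
    return sqrt(sum((p - ave) ** 2 for p in performances) / nv)
```

In use, one would draw NV = 30 class-proportion vectors, resample the data set for each, obtain the consensus partition and its performance, and pass the 30 performance values to `stability`.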

6.5 Methods and materials

As mentioned previously, the initial partitions of the cluster ensemble are generated using three different clustering algorithms: k-Means, Expectation–Maximization and Hierarchical. These algorithms have been chosen based on their wide applicability in cluster ensembles. In addition, they are simple and easily applicable algorithms. In this work, the implementations of these algorithms were taken from the WEKA machine learning workbench (Hall et al. 2009).

Since the optimization techniques and clustering algorithms used in this work (except the Hierarchical algorithm) are not deterministic, 10 executions of each configuration are performed. In addition, for each clustering algorithm, the number of groups varies from 2 to 10. Therefore, for each configuration, there will be 90 results (10 executions \(\times\) 9 numbers of groups), which are averaged for presentation in this paper.

In addition, in order to validate the performance of the optimization techniques used in the empirical analysis from a statistical point of view, a statistical test will be applied. In this case, we will use the Friedman test and the post-hoc Nemenyi test, since these non-parametric tests are suitable for comparing the performance of different learning algorithms applied to several data sets. The Friedman test is used to compare the performance of all seven techniques: ACO, BCO, GA, PSO and the three extensions of CRO (CRO1, CRO2 and CRO3). The post-hoc Nemenyi test performs a two-by-two comparison when the Friedman test detects a statistical difference among the techniques.
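The two tests can be sketched with the standard library as follows. The sketch computes the average ranks per technique, the Friedman chi-square statistic (without tie correction) and the Nemenyi critical difference. The function names are hypothetical, and the critical value `q_alpha` must be supplied by the user from a studentized-range table; the value 2.949 used in the usage note is the commonly tabulated value for seven techniques at the 0.05 level and is an assumption of this example, not a value reported in this paper.

```python
from math import sqrt


def average_ranks(scores, higher_is_better=True):
    """scores: one row per data set, one column per technique. Ties share ranks."""
    k = len(scores[0])
    totals = [0.0] * k
    for row in scores:
        order = sorted(range(k), key=lambda j: row[j], reverse=higher_is_better)
        ranks = [0.0] * k
        i = 0
        while i < k:
            j = i
            # Extend the block of tied values and give them their mean rank.
            while j + 1 < k and row[order[j + 1]] == row[order[i]]:
                j += 1
            mean_rank = (i + j) / 2 + 1
            for t in range(i, j + 1):
                ranks[order[t]] = mean_rank
            i = j + 1
        for t in range(k):
            totals[t] += ranks[t]
    return [t / len(scores) for t in totals]


def friedman_statistic(scores, higher_is_better=True):
    """Friedman chi-square statistic with k - 1 degrees of freedom."""
    n, k = len(scores), len(scores[0])
    r = average_ranks(scores, higher_is_better)
    return 12 * n / (k * (k + 1)) * (sum(x * x for x in r) - k * (k + 1) ** 2 / 4)


def nemenyi_critical_difference(k, n, q_alpha):
    """Two techniques differ significantly if their average ranks differ by more than this."""
    return q_alpha * sqrt(k * (k + 1) / (6 * n))
```

For seven techniques and 20 data sets, `nemenyi_critical_difference(7, 20, 2.949)` gives a critical difference of roughly 2.01 in average rank. The Friedman statistic is compared against a chi-square distribution with k - 1 degrees of freedom to obtain the p-value.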

7 Results

As mentioned previously, this section presents the results of four existing optimization techniques together with three extensions of CRO. Therefore, seven different techniques will be assessed: Genetic Algorithms (GA), Particle Swarm Optimization (PSO), Ant Colony Optimization (ACO), Bee Colony Optimization (BCO), along with three extensions of CRO, called CRO1, CRO2 and CRO3. Note that each technique uses two different objective functions (ARI and DB), which gives a total of 14 combinations to be evaluated. Each combination is evaluated with the same index that it optimizes, for instance, ARI as objective function and ARI as evaluation measure.

In this paper, the empirical analysis is divided into four parts. In the first part, we will evaluate the performance (evaluation measures) of the population-based optimization techniques. In the second, third and fourth parts, a comparative analysis of all seven techniques using robustness, novelty and stability will be performed, respectively.

7.1 Performance

Table 2 shows the performance of all seven optimization techniques, using Adjusted Rand Index (ARI) and Davies–Bouldin (DB) as fitness functions and evaluation measures for each data set. The last row in this table is the overall value of the compared techniques for all analyzed data sets. The bold numbers represent the best average results for each data set.

As we can see in Table 2, PSO, CRO3 and CRO2 presented the best results. However, PSO obtained the best average result using the ARI and DB objective functions (with CRO3 being the second best in ARI and CRO2 in DB). Another important aspect to be observed in Table 2 is that all three CRO extensions (CRO1, CRO2 and CRO3) obtained the second, third and fourth best results, outperforming ACO, GA and BCO. Therefore, as a result of this analysis, we can state that, according to the performance results, the CRO variations obtained promising results, having better results than important bio-inspired algorithms, such as GA, ACO and BCO.

7.2 Robustness

In this section, we will investigate the robustness of the consensus partition, when compared to the initial partitions. Robustness, presented in Eq. (6), represents the average gain in performance reached by the consensus partition. Table 3 shows the robustness results for all seven techniques using Adjusted Rand Index (ARI) and Davies–Bouldin (DB). In this table, the bold numbers represent the best average results for each data set.

As we can see in Table 3, all the optimization techniques provided consensus partitions that are more robust than the initial partitions (positive values), showing the importance of using an efficient method to build the consensus partition. In addition, once again, the best robustness results were obtained by PSO, followed by CRO3, CRO2, CRO1, BCO, GA and ACO. An important aspect is that all three CRO extensions provided better average results than ACO, BCO and GA (as in the performance results).

7.3 Novelty

In this section, we aim at assessing how different (new) the consensus partition is from the initial partitions. Novelty, presented in Eq. (7), represents the average distance between the consensus partition and the initial partitions. Table 4 shows the novelty results for all seven techniques using Adjusted Rand Index (ARI) and Davies–Bouldin (DB). In this table, the bold numbers represent the best average results for each data set.

In analyzing Table 4, we observe that all the optimization techniques provide consensus partitions that differ from the initial partitions (positive values). However, an interesting aspect is that the optimization technique that provided the most different consensus partition was not PSO, but GA when using ARI and BCO when using the DB index. We believe that the exploitation aspect of GA guided the GA search towards different regions of the search space, leading to different consensus partitions. Nevertheless, these new regions do not provide robust consensus partitions. Finally, PSO and all three CRO extensions provided reasonably different consensus partitions, but they were not the best ones in terms of novelty for either ARI or DB.

7.4 Stability

Finally, in this section, we will analyze the behavior of the population-based algorithms based on the stability that the consensus partition can reach when varying the data set. Stability, presented in Eq. (8), represents the variation in performance of the consensus partition when different resamples of the data set are presented. The resampling procedure is explained in Sect. 6.4. Table 5 shows the stability results for all seven techniques using Adjusted Rand Index (ARI) and Davies–Bouldin (DB). In this table, the bold numbers represent the best average results for each data set.

As we can see in Table 5, as expected, all the optimization techniques had variations in the performance of the consensus partition when varying (resampling) the data set. However, unlike robustness and performance, the population-based algorithms that achieved the most stable consensus partitions were CRO1 and PSO. CRO1 obtained the best results for ARI and PSO for DB. An important aspect is the poor performance of PSO for ARI, showing that this optimization algorithm may provide the most robust consensus partitions, but they are not as stable as the ones provided by the three extensions of CRO. For DB, PSO obtained the best results, but was followed closely by CRO1, CRO2 and CRO3.

7.5 The statistical tests

In order to validate the performance of the optimization techniques used in the empirical analysis from a statistical point of view, we applied the Friedman test to compare the performance of all seven optimization techniques. Table 6 presents the results of the statistical test for all four evaluation parameters. The first line of each parameter presents the p-value of the Friedman test, and the results show that the differences in performance among the seven techniques are statistically significant, for all four evaluation parameters and both evaluation metrics (p values < 0.05).

As a result of the Friedman test, we applied the post-hoc test to make a two-by-two comparison. For simplicity, we will compare the CRO variations to PSO and BCO, since they were the best bio-inspired techniques, apart from CRO. In order to make a fair comparison, we applied the post-hoc test for each objective function (ARI and DB) individually, always comparing two techniques with the same objective function. In Table 6, the bold numbers represent the cases in which CRO is better than the other technique (PSO or BCO), from a statistical point of view. In contrast, the bold number cells represent the cases in which another technique is better than CRO. For the regular cells, the performance of both techniques is similar, from a statistical point of view.

When comparing CRO to BCO, the CRO technique had better performance (bold numbers) than the BCO technique in 38% of the analyzed cases (9 out of 24). In addition, the CRO technique had similar performance in 10 cases and worse results in 5 cases, all of them for novelty. When compared to PSO, CRO had similar results to PSO in 8 cases and better results (bold numbers) in 3 cases out of 36 as well as worse results in 11 cases. It is important to emphasize that the good performance obtained by BCO in stability did not lead to robust consensus partitions. On the other hand, CRO obtained reasonable stability (not as good as BCO), which was sufficient to achieve robust and stable consensus partitions.

As a result of the statistical analysis, we can state that the best overall performance was obtained by PSO, but CRO obtained better performance than BCO, GA and ACO, from a statistical point of view. We believe that the mechanisms of global and local bests used by PSO, which allow the individuals to select the best path, are important aspects in the search for optimal solutions and make PSO an efficient technique for cluster ensembles. Additionally, the idea behind CRO (external and internal sexual reproduction, among others) also makes CRO a robust technique for cluster ensembles, better than important bio-inspired techniques.

8 Final remarks

This paper presented an investigation of population-based optimization techniques to define the consensus partition for cluster ensembles. The main contribution of this paper is an empirical evaluation in terms of performance and three important parameters of cluster ensembles: robustness, novelty and stability. In addition, we investigated the use of the Coral Reefs Optimization (CRO) algorithm to provide the consensus partition from a set of initial partitions.

In order to assess the performance of the evaluation approach, an empirical analysis was conducted. In this analysis, the evaluation approach used seven optimization techniques and each one with two different objective functions (ARI and DB) and they were all applied to 20 different data sets.

As a result of this analysis, we can state that the CRO variations obtained competitive results when compared to important bio-inspired algorithms, such as GA, ACO and BCO. In fact, for performance, robustness and stability, a CRO extension provided either the best or second best results of all seven techniques. In general, we can conclude that the best overall performance was obtained by PSO, followed by CRO, BCO, GA and ACO. We believe that the mechanisms of global and local bests used by PSO, which allow the individuals to select the best path, are important aspects in the search for optimal solutions and make PSO an efficient technique for cluster ensembles. Additionally, the idea behind CRO (external and internal sexual reproduction, among others) also makes CRO a robust technique for cluster ensembles, better than important bio-inspired techniques.

As future work, we can include other meta-heuristic optimization techniques, mainly trajectory-based ones such as Simulated Annealing and GRASP, among others. The results can also be complemented by experiments with other objective functions as well as other evaluation measures. Finally, we could think of ways to modify CRO inspired by mechanisms adopted in PSO, which was the best performing algorithm.

References

Aggarwal CC, Reddy CK (2013) Data clustering: algorithms and applications. Chapman & Hall/CRC, Boca Raton

Asuncion A, Newman DJ (2012) UCI machine learning repository. http://ics.uci.edu/~mlearn/MLRepository.html

Azimi J, Cull P, Fern X (2009) Clustering ensembles using ants algorithm. Springer, Berlin, pp 295–304

Chatterjee S, Mukhopadhyay A (2013) Clustering ensemble: a multiobjective genetic algorithm based approach. In: International conference on computational intelligence: modeling, techniques and applications (CIMTA), pp 443–449

Dorigo M (1992) Optimization, learning and natural algorithms

Esmin AAA, Coelho RA (2013) Consensus clustering based on particle swarm optimization algorithm. In: 2013 IEEE international conference on systems, man, and cybernetics, pp 2280–2285. IEEE

Everitt BS, Landau S, Leese M, Stahl D (2011) Optimization clustering techniques. Wiley, London, pp 111–142. https://doi.org/10.1002/9780470977811.ch5

Faceli K, Lorena AC, Gama J, de Leon Ferreira de Carvalho ACP (2011) Artificial intelligence: a machine learning approach (in portuguese). LTC, Rio de Janeiro

Fred A, Lourenço A (2008) Cluster ensemble methods: from single clusterings to combined solutions. Springer, Berlin, pp 3–30

Ghaemi R, Sulaiman Nb, Ibrahim H, Mustapha N (2011) A review: accuracy optimization in clustering ensembles using genetic algorithms. Artif Intell Rev 35(4):287–318

Hall M, Frank E, Holmes G, Pfahringer B, Reutemann P, Witten IH (2009) The weka data mining software: an update. SIGKDD Explorations 11(1)

Holland JH (1992) Genetic algorithms. Sci Am 267(1):66–72

Hu J, Li T, Wang H, Fujita H (2016) Hierarchical cluster ensemble model based on knowledge granulation. Knowl-Based Syst 91(C):179–188

Hutter F, Hoos HH, Leyton-Brown K, Stützle T (2009) Paramils: an automatic algorithm configuration framework. J Artif Int Res 36(1):267–306

José-García A, Gómez-Flores W (2016) Automatic clustering using nature-inspired metaheuristics. Appl Soft Comput 41(C):192–213

Karaboga D (2005) An idea based on honey bee swarm for numerical optimization. Technical Report TR06, Erciyes University

Karaboga D, Basturk B (2008) On the performance of artificial bee colony (abc) algorithm. Appl Soft Comput 8(1):687–697

Kennedy J, Eberhart R (1995) Particle swarm optimization. In: Neural networks, 1995. Proceedings, IEEE international conference on, vol 4, pp 1942–1948

Kuncheva LI (2004) Combining pattern classifiers: methods and algorithms. Wiley, New Jersey

Medeiros IG, Xavier-Júnior JC, Canuto AMP (2015) Applying the coral reefs optimization algorithm to clustering problems. In: Proceedings of international joint conference on neural networks (IJCNN), vol 1, pp 1–8

Nisha MN, Mohanavalli S, Swathika R (2013) Improving the quality of clustering using cluster ensembles. In: Proceedings of 2013 IEEE conference on information and communication technologies (ICT 2013), pp 88–92. IEEE

Salcedo-Sanz S, Gallo-Marazuela D, Pastor-Sánchez A, Carro-Calvo L, Portilla-Figueras A, Prieto L (2014) Offshore wind farm design with the coral reefs optimization algorithm. Renew Energy 63:109–115

Salcedo-Sanz S, Casanova-Mateo C, Pastor-Sánchez A, Sánchez-Girón M (2014) Daily global solar radiation prediction based on a hybrid coral reefs optimization – extreme learning machine approach. Sol Energy 105:91–98

Salcedo-Sanz S, García-Díaz P, Portilla-Figueras JA, Ser JD, Gil-López S (2014) A coral reefs optimization algorithm for mobile network optimal deployment with electromagnetic pollution control criterion. Appl Soft Comput 24:239–248

Salcedo-Sanz S, Pastor-Sánchez A, Ser JD, Prieto L, Geem Z (2015) A coral reefs optimization algorithm with harmony search operators for accurate wind speed prediction. Renew Energy 75:93–101

Salcedo-Sanz S, Ser JD, Landa-Torres I, Gil-López S, Portilla-Figueras JA (2014) The coral reefs optimization algorithm: a novel metaheuristic for efficiently solving optimization problems. Sci World J 2014

Silva HM, Canuto AMP, Medeiros IG, Xavier-Júnior JC (2016) A bio-inspired optimization technique for cluster ensembles optimization. In: The 5th Brazilian conference on intelligent system (BRACIS). IEEE

Simon D (2013) Evolutionary optimization algorithms: biologically inspired and population-based approaches to computer intelligence

Sulaiman N, Ghaemi R, Ibrahim H, Mustapha N (2009) A survey: clustering ensembles techniques. World Acad Sci Eng Technol 38:636–645

Topchy A, Jain AK, Punch W (2003) Combining multiple weak clusterings. In: Proceedings of the IEEE international conference on data mining (ICDM 2003). Melbourne, Florida, USA, pp 331–338

Yang LY, Zhang JY, Wang WJ (2009) Cluster ensemble based on particle swarm optimization. In: Global congress on intelligent systems, pp 519–523. IEEE

Zhong C, Yue X, Zhang Z, Lei J (2015) A clustering ensemble: two-level-refined co-association matrix with path-based transformation. Pattern Recognit 48(8):2699–2709

Acknowledgements

This work has been financially supported partially by Capes/Brazil.

Canuto, A., Neto, A.F., Silva, H.M. et al. Population-based bio-inspired algorithms for cluster ensembles optimization. Nat Comput 19, 515–532 (2020). https://doi.org/10.1007/s11047-018-9682-1

DOI: https://doi.org/10.1007/s11047-018-9682-1