Abstract

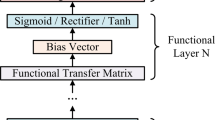

In this paper, a new multi-output neural model with tunable activation function (TAF) is presented, together with its general form. The model combines the traditional neural model with the TAF neural model. A recursive least squares algorithm is used to train a multilayer feedforward neural network that uses the new multi-output neural model with tunable activation function (MO-TAF). Simulation results show that the MO-TAF-enabled multilayer feedforward neural network has better capability and performance than both the traditional multilayer feedforward neural network and the feedforward neural network with tunable activation functions. In particular, it significantly simplifies the neural network architecture, improves accuracy, and speeds up convergence.

Cite this article

Shen, Y., Wang, B., Chen, F. et al. A New Multi-output Neural Model with Tunable Activation Function and its Applications. Neural Processing Letters 20, 85–104 (2004). https://doi.org/10.1007/s11063-004-0637-4

DOI: https://doi.org/10.1007/s11063-004-0637-4