Abstract

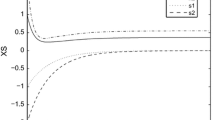

This paper studies the complete convergence of a class of competitive neural networks with different time scales, under the assumption that the activation functions are unsaturated piecewise linear functions. Under this assumption, the network has multiple equilibrium points, so traditional methods, which establish convergence to a single globally stable equilibrium, cannot be applied. Complete convergence (every trajectory converges to an equilibrium point) is proved by constructing an energy-like function. Simulations illustrate the theory.
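The abstract does not state the model equations, so the sketch below is illustrative only. It assumes a two-neuron network loosely patterned on the two-time-scale competitive form common in this literature: a fast short-term-memory state `x` obeying `EPS * dx/dt = -x + W f(x) + s + h`, and a slow long-term-memory state `s` obeying `ds/dt = -s + f(x)`, with the unsaturated piecewise linear activation `f(u) = max(0, u)`. The weights `W`, the constant input `h`, and `EPS` are hypothetical choices, not the paper's. At equilibrium `s = f(x)`, and solving `x = (W + I) f(x) + h` region by region gives three equilibria: `x = (1, -1)`, `x = (-1, 1)`, and `x = (1/3, 1/3)`, the last being a saddle. The simulation shows the multistable behaviour the abstract describes: different initial states settle at different equilibria, but each trajectory converges to one of them.

```python
import numpy as np

# Illustrative parameters (hypothetical, not from the paper).
W = np.array([[-1.0, -2.0],
              [-2.0, -1.0]])  # self-inhibition and lateral (competitive) inhibition
h = np.array([1.0, 1.0])      # constant external input (assumed)
EPS = 0.1                     # time-scale ratio: x (STM) is 10x faster than s (LTM)


def f(u):
    """Unsaturated piecewise linear activation f(u) = max(0, u); unbounded above."""
    return np.maximum(0.0, u)


def vector_field(x, s, eps=EPS):
    """Right-hand side of the assumed two-time-scale model."""
    fx = f(x)
    dx = (-x + W @ fx + s + h) / eps  # fast short-term-memory dynamics
    ds = -s + fx                      # slow long-term-memory dynamics
    return dx, ds


def simulate(x0, s0, dt=2e-3, steps=20_000):
    """Forward-Euler integration up to time dt * steps (= 40 by default)."""
    x = np.array(x0, dtype=float)
    s = np.array(s0, dtype=float)
    for _ in range(steps):
        dx, ds = vector_field(x, s)
        x = x + dt * dx
        s = s + dt * ds
    return x, s


def residual(x, s):
    """Largest |derivative| component; near zero means (x, s) is close to an equilibrium."""
    dx, ds = vector_field(x, s)
    return float(max(np.abs(dx).max(), np.abs(ds).max()))


if __name__ == "__main__":
    # Different initial states settle at different equilibria (multistability),
    # yet every trajectory converges to *some* equilibrium.
    for x0 in ([0.6, 0.2], [0.2, 0.6], [0.5, 0.5]):
        x, s = simulate(x0, [0.0, 0.0])
        print(x0, "->", np.round(x, 4), "residual:", residual(x, s))
```

An asymmetric start such as `(0.6, 0.2)` is expected to reach the winner-take-all equilibrium `(1, -1)`, while an exactly symmetric start stays on the symmetric subspace and reaches the saddle `(1/3, 1/3)`. Forward Euler is used only for transparency; a general-purpose ODE solver would serve equally well.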




About this article

Cite this article

Ye, M., Zhang, Y. Complete Convergence of Competitive Neural Networks with Different Time Scales. Neural Process Lett 21, 53–60 (2005). https://doi.org/10.1007/s11063-004-3427-0
